Diplomats and military experts from more than 90 countries gathered in Geneva in April 2015 for their second meeting on "lethal autonomous weapons systems," also known as fully autonomous weapons, or more colloquially, killer robots. Noted artificial intelligence expert Stuart Russell informed delegates the AI community is beginning to recognize the specter of autonomous weapons is damaging to its reputation and indicated several professional associations were moving toward votes to take a position on the topic.

On July 28, 2015, more than 1,000 AI professionals, roboticists, and others released an open letter promoting a "ban on offensive autonomous weapons beyond meaningful human control."

Thus, it is timely and appropriate that Communications is focusing on fully autonomous weapons, particularly the call for a preemptive prohibition on their development, production, and use as has been made by the Campaign to Stop Killer Robots, many in the AI community, more than 20 Nobel Peace Laureates, and many others.

There is no doubt that AI and greater autonomy can have military and humanitarian benefits. Most of those calling for a ban support AI and robotics research. But weaponizing fully autonomous robotic systems is seen by many as a step too far.

The Many Reasons to Ban Fully Autonomous Weapons

The central concern is with weapons that once activated, would be able to select and engage targets without further human involvement. There would no longer be a human operator deciding whom to fire at or when to shoot. Instead, the weapon system itself would undertake those tasks. This would constitute not just a new type of armament, but a new method of warfare that would radically change how wars are fought, and not to the betterment of humankind.

Many in the AI community have focused on the serious international security and proliferation concerns related to fully autonomous weapons. There is the real danger that if even one nation acquires these weapons, others may feel they have to follow suit in order to defend themselves and to avoid falling behind in a robotic arms race.

The open letter signed by some of the most renowned AI experts stated, "If any major military power pushes ahead with AI weapon development, a global arms race is virtually inevitable ... We therefore believe that a military AI arms race would not be beneficial for humanity. There are many ways in which AI can make battlefields safer for humans, especially civilians, without creating new tools for killing people ... [M]ost AI researchers have no interest in building AI weapons—and do not want others to tarnish their field by doing so, potentially creating a major public backlash against AI that curtails its future societal benefits."

There is also the prospect that fully autonomous weapons could be acquired by repressive regimes or non-state armed groups with little regard for the law. These weapons could be perfect tools of repression and terror for autocrats.

Another type of proliferation concern is that such weapons would increase the likelihood of armed attacks, making resort to war more likely, as decision makers would not have the same concerns about loss of soldiers' lives. This could have an overall destabilizing effect on international security.

For many people, these weapons would cross a fundamental moral and ethical line by ceding life and death decisions on the battlefield to machines. Giving such responsibilities to machines in such circumstances has been called the ultimate attack on human dignity. The notion of allowing compassionless robots to make decisions about the application of violent force is repugnant to many. Compassion is a key check on the killing of other human beings.

In my extensive engagement with a variety of audiences on this issue, it has been striking how most people have a visceral negative reaction to the notion of fully autonomous weapons. The Martens Clause, which is articulated in the Geneva Conventions and elsewhere, is a key provision in international law that takes into account this notion of general repugnance on the part of the public. Under the Martens Clause, fully autonomous weapons should comply with the "principles of humanity" and the "dictates of public conscience." They likely would not comply with either.

There are serious questions about whether fully autonomous weapons would ever be capable of complying with core principles of international humanitarian law (IHL) during combat, or international human rights law (IHRL) during law enforcement operations, border control, or other circumstances. There is of course no way of predicting what technology might produce many years from now, but there are strong reasons to be skeptical about compliance with international law in the future, including the basic principles of distinction and proportionality.

Could robots replicate the innately human qualities of judgment and intuition necessary to comply with IHL, including judgment of an individual's intentions, as well as subjective determinations? Compliance with the rule of proportionality prohibiting attacks in which expected civilian harm outweighs anticipated military gain would be especially difficult as this relies heavily on situational and contextual factors, which could change considerably with a slight alteration of the facts.

There are also serious concerns about the lack of accountability when fully autonomous weapons fail to comply with IHL in any particular engagement. Holding a human responsible for the actions of a robot that is acting autonomously could prove difficult, be it the operator, superior officer, programmer, or manufacturer.

Scientists and military leaders have also raised a host of technical and operational issues with these weapons that could pose grave dangers to civilians—and to soldiers—in the future. A particular concern for many is how "robot vs. robot" warfare would unfold, and how devices controlled by complex algorithms would interact.

Taken together, this multitude of concerns has led to the call for a preemptive prohibition on fully autonomous weapon systems: a new international treaty that would ban the development, production, and use of fully autonomous weapons, and require that there always be meaningful human control over targeting and kill decisions.

Rapid and Growing Support for a Ban

Over the past two years, the question of what to do about fully autonomous weapons has rocketed to the top ranks of concern in the field of disarmament and arms control, or what is now often called humanitarian disarmament. Within this short period of time, world leaders including the Secretary-General of the United Nations, the U.N. disarmament chief, and the head of the International Committee of the Red Cross, have expressed deep concerns about the development of fully autonomous weapons and urged immediate action.

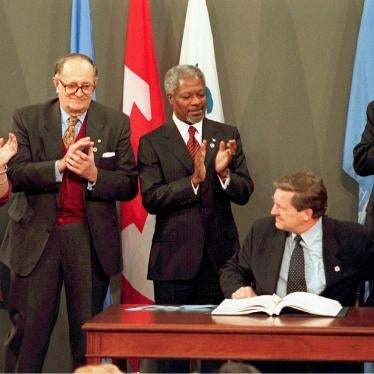

The Campaign to Stop Killer Robots—an international coalition of nongovernmental organizations (NGOs)—was launched in April 2013 calling for a preemptive ban on fully autonomous weapon systems. Coordinated by Human Rights Watch, it is modeled on the successful civil society campaigns that led to international bans on antipersonnel landmines, cluster munitions, and blinding lasers. A month after the campaign launched, the U.N. special rapporteur on extrajudicial killings presented the Human Rights Council with a report that echoed many of the campaign's concerns and called on governments to adopt national moratoria on the weapons.

In October 2013, more than 270 prominent engineers, computing and artificial intelligence experts, roboticists, and professionals from related disciplines issued a statement calling for a ban. This was organized by the International Committee for Robot Arms Control (ICRAC), which was founded in 2009 by roboticists, ethicists, and others. As noted earlier, in July 2015 more than 1,000 AI experts signed an open letter supporting a prohibition; the letter was organized by the Future of Life Institute.

During 2014, the European Parliament passed a resolution that calls for a ban, more than 20 Nobel Peace Laureates issued a joint statement in favor of a ban, and more than 70 prominent faith leaders from around the world released a statement calling for a ban. A Canadian robotics company, Clearpath, became the first commercial entity to support a ban and declare it will not work toward the development of fully autonomous weapons systems.

Governments have become seized with the issue of fully autonomous weapons since 2013, though few have articulated formal policy positions. Most importantly, the 120 States Parties to the Convention on Conventional Weapons (CCW) agreed in November 2013 to take up the issue, holding meetings in May 2014 and then again in April 2015. In the diplomatic world, the decision to take on killer robots was made at lightning speed.

It appears certain that nations will agree to continue their CCW deliberations next year, though questions remain about the nature, content, and duration of the work. A small but growing number of states have already called for a preemptive ban, while most participating states have expressed interest in discussing the concept of meaningful human control of weapons systems, indicating they see a need to draw a line before weapons become fully autonomous.

The April 2015 CCW experts meeting was by far the richest, most in-depth discussion held to date on autonomous weapons. Not a single state said it is actively pursuing them, yet the week featured extensive discussion about the potential benefits of such weapons.

The U.S. and Israel were the only states to explicitly say they were keeping the door open to the acquisition of fully autonomous weapons, but there are plenty of indications that many states are contemplating them. Without question, many advanced militaries are rapidly marching toward ever-greater autonomy in their weapons systems, and there are no stop signs in their path.

Why a Ban Is the Best Solution

Some oppose a preemptive and comprehensive prohibition, saying it is too early and we should "wait and see" where the technology takes us. Others believe restrictions would be more appropriate than a ban, limiting their use to specific situations and missions. Some say existing international humanitarian law will be sufficient to address the challenges posed.

The point of a preemptive treaty is to prevent future harm, and with all the dangers and concerns associated with fully autonomous weapons, it would be irresponsible to take a "wait and see" approach and only try to deal with the issue after the harm has already occurred. Once these weapons are developed, the step will be irreversible; it will not be possible to put the genie back in the bottle as the weapons spread rapidly around the world.

A preemptive treaty is not a new idea; it has been done before. The best example is the 1995 CCW protocol that bans blinding laser weapons. After initial opposition from the U.S. and others, states came to agree the weapons would pose unacceptable dangers to soldiers and civilians. The weapons were seen as counter to the dictates of public conscience, and nations came to recognize their militaries would be better off if no one had the weapons than if everyone had them. These same rationales apply to fully autonomous weapons.

While some rightly point out that there is no "proof" there cannot be a technological fix to the problems of fully autonomous weapons, it is equally true there is no proof there can be. Given the scientific uncertainty that exists, and given the potential benefits of a new legally binding instrument, the precautionary principle in international law is directly applicable. The principle suggests the international community need not wait for scientific certainty, but could and should take action now.

Fully autonomous weapons represent a new category of weapons that could change the way wars are fought and pose serious risks to civilians. As such, they demand new, specific law that clarifies and strengthens existing international humanitarian law.

A specific treaty banning a weapon is also the best way to stigmatize the weapon. Experience has shown that stigmatization has a powerful effect even on those who have not yet formally joined the treaty, inducing them to comply with the key provisions, lest they risk international condemnation. A regulatory approach restricting use to certain locations or to specific purposes would be prone to longer-term failure as countries would likely be tempted to use them in other, possibly inappropriate, ways during the heat of battle or in dire circumstances. Once legitimized, the weapons would no doubt be mass produced and proliferate worldwide; only a preemptive international treaty will prevent that.

The call for a ban on development of fully autonomous weapons is not aimed at impeding broader research into military robotics or weapons autonomy or full autonomy in the civilian sphere. It is not intended to curtail basic AI research in any way. Research and development activities should be banned if they are directed at technology that can only be used for fully autonomous weapons or that is explicitly intended for use in such weapons.

Conclusion

While there are at this stage still many doubters, my experience leads me to conclude a preemptive ban is not only warranted, but is achievable and is the only possible approach that would successfully address the potential dangers of fully autonomous weapons. However, the involvement, advice, and expertise of the AI community are needed both to get to a ban and to ensure it is the most effective ban possible.

The AI community has an important role to play in bringing about the ban on fully autonomous weapons. This is not a political issue to be avoided in the name of pure science, but rather an issue of humanity for which we are all responsible.