LAION, the German nonprofit organization that manages a dataset used to train popular artificial intelligence (AI) tools, has removed from its dataset some children’s photos that were secretly included and misused to power AI models that in turn could generate photorealistic deepfakes of other children.

The move follows the Stanford Internet Observatory’s December 2023 report that found known images of child sexual abuse in LAION’s dataset, as well as Human Rights Watch’s recent investigations that found personal photos of Australian and Brazilian children secretly scraped into the dataset.

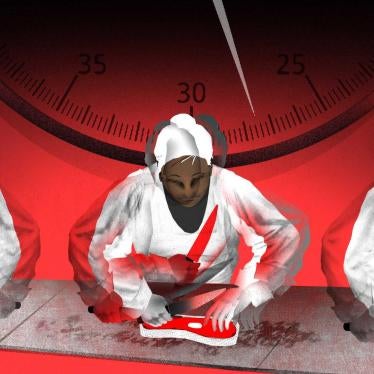

Training on photos of real children enables AI models to create convincing clones of any child. The images we found in LAION’s dataset captured the faces and bodies of 362 Australian children and 358 Brazilian children without their consent, and were used to train AI models that are used to create sexually explicit deepfakes of other children.

LAION confirmed our findings that some children’s photos were accompanied by sensitive information that, as we reported, made the children’s identities easily traceable. Human Rights Watch has confirmed that the children’s photos it identified to LAION have been removed from LAION’s newly released dataset.

LAION’s removal of these images is a positive step. It proves it is possible to remove children’s personal data from AI training datasets. It also acknowledges the gravity of the harm inflicted on children when their personal data is used to harm them and others in ways that are impossible to anticipate or guard against, due to the nature of AI systems.

But serious concerns remain. We reviewed only a tiny fraction – less than 0.0001 percent – of LAION’s dataset, and there are likely many more identifiable children whose photos remain in the dataset. AI models trained on the earlier dataset cannot forget the now-removed images. Moreover, Human Rights Watch was only able to conduct this research because LAION’s dataset is open source; datasets that are privately built and owned by AI companies continue to go unexamined.

This is why governments should pass laws that protect the data privacy of all children.

This month, the Australian government will announce whether it will honor its commitment to introduce the country’s first such law, a Children’s Privacy Code. And Brazil’s Senate will soon deliberate on a proposed law that protects children’s rights online, including their data privacy.

These are rare opportunities to meaningfully protect children. Lawmakers should do so.