The next revolution in warfare threatens to undermine fundamental principles of morality and law. Fully autonomous weapons, already under development in a number of countries, would have the power to select targets and fire on them without meaningful human control. In so doing, they would violate basic humanity and the public conscience.

International humanitarian law obliges countries to take these factors into account when evaluating new weapons. A longstanding provision known as the Martens Clause creates a legal duty to consider the moral implications of emerging technology. The Martens Clause states that when no existing treaty provision specifically applies, weapons should comply with the “principles of humanity” and the “dictates of public conscience.”

A new report from Human Rights Watch and Harvard Law School’s International Human Rights Clinic, of which I was the lead author, shows why fully autonomous weapons would fail both prongs of the test laid out in the Martens Clause. We conclude that the only adequate solution for dealing with these potential weapons is a preemptive ban on their development, production, and use.

More than 70 countries will convene at the United Nations in Geneva from August 27 to 31 to discuss what they refer to as lethal autonomous weapons systems. They will meet under the auspices of the Convention on Conventional Weapons, a major disarmament treaty. To avert a crisis of morality and a legal vacuum, countries should agree to start negotiations in 2019 on a treaty prohibiting these weapons.

With the rapid development of autonomous technology, the prospect of fully autonomous weapons is no longer a matter of science fiction. Experts have warned they could be fielded in years, not decades.

While opponents of fully autonomous weapons have highlighted a host of legal, accountability, security, and technological concerns, morality has been a dominant theme in international discussions since they began in 2013. At a UN Human Rights Council meeting that year, the UN expert on extrajudicial killing warned that humans should not delegate lethal decisions to machines that lack “morality and mortality.”

The inclusion of the Martens Clause in the Convention on Conventional Weapons underscores the need to consider morality in that forum, too.

Fully autonomous weapons would violate the principles of humanity because they could not respect human life and dignity. They would lack both compassion, which serves as a check on killing, and human judgment, which allows people to assess unforeseen situations and make decisions about how best to protect civilians. Fully autonomous weapons would make life-and-death decisions based on algorithms that objectify their human targets without bringing to bear an understanding of the value of human life based on lived experience.

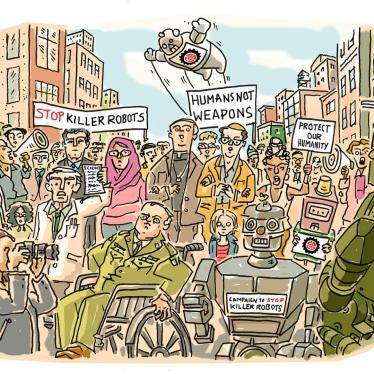

Fully autonomous weapons also run counter to the dictates of public conscience, which reflect an understanding of what is right and wrong. Traditional voices of conscience, including more than 160 religious leaders and more than 20 Nobel Peace Laureates, have publicly condemned fully autonomous weapons. Scientists, technology companies, and other experts by the thousands have joined the chorus of objectors. In July, a group of artificial intelligence researchers released a pledge, since signed by more than 3,000 people and 237 organisations, not to assist with the development of such weapons.

Governments have also expressed widespread concern about the prospect of losing control over the use of force. In April, for example, the African Group, one of the five regional groups at the United Nations, called for a preemptive ban on fully autonomous weapons, stating, “it is inhumane, abhorrent, repugnant, and against public conscience for humans to give up control to machines, allowing machines to decide who lives or dies.”

To date, 26 countries have endorsed the call for a prohibition on fully autonomous weapons. Dozens more have emphasised the need to maintain human control over the use of force. Their shared commitment to human control provides common ground on which to initiate negotiations for a new treaty on fully autonomous weapons.

Countries debating fully autonomous weapons at the United Nations next week must urgently heed the principles reflected in the Martens Clause. Countries should both reiterate their concerns about morality and law and act on them before this potential revolution in weaponry becomes a reality.