Human Rights Watch calls on the FTC to take a human rights-based approach in its recommendations on commercial data regulation, in order to protect all consumers. The FTC should also take a broad approach to the definition of data, given how much sensitive information can be obtained or inferred through third-party tracking. It should regulate the private sector’s treatment of personal data with clear standards and limitations on the collection and use of data by both state and private industry actors, including special provisions for data linked to children.

November 21, 2022

The Honorable April Tabor

Federal Trade Commission

Office of the Secretary

600 Pennsylvania Avenue NW, Suite CC-5610 (Annex B)

Washington, DC 20580

Submitted via Federal eRulemaking Portal: www.regulations.gov

Re: Commercial Surveillance ANPR, R111004

Dear Secretary Tabor:

Human Rights Watch offers the following comments in response to the Federal Trade Commission’s advance notice of proposed rulemaking and its request for public comment on the prevalence of commercial surveillance and data security practices that harm consumers, published in the Federal Register on August 22, 2022.[1]

Human Rights Watch has long advocated for governments to take action to protect consumers against business practices that undermine human rights.[2] All commercial surveillance and data security practices should be grounded in human rights standards, centered on enabling people to live a dignified life. The responsibility of companies to respect human rights is a widely recognized standard of expected corporate conduct, set out in the United Nations Guiding Principles on Business and Human Rights and other international human rights standards.[3] Companies’ responsibilities include preventing their services from being used in ways that cause or contribute to violations of human rights, even if they were not directly involved in perpetrating abuses. Governments are responsible for ensuring that businesses meet these responsibilities, and they are responsible for violations of consumers’ human rights if they fail to take necessary, appropriate, and reasonable measures to prevent and remedy such violations, or if they otherwise tolerate or contribute to these violations.

This submission focuses on the data collection practices of technology companies that undermine human rights. The practices or capabilities of technology companies implicate a range of human rights norms. Human Rights Watch advocates that the FTC take a broad approach to the definition of data, similar to the definition of “personal data” and “special category data” in the European Union’s General Data Protection Regulation (GDPR).[4] The FTC should adopt comprehensive data protection regulations that center human rights protections as the only way to protect all consumers. It should regulate the private sector’s treatment of personal data with clear standards and should limit both state and industry collection and use of people’s data to safeguard rights. The FTC’s response should go beyond consent-based regulation because human rights are a minimum standard that cannot be waived by consent, even if all potential uses of data could be foreseen.

Our submission does not address all questions in the notice and, because it groups related questions by the broad topics they address, it does not necessarily address questions in the order presented in the notice.

Potentially harmful practices

Question 1. Which practices do companies use to surveil consumers?

Technology companies can collect data about consumers through the services they provide, either by doing so themselves (“first-party tracking”) or by using tracking technologies built by third-party companies (“third-party tracking”), through which users’ data are collected and sent to these entities for further use.[5] Regardless of the purpose for which data is being collected, third-party tracking means data about consumers is being shared with companies that consumers may have no direct relationship with. As a result, consumers have little insight into the kinds of data collected about them, or into how that data is further processed and shared.

Third parties whose tracking code is embedded in a large number of services are able to gather and combine data about consumers from millions of different sources. The data collected through third-party tracking is both vast and granular: which website URLs a consumer has opened, where their cursor lingered on a website, their location, and their movements on an app. It is important to note that data collected through third-party tracking can be incredibly invasive. In 2018, for instance, a gay online dating app was found to share people’s HIV status with two analytics companies.[6] When mental health apps or reproductive and menstrual health apps embed third-party trackers, they risk sharing sensitive data about people’s mental, sexual, or reproductive health.

Whether data is collected through first-party or third-party tracking, the commercial data ecosystem has become de facto inescapable for consumers. Websites and apps can each contain hundreds of third-party trackers, including popular third-party tracking code from large technology companies like Alphabet, Meta, Microsoft, and Amazon. As a result, large tech companies have particularly granular insight into consumer behavior across the web, especially as they can combine this data with the vast troves of first-party data they collect directly from customers.

Ultimately, the average consumer often consents to their data being collected and processed without a true understanding of how that data will be used.[7] The human rights implications are significant: this poses a threat to the rights to privacy, non-discrimination and equality, freedom of expression and opinion, and effective remedy and redress, among many others.

Question 11. Which, if any, commercial incentives and business models lead to lax data security measures or harmful commercial surveillance practices? Are some commercial incentives and business models more likely to protect consumers than others? On which checks, if any, do companies rely to ensure that they do not cause harm to consumers?

The premise underlying the online advertising ecosystem is that everything people say or do online can be captured, used to build profiles, and mined to maximize attention and engagement on platforms while selling targeted advertisements. This business model relies on pervasive tracking and profiling of users that intrudes on their privacy and feeds algorithms that promote and amplify divisive and sensationalist content. Studies show that such content generates more engagement and, in turn, higher profits for companies. The pervasive surveillance upon which this model is built is fundamentally incompatible with human rights.

The troves of data routinely collected by tech companies amount to more than simply a threat to the right to privacy, however.

In the absence of strong and comprehensive federal data protection laws, it is easy for any actor, including companies, scammers, government agencies, political action committees, armed extremists, or abusive ex-partners, to purchase highly sensitive data about individuals, including data revealing or suggesting people’s medical history, religious beliefs,[8] or sexual orientation.[9] That exposes consumers, from activists to politicians, to security threats, including blackmail. In 2017, for instance, Amnesty International showed that it was possible to purchase data on 1,845,071 people listed as Muslim in the United States for $138,380.[10] The same year, journalists were able to purchase the web browsing data of three million consumers by creating a fake marketing company.[11] They were able to de-anonymize many users, including judges and politicians, revealing an intimate picture of their internet browsing habits.

In addition, the digital advertising ecosystem is so complex that it has become incredibly difficult – if not impossible – for consumers (and public interest researchers and journalists) to fully understand where data that companies collect about them ends up, or how it is used. These opaque systems present challenges to a person's right to access effective remedy and redress, or to take steps to protect themselves against potential rights violations. Without restrictions on the length of time that companies may store personal data, the threats outlined above effectively hang over everyone in perpetuity.

Question 12. Lax data security measures and harmful commercial surveillance injure different kinds of consumers (e.g., young people, workers, franchisees, small businesses, women, victims of stalking or domestic violence, racial minorities, the elderly) in different sectors (e.g., health, finance, employment) or in different segments or “stacks” of the internet economy. For example, harms arising from data security breaches in finance or healthcare may be different from those concerning discriminatory advertising on social media which may be different from those involving education technology. How, if at all, should potential new trade regulation rules address harms to different consumers across different sectors? Which commercial surveillance practices, if any, are unlawful such that new trade regulation rules should set out clear limitations or prohibitions on them? To what extent, if any, is a comprehensive regulatory approach better than a sectoral one for any given harm?

A comprehensive approach, rather than a sectoral one, is the only way to protect different kinds of consumers. All personal data should be granted legal protections; once information has been collected in one sector, it can be used to make sensitive inferences about a person in another sector or application.

Of course, harms arising from data security breaches in finance or healthcare may be different from those concerning discriminatory advertising on social media. In practice, however, it has become virtually impossible to clearly delineate between consumers in different sectors. For example, medical data is no longer contained solely within the domain of traditional healthcare settings, as consumers increasingly turn to technology services for information about their health and potential treatment. When consumers research information about their health conditions on websites or apps, or ask a voice assistant about their symptoms, this data may be collected, shared, combined, and further analyzed by both companies and state agencies to reveal intimate details about their health history and status. This has potential repercussions for their right to access healthcare and their right to non-discrimination and equality, should that information be used in decisions about whether to grant or deny access to services. When consumers use an app to track their period or their mood, the app (and every third-party advertising tracker embedded in it) receives data that reveals an intimate picture of a consumer’s health, even though the data is collected outside a healthcare setting.

Specific privacy considerations for children, including teenagers

Section (b): To what extent do commercial surveillance practices or lax data security measures harm children, including teenagers?

18. To what extent should trade regulation rules distinguish between different age groups among children (e.g., 13 to 15, 16 to 17, etc.)?

US legislation on the age of majority is generally consistent with international standards. Under the UN Convention on the Rights of the Child, a child is defined as every human being below the age of 18.[12] Similarly, in the United States, most states have set the age of majority at 18, with the exception of three states that have set a higher age of majority.[13]

Privacy is a human right, and all children are entitled to special protections that guard their privacy and the space for them to grow, play, and learn.[14]

Children may require different protections at different times, in different contexts and by different service providers.[15] As children’s capacities and needs evolve throughout their childhood, they use and experience online environments differently according to their context and their individual capabilities.

Age is only one factor by which a child may be particularly vulnerable to violations of their data privacy. For example, older children may require more protection, not less, as they may be accessing a broader range of services than younger children. For children who are survivors of abuse, ensuring the privacy of their personal data means the freedom to live safely, without exposing where they live, play, and go to school. For lesbian, gay, bisexual, and transgender children, privacy protections would enable them to safely seek health and life-saving information.

Children’s privacy is vital to ensuring their safety, agency, and dignity at all ages. If the FTC intends to ensure that companies fully protect children in complex digital environments, any proposed rules should seek to protect the privacy of all children, irrespective of age.

Question 13. The Commission here invites comment on commercial surveillance practices or lax data security measures that affect children, including teenagers. Are there practices or measures to which children or teenagers are particularly vulnerable or susceptible? For instance, are children and teenagers more likely than adults to be manipulated by practices designed to encourage the sharing of personal information?

and

Question 21. Should companies limit their uses of the information that they collect to the specific services for which children and teenagers or their parents sign up? Should new rules set out clear limits on personalized advertising to children and teenagers irrespective of parental consent? If so, on what basis? What harms stem from personalized advertising to children? What, if any, are the prevalent unfair or deceptive practices that result from personalized advertising to children and teenagers?

Profiling and targeting children on the basis of their actual or inferred characteristics not only infringes on their privacy, but also risks abusing or violating their other rights, particularly when this information is used to anticipate and guide them toward outcomes that are harmful or not in their best interest.[16]

Such practices also play an enormous role in shaping children’s online experiences and determining the information they see, which can influence, shape, or modify children’s opinions and thoughts in ways that exploit their lack of understanding, affect their ability to make autonomous choices, and limit their opportunities or development. Such practices may also have adverse consequences that continue to affect children at later stages of their lives.[17]

Persistent Identifiers

To figure out who people are on the internet, advertising technology (AdTech) companies tag each person with a string of numbers and letters that acts as a persistent and unique identifier: it points to a single child or their device, and it does not change.

Persistent identifiers enable AdTech companies to infer the interests and characteristics of a single child or person. Every time a child connects to the internet and comes into contact with tracking technology, any information collected about that child—where they live, who their friends are, what kind of device their family can afford for them—is tied back to the identifier associated with them by that AdTech company, resulting in a comprehensive profile over time. Data tied together in this way do not need a real name to be able to target a real child or person.
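To illustrate the mechanism described above, the following is a minimal Python sketch, assuming a single tracker embedded in several unrelated apps and websites. The identifier value, event fields, and the `build_profiles` function are hypothetical and do not describe any particular company’s system; the point is only that a fixed identifier lets scattered observations merge into one profile without a name.

```python
from collections import defaultdict

# Hypothetical persistent advertising identifier; real ones are random strings
# assigned to a device, but this value is made up for illustration.
AD_ID = "7f3e9a12-hypothetical-ad-id"

# Hypothetical events, as a single tracker embedded in several unrelated apps
# and websites might receive them over time.
events = [
    {"ad_id": AD_ID, "source": "learning_app", "key": "location", "value": "Springfield"},
    {"ad_id": AD_ID, "source": "game_app", "key": "device", "value": "low_cost_phone"},
    {"ad_id": AD_ID, "source": "chat_site", "key": "contacts", "value": "friend_a"},
    {"ad_id": AD_ID, "source": "video_site", "key": "interests", "value": "dinosaurs"},
    {"ad_id": "other-hypothetical-ad-id", "source": "game_app", "key": "interests", "value": "music"},
]


def build_profiles(events):
    """Merge every observation into one profile per identifier."""
    profiles = defaultdict(lambda: defaultdict(set))
    for event in events:
        # The identifier never changes, so observations from unrelated
        # sources accumulate in the same profile; no name is required.
        profiles[event["ad_id"]][event["key"]].add(event["value"])
    return profiles


profiles = build_profiles(events)
for key, values in sorted(profiles[AD_ID].items()):
    print(key, sorted(values))
```

Note that no event in the sketch contains a name, yet the resulting profile links the child’s location, device, contacts, and interests.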

In addition, computers can correctly re-identify virtually any person from an anonymized dataset, using just a few random pieces of anonymous information.[18] Given the risks of re-identification, the Children’s Online Privacy Protection Act (COPPA) recognizes persistent identifiers as personal information, granting them the same considerations and legal protections.[19]
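The following Python sketch is a toy illustration of why removing names does not make data anonymous. It is not the method used in the research cited above; the records, the roster, and the chosen attributes are hypothetical. It counts how many records are unique on a few ordinary attributes, then links any such unique record to a name from a second, named dataset.

```python
from collections import Counter

# Hypothetical "anonymized" dataset: names removed, ordinary attributes kept.
anonymized = [
    {"zip": "10001", "birth_year": 2010, "grade": 7, "browsing": "sports_site"},
    {"zip": "10001", "birth_year": 2010, "grade": 7, "browsing": "music_site"},
    {"zip": "10002", "birth_year": 2011, "grade": 6, "browsing": "support_forum"},
    {"zip": "10003", "birth_year": 2009, "grade": 8, "browsing": "health_site"},
]

# Hypothetical auxiliary data that does carry names, e.g. a leaked roster.
roster = [
    {"name": "Student B", "zip": "10002", "birth_year": 2011, "grade": 6},
    {"name": "Student C", "zip": "10001", "birth_year": 2010, "grade": 7},
]

# Attributes that are harmless alone but identifying in combination.
QUASI_IDENTIFIERS = ("zip", "birth_year", "grade")


def quasi_key(record):
    return tuple(record[attr] for attr in QUASI_IDENTIFIERS)


def unique_fraction(records):
    """Share of records that no other record matches on the quasi-identifiers."""
    counts = Counter(quasi_key(r) for r in records)
    return sum(1 for r in records if counts[quasi_key(r)] == 1) / len(records)


def reidentify(anonymized, auxiliary):
    """Link a name to an 'anonymous' record whenever exactly one record fits."""
    counts = Counter(quasi_key(r) for r in anonymized)
    matches = {}
    for person in auxiliary:
        k = quasi_key(person)
        if counts[k] == 1:
            matches[person["name"]] = next(r for r in anonymized if quasi_key(r) == k)
    return matches


print(unique_fraction(anonymized))
print(reidentify(anonymized, roster))
```

In this toy data, half the records are unique on just three attributes, and one child’s visit to a support forum is tied back to their name. Real datasets contain far more attributes, which makes unique combinations more common, not less.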

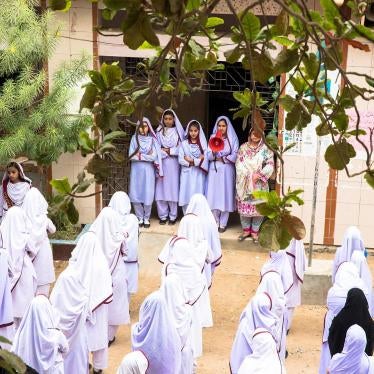

In May 2022, Human Rights Watch published a global investigation on the education technology (EdTech) endorsed by 49 governments of the world’s most populous countries for children’s education during the Covid-19 pandemic.[20] Over half (56 percent) of the online learning apps used by children around the world, including in the United States, could or did tag children and their devices with a persistent identifier designed solely to be used for advertising. This enabled these platforms to uniquely identify children and piece together the vast amounts of data they collected from them to guess at a child’s identity, location, interests, emotions, health, and relationships, and use these inferences to predict what a child might do next, or how they might be influenced, for commercial purposes and irrespective of the best interest of the child.

Behavioral Advertising

Children are particularly susceptible to advertising, due to their still-developing cognitive abilities and impulse inhibition. Research on children’s cognitive development in relation to television commercials has demonstrated that younger children, particularly those under 7 years old, cannot identify ads or understand their persuasive intent; children aged 12 and older begin to distinguish between organic content and advertisements, though this does not translate into an ability to resist marketing.[21] On the internet, much like adults, many older children and teenagers struggle to understand the opaque supply chain of commercial activity in which their personal data are valued, traded, and used.[22]

Children are at even greater risk of manipulation by behavioral advertising online.[23] When children’s data are collected for advertising, sophisticated algorithms extract and analyze overwhelming amounts of children’s personal data in order to tailor ads precisely. These ads are embedded in personalized digital platforms that further blur the distinction between organic and paid content. In doing so, behavioral advertising capitalizes on children’s inability to identify or think critically about persuasive intent, potentially manipulating them toward outcomes that may not be in their best interest.[24]

The Committee on the Rights of the Child has stated that countries “should prohibit by law the profiling or targeting of children of any age for commercial purposes on the basis of a digital record of their actual or inferred characteristics, including group or collective data, targeting by association or affinity profiling.”[25] In a statement issued to pediatric health care providers, industry, and policy makers, the American Academy of Pediatrics raised concerns “about the practice of tracking and using children’s digital behavior to inform targeted marketing campaigns, which may contribute to health disparities among vulnerable children or populations.”[26]

The United States and abroad

In its investigation, Human Rights Watch examined nine EdTech products recommended by the California and Texas Departments of Education. All nine products surveilled children or were capable of doing so. Eight of them sent children’s personal data to AdTech companies, or granted such companies access to it; these companies specialize in behavioral advertising, or their algorithms determine what children see online.[27]

How the FTC chooses to protect children in the United States will have implications for children globally. Many of the world’s most widely used EdTech products are made by American companies. Human Rights Watch found that 159 of the 163 online learning products (97.5 percent) used by children around the world sent, or had the capability to send, children’s personal data to at least one AdTech company headquartered in the United States.

On consent

Children and their guardians are generally denied the knowledge or opportunity to challenge these practices. Human Rights Watch found that, of the 290 companies it investigated, most did not disclose their surveillance of children and their data. As these tracking technologies were invisible to the user, children had no reasonably practical way of knowing the existence and extent of these data practices, much less the impacts on their rights. By withholding critical information, these companies also impeded children’s access to justice and remedy.

Even if children were aware of being surveilled in their virtual classrooms, they could not meaningfully opt out or refuse to provide their personal data to companies, without being excluded from their education.

Broadly, and irrespective of consent, commercial interests and behavioral advertising should not be considered legitimate grounds of data processing that override a child’s best interests or their fundamental rights.

Human Rights Watch calls on the Federal Trade Commission to:

- Ban the profiling of children, including through the use of persistent identifiers designed for advertising.

- Ban behavioral advertising to children, irrespective of consent.

Question 20. How extensive is the business-to-business market for children and teens' data? In this vein, should new trade regulation rules set out clear limits on transferring, sharing, or monetizing children and teens' personal information?

Human Rights Watch found that the data practices of an overwhelming majority of EdTech companies and their products risked or infringed on children’s rights.[28] As outlined in the United Nations Guiding Principles on Business and Human Rights, companies are responsible for preventing and mitigating abuses of children’s rights, including those they indirectly contribute to through their business relationships.

Out of 94 EdTech companies, 87 (93 percent) directly sent or had the capacity to grant access to children’s personal data to 199 companies, overwhelmingly AdTech. In many cases, this enabled the commercial exploitation of children’s personal data by third parties, including AdTech companies and advertisers, and put children’s rights at risk or directly infringed upon them.

Notably, 79 of these companies built and offered educational products designed specifically for children's use. In each of these products apparently designed for use by children, the EdTech company implemented tracking technologies to collect and to allow AdTech companies to collect personal data from children.

Of the 74 AdTech companies that responded to Human Rights Watch’s request for comment, an overwhelming majority did not state that they had operational procedures in place to prevent the ingestion or processing of children’s data, or to verify that the data they did receive comply with their own policies and applicable child data protection laws. Absent effective protections, AdTech companies appear to routinely ingest and use children’s data in the same way they do adults’ data.

COPPA does not restrict companies from collecting, using, and sharing children’s data for purposes not in the best interest of the child, including commercial interests and behavioral advertising.[29] This domestic law has shaped children’s digital experiences worldwide because many of the largest and most influential technology companies that provide global services, including the majority of AdTech companies covered in Human Rights Watch’s report, are headquartered in the US.

As a result, technology companies have faced little regulatory pressure or incentive to prioritize the safety and privacy of children in the design of their services. Most online service providers do not offer specific, age-appropriate data protections to children, and instead treat their child users as if they were adults.

Human Rights Watch calls on the Federal Trade Commission to:

- Ban the generation, collection, processing, use, and sharing of children’s data for advertising.

- Require companies to detect and prevent the commercial use of children’s data collected by tracking technologies.

- For companies collecting children’s data, require them to:

  - Stop sharing children’s data with third parties for purposes that are unnecessary, disproportionate, and not in the best interest of the child. In instances where children’s data are disclosed to a third party for a legitimate purpose, in line with child rights principles and data protection laws, enter into explicit contracts with third-party data processors, and apply strict limits to their processing, use, and retention of the data they receive.

  - Apply child flags[30] to any data shared with third parties, to ensure that adequate notice is provided to all companies in the technology stack that they are receiving children’s personal data, and thus obliged to apply enhanced protections in their processing of this data.

- For AdTech and other third-party companies receiving children’s data, require them to:

  - Regularly audit incoming data and the companies sending them. Delete or otherwise disable the commercial use of any received children’s data or user data received from child-directed services, when detected.

  - Notify and require companies that use AdTech tracking technologies to declare children’s data collected through these tools with a child flag or through other means, so that tagged data can be automatically flagged and deleted before transmission to third-party companies.

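The child-flag measures recommended above can be sketched in a few lines of Python, assuming a simple boolean marker travels with each piece of data. The `TrackingEvent` class, the `child_flag` field, and both functions are hypothetical illustrations, not an existing industry format. The sketch shows the two checkpoints the recommendations describe: the sender drops flagged data before transmission, and the receiver audits incoming data and sets aside anything flagged or originating from a known child-directed service.

```python
from dataclasses import dataclass


@dataclass
class TrackingEvent:
    source: str                # app or site where the data was collected
    payload: dict              # the collected data itself
    child_flag: bool = False   # hypothetical marker: "this is a child's data"


def before_transmission(events):
    """Sender side: drop child-flagged events before they leave for third parties."""
    return [e for e in events if not e.child_flag]


def audit_incoming(events, child_directed_sources):
    """Receiver side: set aside flagged data and data from known child-directed services."""
    accepted, quarantined = [], []
    for e in events:
        if e.child_flag or e.source in child_directed_sources:
            quarantined.append(e)  # to be deleted or excluded from commercial use
        else:
            accepted.append(e)
    return accepted, quarantined


events = [
    TrackingEvent("homework_app", {"page": "math_quiz"}, child_flag=True),
    TrackingEvent("news_site", {"page": "weather"}),
    TrackingEvent("kids_game", {"level": 3}),  # unflagged, but child-directed
]
sent = before_transmission(events)
accepted, quarantined = audit_incoming(sent, child_directed_sources={"kids_game"})
print([e.source for e in accepted], [e.source for e in quarantined])
```

The second checkpoint matters because flags can be missing: in the example, data from a child-directed game arrives unflagged and is caught only by the receiver’s own audit.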

Appropriate areas for regulation

Question 10. Which kinds of data should be subject to a potential trade regulation rule? Should it be limited to, for example, personally identifiable data, sensitive data, data about protected categories and their proxies, data that is linkable to a device, or non-aggregated data? Or should a potential rule be agnostic about kinds of data?

We strongly recommend that the FTC take a broad approach to the definition of data, similar to the definition of “personal data” and “special category data” in the European Union’s General Data Protection Regulation (GDPR).

Article 4 of the GDPR defines personal data as follows:

“Personal data” means any information relating to an identified or identifiable natural person (‘data subject’); an identifiable natural person is one who can be identified, directly or indirectly, in particular by reference to an identifier such as a name, an identification number, location data, an online identifier or to one or more factors specific to the physical, physiological, genetic, mental, economic, cultural or social identity of that natural person.

This definition encompasses online and device identifiers (e.g., IP addresses, cookies, or device identifiers), location data, usernames, and pseudonymous data.[31]

Any potential trade regulation rules should also be extended to data that is derived, inferred, and predicted from other data.

Article 9 of the GDPR defines special category data as follows:

[D]ata revealing racial or ethnic origin, political opinions, religious or philosophical beliefs, or trade union membership, and the processing of genetic data, biometric data for the purpose of uniquely identifying a natural person, data concerning health or data concerning a natural person’s sex life or sexual orientation.

There are several reasons for a broad, rights-based approach to data regulation:

Personally Identifiable Information is limited to information that can directly identify a consumer, such as information linked to their name, their telephone number, or their email address. Limiting protections to such data would exclude most data routinely collected and shared by companies, namely data that can be used to indirectly identify consumers. For example, data linked to devices like mobile phones, such as unique device identifiers or unique advertising identifiers, is routinely used in the advertising technology industry to track consumers across different devices, apps, and websites.

At the same time, technical advances, particularly in machine learning, have provided novel ways to identify consumers indirectly. Browser fingerprinting, for instance, lets companies track consumers covertly, without their knowledge and without relying on tracking technologies like cookies.

Advertising Technology (AdTech) companies can combine data from various sources to create detailed profiles of consumers. They can also derive, infer and predict even more granular information about consumers from secondary data, allowing for sensitive insights from seemingly mundane sources of data. Health data, for instance, can be derived or inferred from someone’s location data, if their location data reveals frequent visits to a healthcare provider. Similarly, someone visiting a website with resources about depression, or researching the side effects of medicine that’s commonly used to treat depression, could be profiled as a consumer with an interest in depression. A woman who uses a period tracking app and has not tracked a period in more than 40 days, has searched for “abortion” on the internet, and has visited the website of an abortion clinic may be profiled as seeking an abortion. Geolocation data could demonstrate that the same woman who stopped tracking her period and visited a website for abortion also visited an abortion clinic.

As a result, advertising technology companies and data brokers can create granular profiles of individual consumers that contain their real-time location, relationships, health interests, fears, and desires.
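The kind of inference described above can be illustrated with a minimal Python sketch. Actual profiling systems are opaque and typically far more sophisticated; the signal names, thresholds, segment labels, and the `infer_segments` function here are all hypothetical, chosen only to mirror the location-data and depression examples in the text. The sketch shows how simple rules turn mundane signals into sensitive labels.

```python
# Hypothetical inference rules; real systems are opaque, and these signal
# names and thresholds are made up to mirror the examples in the text.
INFERENCE_RULES = [
    ("health: frequent visits to a healthcare provider",
     lambda p: p.get("clinic_visits_last_30_days", 0) >= 3),
    ("interest: depression",
     lambda p: bool({"depression_resources", "antidepressant_side_effects"}
                    & p.get("pages_viewed", set()))),
]


def infer_segments(profile):
    """Return every sensitive segment whose rule matches the profile."""
    return [segment for segment, rule in INFERENCE_RULES if rule(profile)]


profile = {
    "clinic_visits_last_30_days": 4,  # derived from location data
    "pages_viewed": {"weather", "antidepressant_side_effects"},
}
print(infer_segments(profile))
```

Neither input is labeled as health data, yet both outputs are health inferences, which is why protections limited to data a company explicitly classifies as sensitive would miss them.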

Question 26. To what extent would any given new trade regulation rule on data security or commercial surveillance impede or enhance innovation? To what extent would such rules enhance or impede the development of certain kinds of products, services, and applications over others?

As the UN Special Rapporteur on the Right to Privacy has aptly observed, “Privacy enables the full development of the person, while protecting against harms that stunt human development, innovation and creativity, such as violence, discrimination and the loss of the freedoms of expression, association and peaceful assembly.”[32] As a corollary, “[l]osses of privacy weaken the universality of human rights and corrode societies and democracy.”[33]

Just as guardrails and good traffic rules ensure a safe flow of traffic, good data protection rules ensure that technology companies foster innovation and creativity in ways that are consistent with human rights.

Comprehensive data protection laws are essential for protecting human rights – most obviously, the right to privacy, but also many related freedoms that depend on our ability to make choices about how and with whom we share information about ourselves.

Question 43. To what extent, if at all, should new trade regulation rules impose limitations on companies' collection, use, and retention of consumer data? Should they, for example, institute data minimization requirements or purpose limitations, i.e., limit companies from collecting, retaining, using, or transferring consumer data beyond a certain predefined point? Or, similarly, should they require companies to collect, retain, use, or transfer consumer data only to the extent necessary to deliver the specific service that a given individual consumer explicitly seeks or those that are compatible with that specific service? If so, how? How should it determine or define which uses are compatible? How, moreover, could the Commission discern which data are relevant to achieving certain purposes and no more?

All states should adopt comprehensive data protection laws that center human rights protections. Governments should regulate the private sector’s treatment of personal data with clear laws, and limit both state and industry collection and use of people’s data to safeguard rights.[34] This should go beyond consent-based regulation because human rights are a minimum standard that cannot be waived by consent, even if all potential uses of data could be foreseen. Ultimately, the digital society may require many more substantive protections than a consent-based model can provide.

* * *

Thank you for the opportunity to submit comments on this proposed rulemaking. Please do not hesitate to contact us at kalthef@hrw.org if you have any questions.

Sincerely,

Frederike Kaltheuner

Director

Technology and Rights Division

Human Rights Watch

[1] Trade Regulation Rule on Commercial Surveillance and Data Security, 87 Fed. Reg. 51,273 (Aug. 22, 2022).

[2] See Economic Justice and Rights, Human Rights Watch, https://www.hrw.org/topic/economic-justice-and-rights (accessed November 18, 2022).

[3] See, e.g., UN Office of the High Comm’r for Human Rights, Guiding Principles on Business and Human Rights: Implementing the United Nations “Protect, Respect and Remedy” Framework, U.N. Doc. HR/PUB/11/04 (2011), https://digitallibrary.un.org/record/720245?ln=en.

[4] Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the Protection of Natural Persons with Regard to the Processing of Personal Data and on the Free Movement of Such Data, and Repealing Directive 95/46/EC, arts. 4(1), 9, OJ L 119/1 (May 4, 2016), https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32016R0679 [hereinafter General Data Protection Regulation or GDPR].

[5] First-party tracking: Companies can collect and use data for numerous reasons, including to deliver a service or to generate revenue. Third-party tracking: Companies integrate third-party trackers into their websites, apps, or services for a variety of reasons, including to better understand how consumers use their website or to display targeted advertising.

[6] Julia Belluz, Grindr Is Revealing Its Users’ HIV Status to Third-Party Companies, Vox, Apr. 3, 2018, https://www.vox.com/2018/4/2/17189078/grindr-hiv-status-data-sharing-privacy.

[7] See, e.g., Kevin Litman-Navarro, We Read 150 Privacy Policies. They Were an Incomprehensible Disaster, N.Y. Times, June 12, 2019, https://www.nytimes.com/interactive/2019/06/12/opinion/facebook-google-privacy-policies.html.

[8] Joseph Cox, How the US Military Buys Location Data from Ordinary Apps, Vice, Nov. 16, 2020, https://www.vice.com/en/article/jgqm5x/us-military-location-data-xmode-locate-x.

[9] Sara Morrison, This Outed Priest’s Story Is a Warning for Everyone About the Need for Data Privacy Laws, Vox, July 21, 2021, https://www.vox.com/recode/22587248/grindr-app-location-data-outed-priest-jeffrey-burrill-pillar-data-harvesting.

[10] “Muslim Registries,” Big Data and Human Rights, Amnesty Int’l (Feb. 27, 2017), https://www.amnesty.org/en/latest/research/2017/02/muslim-registries-big-data-and-human-rights/.

[11] Alex Hern, “Anonymous” Browsing Data Can Be Easily Exposed, Researchers Reveal, Guardian, Aug. 1, 2017, https://www.theguardian.com/technology/2017/aug/01/data-browsing-habits-brokers.

[12] Convention on the Rights of the Child, art. 1, Nov. 20, 1989, 1577 U.N.T.S. 3 (entered into force September 2, 1990). The United States signed the Convention on the Rights of the Child in 1995 but has not ratified it.

[13] Alabama and Nebraska have set their age of majority at 19; Mississippi has set its age of majority at 21. Ala. Code § 26-1-1; Neb. Rev. Stat. § 43-2101; Miss. Code tit. 1, § 1-3-27.

[14] In his 2021 report to the UN Human Rights Council, the Special Rapporteur on the right to privacy stated that children’s right to privacy “enables their access to their other rights critical to developing personality and personhood, such as the rights to freedom of expression and of association and the right to health, among others.” See UN Human Rights Council, Rep. of the Special Rapporteur on the Right to Privacy, ¶¶ 67-76, U.N. Doc. A/HRC/46/37 (Jan. 25, 2021). See also International Covenant on Civil and Political Rights (ICCPR), art. 17, December 16, 1966, 999 U.N.T.S. 171 (entered into force March 23, 1976; ratified by United States June 8, 1992); Convention on the Rights of the Child, art. 16; Convention on the Rights of Persons with Disabilities (CRPD), art. 22, December 13, 2006, 2515 U.N.T.S. 3 (entered into force May 3, 2008). The United States signed the CRPD in 2009 but has not ratified it.

[15] United Nations Committee on the Rights of the Child (CRC), General Comment No. 25 on Children’s Rights in Relation to the Digital Environment, ¶¶ 19-21, U.N. Doc. CRC/C/GC/25 (Mar. 2, 2021).

[16] Human Rights Watch, “How Dare They Peep into My Private Life?”: Children’s Rights Violations by Governments that Endorsed Online Learning During the Covid-19 Pandemic (2022), https://www.hrw.org/report/2022/05/25/how-dare-they-peep-my-private-life/childrens-rights-violations-governments.

[17] Council of Europe, Algorithms and Human Rights: Study on the Human Rights Dimensions of Automated Data Processing Techniques and Possible Regulatory Implications, DGI (2017)12, March 2018, at 15-16, https://rm.coe.int/algorithms-and-human-rights-en-rev/16807956b5.

[18] Computer scientists have established that personal information cannot be protected by current methods of “anonymizing” data. Advertisers, data brokers, and others have long shared and sold people’s personal information without violating privacy laws, on the grounds that they anonymize this data by stripping out people’s real names. However, computer algorithms can correctly re-identify, for example, 99.98 percent of Americans from almost any anonymized dataset using just 15 demographic attributes, such as gender, ZIP code, or marital status. Similarly, knowing just four random pieces of information from an anonymized dataset is enough to re-identify shoppers as unique individuals and uncover the rest of their credit card records, or to uniquely identify people from four locations they had previously visited. See Luc Rocher, Julien M. Hendrickx & Yves-Alexandre de Montjoye, Estimating the Success of Re-Identifications in Incomplete Datasets Using Generative Models, 10 Nature Communications (July 23, 2019), doi: 10.1038/s41467-019-10933-3; Yves-Alexandre de Montjoye et al., Unique in the Shopping Mall: On the Reidentifiability of Credit Card Metadata, 347 Science 536-39 (2015), doi: 10.1126/science.1256297; Yves-Alexandre de Montjoye et al., Unique in the Crowd: The Privacy Bounds of Human Mobility, 3 Nature Sci. Repts. 1376 (2013), doi: 10.1038/srep01376.

[19] Complying with COPPA: Frequently Asked Questions, art. A(3), US Fed. Trade Comm’n (July 2020), https://www.ftc.gov/tips-advice/business-center/guidance/complying-coppa-frequently-asked-questions-0.

[20] Human Rights Watch, “How Dare They Peep into My Private Life?”

[21] Deborah Roedder John, Consumer Socialization of Children: A Retrospective Look at Twenty-Five Years of Research, 26 J. Consumer Res. 183 (1999), doi: 10.1086/209559; Brian L. Wilcox et al., Report of the APA Task Force on Advertising and Children, Am. Psychological Assoc. (Feb. 20, 2004), https://www.apa.org/pi/families/resources/advertising-children.pdf.

[22] Sonia Livingstone, Mariya Stoilova & Rishita Nandagiri, London Sch. of Econ. & Pol. Sci., Children’s Data and Privacy Online: Growing Up In a Digital Age. An Evidence Review 15 (2019), http://eprints.lse.ac.uk/101283/1/Livingstone_childrens_data_and_privacy_online_evidence_review_published.pdf; Jenny Radesky et al., American Academy of Pediatrics Policy Statement: Digital Advertising to Children, 146 Pediatrics e20201681 (2020), doi: 10.1542/peds.2020-1681.

[23] Livingstone, Stoilova, and Nandagiri, Children’s Data and Privacy Online, at 15; Radesky et al., American Academy of Pediatrics Policy Statement: Digital Advertising to Children; Eva A. van Reijmersdal et al., Processes and Effects of Targeted Online Advertising Among Children, 36 Int’l J. Advertising 396 (2017), doi: 10.1080/02650487.2016.1196904.

[24] One study of 231 Dutch children aged 9-13 years found that children process behavioral advertising in fundamentally different ways than adults do. Children processed behavioral ads non-critically and did not seem to understand the targeting tactic or think that profile-targeted ads were more relevant to them. At the same time, seeing behavioral ads that were targeted at their interests and hobbies proved to be effective in creating positive associations toward the brand and increased children’s intention to buy the products. See van Reijmersdal et al., Processes and Effects of Targeted Online Advertising Among Children, at 396-414.

[25] Committee on the Rights of the Child, General Comment No. 25, ¶ 42.

[26] Radesky et al., American Academy of Pediatrics Policy Statement: Digital Advertising to Children.

[27] Detailed information and technical evidence can be found at Human Rights Watch, #StudentsNotProducts, https://www.hrw.org/StudentsNotProducts#explore.

[28] Human Rights Watch, “How Dare They Peep into My Private Life?”: Children’s Rights Violations by Governments that Endorsed Online Learning During the Covid-19 Pandemic (New York: Human Rights Watch, May 25, 2022), https://www.hrw.org/report/2022/05/25/how-dare-they-peep-my-private-life/childrens-rights-violations-governments.

[29] Children’s Online Privacy Protection Act, 15 U.S.C. §§ 6501-6506.

[30] These are tags that can be applied to data, or to data transmissions, to mark that data as child-directed and therefore subject to higher protections.

[31] The EU General Data Protection Regulation: Questions and Answers, Human Rights Watch (June 6, 2018), https://www.hrw.org/news/2018/06/06/eu-general-data-protection-regulation.

[32] UN Human Rights Council, Rep. of the Special Rapporteur on the Right to Privacy, ¶ 16, U.N. Doc. A/HRC/43/52 (Mar. 24, 2020), https://documents-dds-ny.un.org/doc/UNDOC/GEN/G20/071/66/PDF/G2007166.pdf?OpenElement.

[33] Id. ¶ 19(b).

[34] The EU General Data Protection Regulation: Questions and Answers, Human Rights Watch (June 6, 2018), https://www.hrw.org/news/2018/06/06/eu-general-data-protection-regulation.