Summary

On school days, 9-year-old Rodin wakes up every morning at 8 a.m. in Istanbul, Turkey. He eats a bowl of chocolate cereal for breakfast; his mother reminds him, as she always does, to brush his teeth afterwards. By 9 a.m., he logs into class and waves hello to his teacher and to his classmates. He hopes that no one can tell that he’s a little sleepy, or that he’s behind on his homework.

During breaks between classes, Rodin reads chat messages from his classmates and idly doodles on the virtual whiteboard that his teacher leaves open. He watches his best friend draw a cat; he thinks his friend is much better at drawing than he is. Later in the afternoon, Rodin opens up a website to watch the nationally televised math class for that day. At the end of each day, he posts a picture of his homework to his teacher’s social media page.

Unbeknownst to him, an invisible swarm of tracking technologies surveils Rodin’s online interactions throughout his day. Within milliseconds of Rodin logging into class in the morning, his school’s online learning platform begins tracking his physical location: at home in his family’s living room, where he has spent most of his days during the pandemic lockdown. The virtual whiteboard passes along information about his doodling habits to advertising technology (AdTech) and other companies. When Rodin’s math class is over, trackers follow him out of his virtual classroom and to the different apps and sites he visits across the internet. The social media platform Rodin uses to post his homework silently accesses his phone’s contact list and downloads personal details about his family and friends. Sophisticated algorithms review this trove of data, which is enough to piece together an intimate portrait of Rodin and to figure out how he might be easily influenced.

Neither Rodin nor his mother was aware that this was going on. His teacher had told them only that he had to use these platforms every day to be marked as attending school during the Covid-19 pandemic.[1]

This report is a global investigation of the education technology (EdTech) endorsed by 49 governments for children’s education during the pandemic. Based on technical and policy analysis of 163 EdTech products, Human Rights Watch finds that governments’ endorsements of the majority of these online learning platforms put at risk or directly violated children’s privacy and other rights, for purposes unrelated to their education.

The coronavirus pandemic upended the lives and learning of children around the world. Most countries pivoted to some form of online learning, replacing physical classrooms with EdTech websites and apps; this helped fill urgent gaps in delivering some form of education to many children.

But in their rush to connect children to virtual classrooms, few governments checked whether the EdTech products they were rapidly endorsing or procuring for schools were safe for children. As a result, children whose families were able to afford access to the internet and connected devices, or who made hard sacrifices to do so, were exposed to the privacy practices of the EdTech products they were told or required to use during Covid-19 school closures.

Human Rights Watch conducted its technical analysis of the products between March and August 2021, and subsequently verified its findings as detailed in the methodology section. Each analysis essentially took a snapshot of the prevalence and frequency of tracking technologies embedded in each product on a given date in that window. That prevalence and frequency may fluctuate over time based on multiple factors, meaning that an analysis conducted on later dates might observe variations in the behavior of the products.

Of the 163 EdTech products reviewed, 145 (89 percent) appeared to engage in data practices that put children’s rights at risk, contributed to undermining them, or actively infringed on these rights. These products monitored or had the capacity to monitor children, in most cases secretly and without the consent of children or their parents, in many cases harvesting data on who they are, where they are, what they do in the classroom, who their family and friends are, and what kind of device their families could afford for them to use.

Most online learning platforms installed tracking technologies that trailed children outside of their virtual classrooms and across the internet, over time. Some invisibly tagged and fingerprinted children in ways that were impossible to avoid or get rid of without throwing the device away, even if children, their parents, and teachers had been aware of this tracking and had the desire and digital literacy to resist it.

Most online learning platforms sent or granted access to children’s data to third-party companies, usually advertising technology (AdTech) companies. In doing so, they appear to have given the sophisticated algorithms of AdTech companies the opportunity to stitch together and analyze these data to guess at a child’s personal characteristics and interests, and to predict what a child might do next and how they might be influenced. Access to these insights could then be sold to anyone (advertisers, data brokers, and others) who sought to target a defined group of people with similar characteristics online.

Children are surveilled at dizzying scale in their online classrooms. Human Rights Watch observed 145 EdTech products directly sending or granting access to children’s personal data to 196 third-party companies, overwhelmingly AdTech. Put another way, the number of AdTech companies receiving children’s data was far greater than the number of EdTech companies sending this data to them.

Some EdTech products targeted children with behavioral advertising. By using children’s data—extracted from educational settings—to target them with personalized content and advertisements that follow them across the internet, these companies not only distorted children’s online experiences, but also risked influencing their opinions and beliefs at a time in their lives when they are at high risk of manipulative interference. Many more EdTech products sent children’s data to AdTech companies that specialize in behavioral advertising or whose algorithms determine what children see online.

It is not possible for Human Rights Watch to reach definitive conclusions as to the companies’ motivations in engaging in these actions, beyond reporting on what we observed in the data and the companies’ and governments’ own statements. In response to requests for comment, several EdTech companies denied collecting children’s data. Some companies denied that their products were intended for children’s use, or stressed that their virtual classroom pages for children had adequate privacy protections, even where Human Rights Watch’s analysis found that pages adjacent to those virtual classroom pages (such as the login page, home page, or an adjacent page with children’s content) did not. AdTech companies denied knowing that the data was being sent to them, and indicated that in any case it was their clients’ responsibility not to send them children’s data.

Governments bear the ultimate responsibility for failing to protect children’s right to education. With the exception of a single government—Morocco—all governments reviewed in this report endorsed at least one EdTech product that risked or undermined children’s rights. Most EdTech products were offered to governments at no direct financial cost to them; in the process of endorsing and ensuring their wide adoption during Covid-19 school closures, governments offloaded the true costs of providing online education onto children, who were unknowingly forced to pay for their learning with their rights to privacy, access to information, and potentially freedom of thought.

Many governments put at risk or violated children’s rights directly. Of the 42 governments that provided online education to children by building and offering their own EdTech products for use during the pandemic, 39 governments produced products that handled children’s personal data in ways that risked or infringed on their rights. Some of these governments made it compulsory for students and teachers to use their EdTech product, not only subjecting them to the risks of misuse or exploitation of their data, but also making it impossible for children to protect themselves by opting for alternatives to access their education.

Children, parents, and teachers were denied the knowledge or opportunity to challenge these data surveillance practices. Most EdTech companies did not disclose that they were surveilling children through their data; similarly, most governments did not provide notice to students, parents, and teachers when announcing their EdTech endorsements.

In all cases, this data surveillance took place in virtual classrooms and educational settings where children could not reasonably object to such surveillance. Most EdTech companies did not allow students to decline to be tracked; most of this monitoring happened secretly, without the child’s knowledge or consent. In most instances, it was impossible for children to opt out of such surveillance and data collection without opting out of compulsory education and giving up on formal learning altogether during the pandemic.

Remedy is urgently needed for children whose data were collected during the pandemic and remain at risk of misuse and exploitation. Governments should conduct data privacy audits of the EdTech endorsed for children’s learning during the pandemic, remove those that fail these audits, and immediately notify and guide affected schools, teachers, parents, and children to prevent further collection and misuse of children’s data.

In line with child data protection principles and corporations’ human rights responsibilities as outlined in the United Nations Guiding Principles on Business and Human Rights, EdTech and AdTech companies should not collect and process children’s data for advertising. Companies should inventory and identify all children’s data ingested during the pandemic, and ensure that they do not process, share, or use children’s data for purposes unrelated to the provision of children’s education. AdTech companies should immediately delete any children’s data they received; EdTech companies should work with governments to define clear retention and deletion rules for children’s data collected during the pandemic.

As more children spend increasing amounts of their childhood online, their reliance on the connected world and digital services that enable their education will continue long after the end of the pandemic. Governments should develop, refine, and enforce modern child data protection laws and standards, and ensure that children who want to learn are not compelled to give up their other rights in order to do so.

Children should be actively consulted throughout these processes, helping to build safeguards that protect meaningful, safe access to online learning environments that provide the space for children to develop their personalities and their mental and physical abilities to their fullest potential.

Recommendations

To Governments

- Facilitate urgent remedy for children whose data were collected during the pandemic and remain at risk of misuse and exploitation. To do so:

  - Conduct data privacy audits of the EdTech endorsed for children’s learning during the pandemic, remove those that fail these audits, and immediately notify and guide affected schools, teachers, parents, and children to prevent further collection and misuse of children’s data.

  - Require EdTech companies with failed data privacy audits to identify and immediately delete any children’s data collected during the pandemic.

  - Require AdTech companies to identify and immediately delete any children’s data they received from EdTech companies during the pandemic.

- Prevent the further collection and processing of children’s data by technology companies for the purposes of profiling, behavioral advertising, and other uses unrelated to the purpose of providing education.

- Adopt child-specific data protection laws that address the significant child rights impacts of the collection, processing, and use of children’s personal data. Where child data protection laws already exist, update and strengthen implementation measures to deliver a modern child data protection framework that protects the best interests of the child in complex online environments.

- Enact and enforce laws ensuring that companies respect children’s rights and are held accountable if they fail to do so. In line with the United Nations Guiding Principles on Business and Human Rights, such laws should require companies to:

  - Conduct and publish child rights due diligence processes.

  - Provide full transparency in data supply chains, and publicly report on how children’s data are collected and processed, where they are sent, to whom, and for what purpose.

  - Provide child-friendly, age-appropriate processes for remedy and redress for children who have experienced infringements on their rights; such mechanisms should be transparent, independently accountable, and enforceable.

- Require child rights impact assessments in any public procurement processes that provide essential services to children through technology.

- Ban behavioral advertising to children. Commercial interests and behavioral advertising should not be considered legitimate grounds for data processing that override a child’s best interests or their fundamental rights.

- Ban the profiling of children. In exceptional circumstances, governments may lift this restriction when it is in the best interests of the child, and only if appropriate safeguards are provided for by law.

To Ministries and Departments of Education

- Where online learning is adopted as a preferred or hybrid mechanism for delivering education, allocate funding to pay for services that safely enable online education, rather than allowing the sale and trading of children’s data to finance the services.

- Ensure that any services that are endorsed or procured to deliver online education are safe for children. In coordination with data protection authorities and other relevant institutions:

  - Require all companies providing educational services to children to identify, prevent, and mitigate negative impacts on children’s rights, including across their business relationships and global operations.

  - Require child data protection impact assessments of any educational technology provider seeking public investment, procurement, or endorsement.

  - Ensure that public and private educational institutions enter into written contracts with EdTech providers that include protections for children’s data. Children should not be expected to enter into a contract, and children and guardians cannot give valid consent when it cannot be freely refused without jeopardizing a child’s right to education.

  - Define and provide special protections for categories of sensitive personal data that should never be collected from children in educational settings, such as precise geolocation data.

- Provide child-friendly, age-appropriate, and confidential reporting mechanisms, access to expert help, and provisions for collective action in local languages for children seeking justice and remedy. Such measures should avoid placing undue burden or exclusive responsibility on children or their caregivers to seek remedy from companies by acting individually or exposing themselves in the process.

- Develop and promote digital literacy and children’s data privacy in curricula. Provide training programs for ministry staff, teachers, and other school staff in digital literacy skills and protection of children’s data privacy, to help teachers conduct online learning for children safely.

- Seek out children’s views in developing policies that protect the best interests of the child in online educational settings, and meaningfully engage children in enhancing the positive benefits that access to the internet and educational technologies can provide for their education, skills, and opportunities.

To Education Technology Companies

- Provide urgent remedy and redress where children’s rights have been put at risk or infringed through companies’ data practices during the pandemic. To do so:

  - Immediately stop collecting and processing children’s data for user profiling, behavioral advertising, or any purpose other than what is strictly necessary and relevant for the provision of education.

  - Stop sharing children’s data for purposes that are unnecessary and disproportionate to the provision of their education. In instances where children’s data are disclosed to a third party for a legitimate purpose, in line with child rights principles and data protection laws, enter into explicit contracts with third-party data processors, and apply strict limits to their processing, use, and retention of the data they receive.

  - Apply child flags to any data shared with third parties, to ensure that adequate notice is provided to all companies in the technology stack that they are receiving children’s personal data, and thus obliged to apply enhanced protections in their processing of this data.

  - Inventory and identify children’s personal data ingested during the pandemic, and take measures to ensure that these data are no longer processed, shared, retained, or used for commercial or other purposes that are not strictly related to the provision of children’s education.

- Companies with EdTech products designed for use by children should stop collecting specific categories of children’s data that heighten risks to children’s rights, including their precise location data and advertising identifiers.

- Undertake child rights due diligence to identify, prevent, and mitigate companies’ negative impact on children’s rights, including across their business relationships and global operations, and publish the outcomes of this due diligence process.

- Respect and promote children’s rights in the development, operation, distribution, and marketing of EdTech products and services. Ensure that children’s data are collected, processed, used, protected, and deleted in line with child data protection principles and applicable laws.

- Provide privacy policies that are written in clear, child-friendly, and age-appropriate language. These should be separate from legal and contractual terms for guardians and educators.

- Provide children and their caregivers with child-friendly mechanisms to report and seek remedy for rights abuses when they occur. Remedies should involve prompt, consistent, transparent, and impartial investigation of alleged abuses, and should effectively end ongoing infringements on rights.
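The child flag recommended above can be illustrated in a few lines. This is a minimal sketch, assuming a hypothetical analytics event represented as a Python dictionary: before a record leaves the EdTech product, the data categories this report singles out as high-risk (precise location and advertising identifiers) are dropped for child users, and a `child_flag` field tells every downstream recipient that it is handling a child’s data. The function name and all field names are hypothetical. Industry specifications already carry comparable signals, such as the `coppa` field of the `regs` object in the OpenRTB bid request specification.

```python
from typing import Any

# Data categories the report identifies as heightening risks to children.
# The field names themselves are hypothetical.
HIGH_RISK_FIELDS = ("advertising_id", "precise_location")


def prepare_third_party_payload(event: dict[str, Any], user_is_child: bool) -> dict[str, Any]:
    """Return a copy of `event` suitable for disclosure to a third party.

    Hypothetical schema: `child_flag` and the other keys are illustrative,
    not any vendor's real API.
    """
    payload = dict(event)  # shallow copy; the caller's record is left unchanged
    if user_is_child:
        for key in HIGH_RISK_FIELDS:
            payload.pop(key, None)  # never disclose these for a child user
    # Every recipient in the technology stack learns whether this is a child's data.
    payload["child_flag"] = user_is_child
    return payload


# Usage with a made-up event.
event = {"event": "lesson_opened", "lesson": "math-4",
         "advertising_id": "ad-123", "precise_location": (41.01, 28.97)}
print(prepare_third_party_payload(event, user_is_child=True))
# {'event': 'lesson_opened', 'lesson': 'math-4', 'child_flag': True}
```

The flag only protects children if recipients act on it, which is why the recommendations to AdTech companies also ask them to detect and delete flagged data.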

To Advertising Technology Companies and other Third-Party Companies that May Receive Data from EdTech Products

- Inventory and identify all children’s data received through the tracking technologies that these companies own, and take measures to promptly delete these data and ensure that they are not processed, shared, or used. To do so:

  - Identify all apps and websites that have installed the companies’ tracking technologies and transmitted user data to them.

  - Of these, classify and create a list of services primarily directed at children, which should be monitored and updated periodically. Notify the parent companies of these services that, to have their product removed from this list, they must provide explicit evidence that their service is not made for children.

  - Using this list, review and promptly delete any children’s data received from services made for children.

- Prevent the use of technology companies’ tracking technologies to surveil children or any user of services designed for use by children.

- Regularly audit incoming data and the companies sending them. When detected, delete or otherwise disable the use of any children’s data received, as well as any user data received from services designed for use by children.

- Notify and require companies and clients that use AdTech tracking technologies to declare any children’s data collected through these tools, with a child flag or through other means, so that such data can be automatically identified and deleted before transmission to third-party companies.

- Develop and implement effective processes to detect and prevent the commercial use of children’s data collected by technology companies’ tracking technologies.

- Undertake child rights due diligence to identify, prevent, and mitigate technology companies’ impact on children’s rights, including across their business relationships and across global operations, and publish the outcomes of this due diligence process.

- Provide children and their caregivers with child-friendly mechanisms to report and seek remedy for infringements on rights when they occur. Remedies should involve prompt, consistent, transparent, and impartial investigation of alleged infringements, and should end ongoing violations.
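The audit steps recommended to AdTech and other third-party companies can be sketched as a simple filter on incoming records. This is a minimal illustration, assuming each received record carries the identifier of the app or website that sent it and a sender-declared child flag; the `IncomingRecord` type, the `audit_incoming` function, and the example entries are hypothetical. A record is marked for deletion if it is flagged as a child’s data or if its source appears on the periodically updated list of child-directed services.

```python
from dataclasses import dataclass, field


@dataclass
class IncomingRecord:
    source: str        # app package name or website domain that sent the data
    child_flag: bool   # child flag declared by the sender (hypothetical field)
    payload: dict = field(default_factory=dict)


def audit_incoming(records: list[IncomingRecord],
                   child_directed: set[str]) -> tuple[list[IncomingRecord], list[IncomingRecord]]:
    """Split received records into (retain, delete).

    A record is deleted if the sender flagged it as a child's data, or if it
    came from a service on the list of child-directed services.
    """
    retain, delete = [], []
    for record in records:
        if record.child_flag or record.source in child_directed:
            delete.append(record)
        else:
            retain.append(record)
    return retain, delete


# Usage with made-up sources.
child_directed = {"com.example.mathclass"}  # hypothetical list entry
records = [
    IncomingRecord("com.example.mathclass", child_flag=False),  # unflagged, but child-directed
    IncomingRecord("news.example.com", child_flag=True),        # flagged by the sender
    IncomingRecord("news.example.com", child_flag=False),
]
retain, delete = audit_incoming(records, child_directed)
print(len(retain), len(delete))  # 1 2
```

Checking the source against the list, and not only the flag, reflects the recommendation that recipients not rely solely on what senders choose to declare.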

Methodology

This report covers 49 countries that recommended 163 educational technology (EdTech) products for children to use for online learning during Covid-19 school closures.

Human Rights Watch conducted technical analysis on each product to assess how it handled children’s data, then compared the results to the product’s privacy policy to determine whether the EdTech company disclosed its data practices to children and their caregivers. Human Rights Watch also examined the advertising technology (AdTech) companies and data brokers found to receive children’s data, and analyzed the marketing materials and developer documentation of those found to be receiving significant amounts of children’s data.

The methods used in this report were free and available for use by governments prior to endorsing or procuring any of the EdTech products analyzed here. Although Blacklight, a tool used in this report to analyze websites, was published in September 2020, the tests it runs to identify privacy-infringing technologies were individually available, and free to use, in the form of various privacy census tools built over the past decade. As of November 2021, no government reviewed in this report was found to have undertaken a technical privacy evaluation of the EdTech products it recommended after the declaration of the pandemic in March 2020.

Human Rights Watch invites experts, journalists, policymakers, and readers to recreate, test, and engage with our findings and research methods. Our datasets, preserved evidence, and a detailed technical methodology can be found online.

Selection Criteria

Human Rights Watch examined the Covid-19 education emergency response plans, documents, and announcements of 68 of the world’s most populous countries. Of these, 49 countries adopted online learning as a component of their national plans for continued learning throughout school closures. The EdTech products endorsed or procured by these ministries or departments of education were included for analysis in this report.

In countries where the education ministry recommended a large number of EdTech products—in some cases, numbering in the hundreds—a Mersenne Twister pseudorandom number generator was used to randomly select a maximum of ten products that would serve as an illustrative sample of that education ministry’s decisions.[2]
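Random selection of this kind is easy to reproduce. The report does not state which implementation or seed was used, so the following is only a sketch under assumptions: Python’s standard `random` module is built on the Mersenne Twister (MT19937) generator, and `Random.sample` draws items uniformly without replacement. The function name, the seed value, and the rule that lists of ten or fewer products are kept whole are hypothetical.

```python
import random
from typing import Optional


def sample_products(products: list[str], k: int = 10,
                    seed: Optional[int] = None) -> list[str]:
    """Select at most k products uniformly at random, without replacement."""
    if len(products) <= k:
        return list(products)  # assumption: short lists are analyzed in full
    rng = random.Random(seed)  # dedicated Mersenne Twister (MT19937) instance
    return rng.sample(products, k)


# Usage with a made-up list of endorsed products.
endorsed = [f"product-{i:03d}" for i in range(240)]
picked = sample_products(endorsed, seed=20210219)
assert picked == sample_products(endorsed, seed=20210219)  # same seed, same sample
print(picked)
```

Recording the seed alongside the product list would let others regenerate exactly the same sample.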

Seven countries (Australia, Brazil, Canada, Germany, India, Spain, and the United States) delegate significant decision-making authority to state- or regional-level education authorities. During the pandemic, this authority included decisions about which EdTech products to endorse or procure for school use. Human Rights Watch identified the two most populous states or provinces in each of these countries and included their EdTech endorsements for analysis. Similarly, for the United Kingdom, the two most populous constituent countries, England and Scotland, were identified for analysis.

As a result, 163 products were analyzed from the following 49 countries: Argentina, Australia (New South Wales, Victoria), Brazil (Minas Gerais, São Paulo), Burkina Faso, Cameroon, Canada (Quebec)[3], Chile, China, Colombia, Côte d'Ivoire, Ecuador, Egypt, France, Germany (Baden-Württemberg, Bavaria), Ghana, Guatemala, India (Maharashtra, national, Uttar Pradesh), Indonesia, Iran, Iraq, Italy, Japan, Kazakhstan, Kenya, Malawi, Malaysia, Mexico, Morocco, Nepal, Nigeria, Pakistan, Peru, Poland, Republic of Korea, Romania, Russian Federation, Saudi Arabia, South Africa, Spain (Andalucía, Catalonia), Sri Lanka, Taiwan, Thailand, Turkey, United Kingdom (England, Scotland), United States (California, Texas), Uzbekistan, Venezuela, Vietnam, and Zambia.

Product Types

Of the 163 EdTech products investigated by Human Rights Watch, 39 were mobile applications (“apps”), 90 were websites, and 34 were available in both formats. Of the products available in both app and website formats, Human Rights Watch analyzed both versions, except for four products whose app versions were no longer available online or were offered only on iOS, Apple’s operating system.

Apps running on Google’s Android operating system are the focus of this report. Android is the dominant mobile operating system worldwide, in large part due to the ubiquity of lower-cost mobile phones that run Android.[4] Children living in the countries covered by this report are more likely to have access to an Android device, if they have access to a device at all. This was reflected in the choices that governments made: almost all EdTech products endorsed by the governments covered in this report offer their apps for the Android platform.

In addition, Android’s open architecture makes it possible to easily access and observe the interactions between an app and the operating system, as well as to identify the data transmissions from the device running the app to online servers.

While this report focuses on apps built for Android, apps built for Apple’s iOS can also employ data tracking technologies and target behavioral advertising to users.[5]

Access and Archival

To investigate how EdTech products handled children’s data and their rights, Human Rights Watch downloaded a copy of the latest version of the product and its privacy policy between February 19 and March 15, 2021. Human Rights Watch conducted the primary phase of its investigation between March and August 2021, and conducted further checks in November 2021 to verify findings.

To preserve documentation and to invite readers to recreate, test, and engage with our findings, each product’s privacy policy and its EdTech website or app were archived, whenever available, on the Internet Archive’s Wayback Machine. The versions of the EdTech apps examined by Human Rights Watch are listed in the appendices.
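Readers who want to check or add archived copies can use two public Wayback Machine endpoints: Save Page Now (`https://web.archive.org/save/` followed by the target URL) requests a new capture, and the Availability API (`https://archive.org/wayback/available?url=`) returns JSON describing the closest existing snapshot. The helper names below are hypothetical, and the sketch deliberately stays offline: it only builds request URLs and parses a made-up response. Fetching the URLs with an HTTP client such as `urllib.request.urlopen` is left to the reader and is subject to the service’s rate limits.

```python
import json
from typing import Optional
from urllib.parse import quote

SAVE_PAGE_NOW = "https://web.archive.org/save/"
AVAILABILITY_API = "https://archive.org/wayback/available?url="


def save_request_url(target: str) -> str:
    """URL asking the Wayback Machine's Save Page Now service to capture `target`."""
    return SAVE_PAGE_NOW + target


def availability_request_url(target: str) -> str:
    """URL asking the Availability API for the closest archived copy of `target`."""
    return AVAILABILITY_API + quote(target, safe="")


def closest_snapshot(response_body: str) -> Optional[str]:
    """Extract the closest snapshot URL from an Availability API JSON response."""
    data = json.loads(response_body)
    closest = data.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest["url"]
    return None


# Usage with a made-up response body (no network access needed).
sample = ('{"archived_snapshots": {"closest": {"available": true, "status": "200", '
          '"timestamp": "20210301000000", '
          '"url": "http://web.archive.org/web/20210301000000/https://example.org/privacy"}}}')
print(closest_snapshot(sample))
```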

EdTech products were sorted into the following categories:

1. Products that do not require a user account to access learning content;

2. Products that offer the choice to sign up for an optional user account;

3. Products that require a user account to access learning content; and

4. Products that require verification of the child’s identity as a student, either by their school or their ministry of education, to set up a mandatory account to access the service.

To avoid misleading EdTech companies as to our affiliation and the nature of our research, no user accounts were created for products identified in categories 1, 2, and 4.

Human Rights Watch created user accounts for a limited number of EdTech products in category 3. As it is possible to disassemble and analyze apps’ code without having to sign into a user account, accounts were created only for 27 websites in this category to test for privacy violations in the same environment used by children to attend classes. In these instances, Human Rights Watch explicitly identified the nature of our engagement, populating mandatory input fields with the following values to signal our affiliation and intent. Optional fields were left blank.

Email: iamaresearcher@hrw.org

User name: hrwresearcher

Organization / School name: Human Rights Watch

First Name: HRW

Last Name: Researcher

Phone number: [a real number]

Throughout its investigation, Human Rights Watch did not interact with other users or enter into virtual classrooms.

Human Rights Watch did not create user accounts for products in category 4, as that would have entailed falsely assuming the identity of a real student. For these websites, technical analysis was restricted to webpages that children likely had to interact with in order to access their virtual classroom, prior to logging in, such as the product’s home page or login page.
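Taken together, the account rules above form a small decision procedure. The sketch below encodes it as we read it from this section; the enum, the `plan_analysis` function, and the scope descriptions are illustrative names, not part of Human Rights Watch’s published tooling. It treats every category 3 website alike and does not model how the 27 websites that received accounts were chosen.

```python
from enum import IntEnum
from typing import NamedTuple


class AccessCategory(IntEnum):
    NO_ACCOUNT = 1        # learning content available without an account
    OPTIONAL_ACCOUNT = 2  # user may choose to sign up
    REQUIRED_ACCOUNT = 3  # account required to access learning content
    VERIFIED_STUDENT = 4  # school or ministry must verify the child as a student


class Plan(NamedTuple):
    create_account: bool
    scope: str


def plan_analysis(category: AccessCategory, is_app: bool) -> Plan:
    """Decide whether to create a researcher account and what to analyze."""
    if is_app:
        # App code can be disassembled and analyzed without signing in.
        return Plan(False, "app code and traffic, no account")
    if category is AccessCategory.REQUIRED_ACCOUNT:
        # Mandatory fields are filled with values that openly identify the researcher.
        return Plan(True, "pages used to attend classes, via a labeled researcher account")
    if category is AccessCategory.VERIFIED_STUDENT:
        # An account here would mean impersonating a real student.
        return Plan(False, "pre-login pages only, such as the home and login pages")
    return Plan(False, "pages reachable without an account")


print(plan_analysis(AccessCategory.VERIFIED_STUDENT, is_app=False))
```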

Some of the companies that offered EdTech products in category 4 told Human Rights Watch that the virtual classrooms and related spaces accessible to children after login were adequately protective of privacy. These companies asserted that the pages before their product’s login (e.g., the login page, home page, or adjacent page designed for children) were designed for use by teachers, parents, and other adults, and could not properly be described as designed for children’s use.

Technical Analysis: Apps

There are two methods of analyzing a mobile app. The first is static analysis, which disassembles an app’s code to identify its capabilities: the functions and instructions that may be executed when the app is run. The second is dynamic analysis, which runs the app under realistic conditions and observes what data is transmitted where, and to whom.

Human Rights Watch conducted manual static analysis tests on 73 apps, using Android Studio to decompile each app and analyze its code. All results were verified by scanning each app with two open source tools and corroborating their output against Human Rights Watch’s analyses: Pithus, a mobile threat intelligence platform that conducts automated static analysis tests on mobile apps; and εxodus, a privacy auditing platform built by εxodus Privacy that scans for trackers embedded in Android apps.[6]
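Static tracker detection of the kind εxodus performs works by matching known code signatures of tracking SDKs against the class names found in an app. The sketch below imitates that idea on a list of dotted class names, assumed to have been extracted from decompiled code. The three signatures are illustrative package prefixes of well-known SDKs, not εxodus’s actual rule set, and `find_trackers` is a hypothetical name.

```python
import re

# Illustrative signatures: package prefixes of widely used SDKs.
# These are NOT εxodus's actual rules, which εxodus Privacy maintains.
TRACKER_SIGNATURES = {
    "Google Firebase Analytics": re.compile(r"^com\.google\.firebase\.analytics\."),
    "Google AdMob": re.compile(r"^com\.google\.android\.gms\.ads\."),
    "Facebook App Events": re.compile(r"^com\.facebook\.appevents\."),
}


def find_trackers(class_names: list[str]) -> set[str]:
    """Return trackers whose code signature matches at least one class name.

    A match shows that tracking code is embedded in the app (a capability);
    it does not show that the code runs or sends data.
    """
    return {
        tracker
        for tracker, pattern in TRACKER_SIGNATURES.items()
        if any(pattern.search(name) for name in class_names)
    }


# Usage with made-up class names from a hypothetical school app.
classes = [
    "com.example.school.MainActivity",
    "com.google.firebase.analytics.FirebaseAnalytics",
    "com.google.android.gms.ads.AdView",
]
print(sorted(find_trackers(classes)))  # ['Google AdMob', 'Google Firebase Analytics']
```

Because a signature match establishes only what an app can do, static findings are paired with dynamic analysis, which observes what the app actually transmits.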

Additionally, Human Rights Watch commissioned Esther Onfroy, founder of Defensive Lab Agency and creator of both Pithus and εxodus Privacy, to conduct in-depth static and dynamic analysis on eight apps, which served as a final check on the accuracy of our results.

Dynamic Analysis and Children’s Participation

Human Rights Watch collaborated with four children from India, Indonesia, South Africa, and Turkey who participated in an in-depth investigation to uncover how an EdTech app recommended by their government handled their privacy.

These children and their guardians were informed of the nature and purpose of our research, that they would receive no personal service or benefit for speaking to us, and our intention to publish a report with the information gathered. Human Rights Watch requested and received consent from the children and their guardians, and informed each that they were under no obligation to speak with us or to participate in the project.

Human Rights Watch asked each child to download a virtual private network (VPN) app and the EdTech app onto their mobile device. They were then asked to open, run, and close the VPN and the EdTech app several times within a single day, interacting with the EdTech app as if they were using it for school or for learning. After 24 hours, the children deleted both apps from their phones.

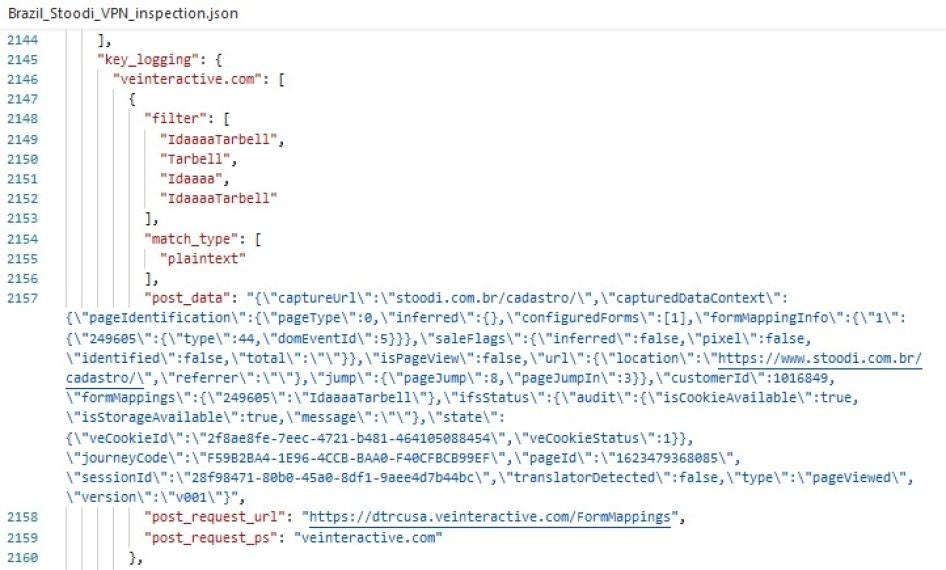

Esther Onfroy of the Defensive Lab Agency received the data files and analyzed them to identify data flows and transmissions. These findings were corroborated against dynamic analysis conducted on each app, using a VPN to simulate app usage in the child’s country. This design maximally protected children’s privacy by encrypting the child’s data and ensuring that only the data flows could be analyzed, without revealing the substance of children’s personal data.
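Flow metadata alone is enough to see which companies an app contacts. As an illustration only, assume each captured flow has been reduced to a destination hostname and a byte count; the sketch counts connections to known tracker domains without ever reading the data inside the flows. The tracker list is a small illustrative sample rather than an authoritative list, and the flow format and function names are assumptions, not the Defensive Lab Agency’s tooling.

```python
from collections import Counter

# Illustrative tracker domains (not an authoritative list).
TRACKER_DOMAINS = ("app-measurement.com", "doubleclick.net", "graph.facebook.com")


def belongs_to(host: str, domain: str) -> bool:
    """True if `host` is `domain` itself or one of its subdomains."""
    host = host.lower().rstrip(".")
    return host == domain or host.endswith("." + domain)


def tracker_contacts(flows: list[tuple[str, int]]) -> Counter:
    """Count connections per tracker domain using only flow metadata.

    Each flow is (destination_host, bytes_sent); payload contents are never read.
    """
    counts: Counter = Counter()
    for host, _bytes_sent in flows:
        for domain in TRACKER_DOMAINS:
            if belongs_to(host, domain):
                counts[domain] += 1
    return counts


# Usage with made-up flows from a single session.
flows = [
    ("app-measurement.com", 512),
    ("api.example-school.org", 2048),
    ("googleads.g.doubleclick.net", 300),
    ("app-measurement.com", 128),
]
print(tracker_contacts(flows))
```

Matching on label boundaries (`"." + domain`) avoids false positives such as a hostname that merely ends in the same letters.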

All children’s data were securely stored, then deleted, at the end of the investigation. The data files for one child’s experiment were provided to the child, at their request.

Technical Analysis: Websites

To understand how websites handle children’s data, Human Rights Watch used Blacklight, a real-time website privacy inspector built by Surya Mattu, senior data engineer and investigative data journalist at The Markup.[7]

Released in September 2020, Blacklight emulates how a user might be surveilled while browsing the web.[8] The tool scans any website, runs tests for seven known types of surveillance, and returns an instant privacy analysis of the inspected site. Building on robust privacy census tools developed over the past decade, Blacklight monitors scripts and network requests to observe when and how user data is being collected, and records when this data is sent to known third-party AdTech companies.[9]
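A core step in this kind of inspection is deciding which of a page’s network requests go to third parties. The Python sketch below shows that step under a deliberate simplification: it treats the last two labels of a hostname as the site, whereas robust tools consult the Public Suffix List (the two-label heuristic mishandles suffixes such as `.co.uk`). It does not reproduce Blacklight’s implementation, and the page and request URLs are illustrative.

```python
from typing import Iterable, List
from urllib.parse import urlsplit


def naive_site(host: str) -> str:
    """Approximate a host's site by its last two labels.

    Simplification: robust tools use the Public Suffix List, because this
    heuristic wrongly merges unrelated sites under suffixes such as .co.uk.
    """
    labels = host.lower().rstrip(".").split(".")
    return ".".join(labels[-2:])


def third_party_hosts(page_url: str, request_urls: Iterable[str]) -> List[str]:
    """Return the sorted hosts of requests that leave the page's own site."""
    first_party = naive_site(urlsplit(page_url).hostname or "")
    hosts = set()
    for url in request_urls:
        host = urlsplit(url).hostname
        if host and naive_site(host) != first_party:
            hosts.add(host)
    return sorted(hosts)


# Illustrative page and the requests it triggered.
page = "https://learn.example.edu/lesson/1"
requests_seen = [
    "https://static.example.edu/app.js",
    "https://www.googleadservices.com/pagead/conversion.js",
    "https://connect.facebook.net/en_US/fbevents.js",
]
print(third_party_hosts(page, requests_seen))
```

Identifying a third-party request is only the first step; deciding whether the recipient is an AdTech company requires a separate list of known tracker domains.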

Blacklight exists in two formats: as a user-friendly interface on The Markup’s website, and as an open source command-line tool.[10] Human Rights Watch chose to work with the latter, as it can be adapted to produce customized analysis and offers greater observational power, yielding fine-grained evidence of the surveillance it detects on websites. Surya Mattu of The Markup generously assisted Human Rights Watch in customizing Blacklight for this investigation.

In order to recreate the experience of a child using an EdTech website in their country, and how their data might be collected, handled, and sent to third parties, Human Rights Watch conducted all technical tests while running a VPN set to the country where the government had endorsed the product for children’s education. This proved essential: early tests conducted by Human Rights Watch found that the prevalence of surveillance technologies embedded in a website changed depending on the country in which the website believed its user was located. Many of the observed differences appeared to be related to that country’s data protection laws, where they exist.
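Comparing scans of the same site run through VPN exits in different countries reduces to set arithmetic over the trackers observed in each run. The sketch below uses hypothetical tracker hostnames and country codes; for each country it lists the trackers that appeared there and in no other tested country.

```python
from typing import Dict, Set


def country_specific_trackers(observed: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """For each country, return trackers seen there but in no other tested country."""
    result: Dict[str, Set[str]] = {}
    for country, trackers in observed.items():
        # Union of everything observed in the other countries.
        elsewhere = set().union(*(t for c, t in observed.items() if c != country))
        result[country] = trackers - elsewhere
    return result


# Hypothetical results from scanning one site through two VPN exit countries.
observed = {
    "TR": {"tracker-a.example", "tracker-b.example", "tracker-c.example"},
    "DE": {"tracker-a.example"},
}
print(country_specific_trackers(observed))
```

A difference found this way shows only that the site behaved differently; attributing it to a particular data protection law requires further investigation.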

Human Rights Watch examined websites that governments explicitly recommended for children’s online education. In response to Human Rights Watch’s findings, some companies noted that their government-recommended products were designed for use by teachers, parents, and other adults, and not by children. Even if those claims are accepted as fact, two questions remain: why governments recommended, for use by children, pages that had not been adequately vetted to protect their privacy, and whether the companies should have changed their privacy practices on those pages once the government made its recommendation.

Technical Limitations

Analyzing apps using static analysis may yield false positives, because not all of an app’s code is necessarily executed when a user runs it. Put another way, an app may not use all of the programmed functionalities of which it is capable. Throughout the report, Human Rights Watch notes this limitation by distinguishing between analysis of the code’s capabilities (static analysis) and detections of actual transmission of children’s data (dynamic analysis).
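The distinction between capability (static analysis) and observed transmission (dynamic analysis) can be expressed as simple set operations. In this sketch the data-type labels are hypothetical. A capability found only in code is reported as a capability, not as evidence of collection. The third bucket covers data seen in traffic that the static scan missed, which might happen, for example, with code loaded at runtime.

```python
from typing import Dict, Set


def classify_findings(in_code: Set[str], in_traffic: Set[str]) -> Dict[str, Set[str]]:
    """Separate capabilities found in code from transmissions actually observed."""
    return {
        # Found in code and seen leaving the device: an observed transmission.
        "observed": in_code & in_traffic,
        # Found in code only: a capability, not evidence that data was collected.
        "capability_only": in_code - in_traffic,
        # Seen in traffic but not found statically (e.g., code loaded at runtime).
        "traffic_only": in_traffic - in_code,
    }


# Hypothetical findings for one app.
static_findings = {"AAID", "location", "contacts"}
dynamic_findings = {"AAID"}
result = classify_findings(static_findings, dynamic_findings)
print(result)
```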

A technical analysis does not definitively determine the intent of any particular tracking technology, or how the collected data is used. For example, an EdTech product can include third-party computer code that collects information useful for monitoring the product’s performance and stability. The same data collected by the same third-party code may also be used in tandem with other third-party code to enable data collection for advertising or other marketing purposes. In a static analysis, it is not possible to conclude whether user data were collected, or the scope or purpose of the data collection. Neither is it possible, with a technical analysis alone, to determine how the third party uses the collected data.

As another example, third-party computer code embedded in a product to perform an administrative function can also be designed to enable access to a device’s camera, microphone, or another feature. In a static analysis, it is possible to detect the capability, but not whether the capability is utilized. In addition, the EdTech company implementing such third-party code for an administrative function may not have plans to enable those features, and may not be aware of the possibility. Note also that access by any code to an Android device’s camera or microphone is possible only if the user settings on the device enable such sharing.

Where possible, Human Rights Watch worked to reduce ambiguity by examining the parent companies that own the tracking technologies found in an EdTech product, as well as the companies found to receive transmissions of children’s data. Human Rights Watch conducted further analysis on companies that receive, analyze, trade, or sell people’s personal data for commercial and other purposes, and reviewed their publicly available marketing materials and developer documentation.

Blacklight’s analysis is limited by three other factors: because it simulates user behavior rather than recording actual user behavior, it may trigger different surveillance responses from the website under examination; its scan for canvas fingerprinting may produce false positives; and its stack tracing technique may produce false negatives. Further investigation by The Markup determined that the probability of these errors occurring is very low, and that Human Rights Watch’s methodology design may have further reduced this risk.[11] A detailed discussion of these technical limitations can be found in Blacklight’s methodology, available online.[12]

For readers seeking to replicate Human Rights Watch’s findings, it is important to note that the observed behavior of these apps and websites, and the detected prevalence and frequency of tracking technologies embedded in them, may fluctuate. This is influenced by multiple factors, including the geographical location of the user, date and time of testing, and the device or browser type, among other variables. In addition, apps and websites that use AdTech services to offer advertisers and other third-party companies the opportunity to target their students with ads through an electronic high-frequency trading process known as real-time bidding, further described in Chapter 1, may yield different results as to the recipient of the children’s data, as different third parties may have won the bid each time.

Human Rights Watch conducted manual analysis on four websites on which Blacklight tests failed for a variety of technical reasons: Distance Learning (Cameroon), Eduyun (China), Smart Revision (Zambia), and e-learning portal (Zambia). One site was incompatible with the browser used by Blacklight, and another refused to load upon detecting the VPN service used by Human Rights Watch. The manual analysis conducted on these four sites followed the same methodology used by the Blacklight tool.

Interviews with Children, Parents, and Teachers

Human Rights Watch interviewed students, parents, and teachers between April 2020 and April 2021 about their experiences with online learning. Interviewees were based in the following 17 countries: Australia, Chile, Denmark, Germany, Indonesia, India, Iran, Italy, Lebanon, Republic of Korea, Russia, Serbia, Spain, South Africa, Turkey, United Kingdom, and the United States.

Interviewees lived in capital cities, other cities, Indigenous communities, rural and remote locations, suburbs, towns, and villages.

Interviews were conducted directly, or with interpretation, in Arabic, Bahasa Indonesia, Danish, English, Ewe, Farsi, German, Hindi, Italian, Korean, Russian, Serbian, Spanish, and Turkish.

Interviewees were not paid to participate. Interviewees were informed of the purpose of the interview, its voluntary nature, and the ways in which the information would be used. They provided oral and written consent to be interviewed.

Many parents and teachers requested that their names not be used in this report to protect their privacy or the privacy of their children or students, so that they could speak freely about their school, or for cultural reasons. Children’s identities are protected with pseudonyms of their own choosing. Pseudonyms are reflected in the text with a first name followed by an initial and are noted in the footnotes.

Requests for Comment

Human Rights Watch shared the findings presented in this report with 95 EdTech companies, 199 AdTech companies, and the 49 governments covered in this report, and gave them the opportunity to respond and provide comments and clarifications. Of these, 48 EdTech companies, 78 AdTech companies, and 10 governments responded as of May 24, 2022 at 12:00pm EDT.

I. Covid-19, Education, and Technology

There were no doubts that the online platforms and tools used could be unsafe. It was never questioned.

—A single mother of two school-aged boys, Izhevsk, Udmurt Republic, Russia[13]

Covid-19 and Children’s Education

The novel coronavirus has devastated children’s education around the world.[14] On March 11, 2020, the World Health Organization (WHO) declared that an outbreak of Covid-19 had reached global pandemic levels. Within weeks, almost every country in the world closed down their schools in an attempt to stop the spread of Covid-19, upending the lives and learning of 1.6 billion children and young adults, or 90 percent of the world’s students.[15] By March 2021, a full year into the pandemic, half of the global student population remained shut out of school.[16]

Most countries pivoted to some form of online learning, replacing physical classrooms with phones, tablets, and computers.[17] This deepened existing inequities in children’s access to education, in the form of digital divides between children with access to technologies critical for online learning, and those without. It also created such an overwhelming need for affordable, reliable connectivity and devices that it triggered global shortages of both. Supply chains for computers buckled under staggering demand, as shortages of essential parts created two-year shipment delays worldwide and pitted desperate schools and education ministries against one another.[18] As more people became heavily reliant on the internet to work, communicate, play, and study during Covid-19 lockdowns, the resulting explosion of traffic clogged the internet and placed unprecedented stress on its infrastructure. Nine days after the WHO’s pandemic declaration, the European Commission took the extraordinary step of asking internet companies, video streaming services, and gaming platforms to reduce their services in Europe to reserve bandwidth for work and education.[19]

Teachers and schools faced a bewildering array of digital platforms to choose from as they scrambled to set up virtual classrooms. In response, governments endorsed particular educational technologies (EdTech) for use. Some governments rapidly signed contracts with EdTech companies to purchase millions of licenses for teachers and students.[20]

As a result, EdTech companies experienced explosive, unprecedented demand for their products. In the days and weeks after the WHO’s pandemic declaration, education app downloads worldwide surged 90 percent compared to the weekly average at the end of 2019.[21] Children spent significantly more time online in virtual classrooms; by September 2020, the number of hours spent in education apps globally each week had increased to an estimated 100 million hours, up 90 percent compared to the same period in 2019.[22]

Google Classroom, Google’s teacher-student communication platform, reported that the pandemic had almost quadrupled its users to more than 150 million, up from 40 million in 2019; similarly, G Suite for Education, Google’s classroom software, reported doubling its users to more than 170 million students and educators.[23] “We have seen incredible growth,” Javier Soltero, a vice president at the company, said in an interview with Bloomberg. “It actually mirrors, unfortunately, the ramp up and spread of the disease.”[24]

The explosive demand also generated record revenues and profits. As the global economy plummeted, venture capital financing for EdTech startups surged to a record-setting US$16.1 billion in 2020, more than doubling the $7 billion raised in 2019.[25] Two companies, Byju’s and Yuanfudao, became the first EdTech companies to achieve “decacorn” status (an exclusive group of the world’s most valuable privately held companies, valued at more than $10 billion) after attracting millions of new students and closing successful financing rounds during the pandemic.[26]

Technology companies that provided free services to schools also benefited, gaining significant market share as millions of students became familiar with their products. Zoom Video Communications, which provided free services to more than 125,000 schools in 25 countries, as well as limited free services for the general public, reported that its sales had skyrocketed 326 percent to $2.7 billion and that its profits had jumped from $21.7 million in 2019 to $671.5 million in 2020.[27]

The use of EdTech helped governments to fill urgent gaps and deliver some measure of learning during the pandemic. However, governments’ endorsements and procurements of EdTech also turbocharged the mass collection of children’s data, exposing their personal information to the risk of misuse and exploitation by the advertising-driven internet economy and resulting in the mass surveillance of children’s lives, both inside and outside of the classroom.

How the Internet-Based Economy Works

We don’t monetize the things we create. We monetize users.

—Andy Rubin, creator of Android, the world’s most widely used mobile operating system[28]

Today’s internet is powered by the advertising technology (AdTech) industry. Motivated by the belief that personalized ads are more persuasive and therefore more lucrative, AdTech companies collect massive troves of data about people to target them with ads tailored to their presumed interests and desires. The revenue generated by digital advertising pays for most of the services available on the internet today.

Most internet companies offer their website, app, or content for free, or charge a negligible fee that does not reflect the full cost of offering these services. Instead of asking people to pay for these services with money, companies require people to give up their data and attention, often without their knowledge or meaningful consent.[29] Companies then traffic their users’ data into a complex ecosystem of AdTech companies, data brokers, and others in a set of highly profitable transactions that make up a $378.16 billion industry.[30]

Here’s how a child using an EdTech app to attend her school online might interact with the AdTech industry. The same illustration applies to a child using an EdTech website to attend school online.

- EdTech companies that make educational apps for children decide to send a child’s personal data to third-party companies and possibly to sell ads in their apps, in order to generate revenue.

- AdTech companies help put ads in apps. They make packages of code, such as software development kits (SDKs) and other tracking technologies, for app makers to insert into their apps to personalize and display ads to their users. Once installed in an app, this code collects data that the AdTech company may use to target advertising, whether on the EdTech product or on another site or app.

- A child opens the EdTech app that their school uses for online learning and logs in for class.

- Instantly, the app begins to collect personal data about the child. This can include who the child is, where she is, what she does, who she interacts with in her virtual classroom, and what kind of device her parents can afford for her to use.

- This data can be sent to AdTech companies, either by the EdTech app, or directly by the AdTech SDKs embedded in the app. In the process, AdTech companies assign an ID number to the child, so that they can piece together the data they receive to build a profile on her.

- Some AdTech companies will also follow the child across the internet and over time. Some may search for even more information about her from public and private sources, adding definition and detail to an intimate profile of the child.

- AdTech companies’ sophisticated algorithms may analyze the trove of data received from the app. They can guess at the child’s personal characteristics and interests (for example, that she’s likely to be female), and predict her future behavior (this child is likely to buy a toy).

- AdTech companies may use these insights to sell to advertisers the ability to target ads to people. These targeted ads can appear on other apps and websites. This happens through real-time bidding platforms, where algorithms engage in a high-frequency auction amongst advertisers to sell off the chance to show an ad to a user—in this case, a child—to the highest bidder. From start to finish, the automated process of buying and selling between advertisers takes less than a hundred milliseconds and takes place tens of billions of times each day.[31]

- These insights can also be sold or shared with data brokers, law enforcement and governments, or others who wish to target a defined group of people with similar characteristics online.
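The auction step described in the list above can be sketched as a first-price sealed-bid auction with a response deadline. This is a simplification under stated assumptions: the bidder names, prices, and 100 ms deadline are hypothetical (the deadline echoes the figure of less than a hundred milliseconds), and real exchanges differ in their pricing rules (some have used second-price auctions) and in the data they share with bidders.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Bid:
    bidder: str        # hypothetical advertiser or bidding platform
    price_cpm: float   # offered price per thousand impressions
    latency_ms: int    # time the bidder took to respond


def run_auction(bids: List[Bid], deadline_ms: int = 100) -> Optional[Bid]:
    """First-price auction: the highest bid that arrived before the deadline wins."""
    on_time = [bid for bid in bids if bid.latency_ms <= deadline_ms]
    if not on_time:
        return None
    return max(on_time, key=lambda bid: bid.price_cpm)


bids = [
    Bid("bidder-a", 2.10, 40),
    Bid("bidder-b", 3.50, 120),  # highest price, but too late to count
    Bid("bidder-c", 2.75, 80),
]
winner = run_auction(bids)
print(winner.bidder if winner else "no winner")
```

Because the winner depends on which bids arrive in time and at what price, repeated runs of the same page view can send a child’s data to different recipients, which is one reason test results can vary.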

A handful of the world’s most valuable internet companies own entire AdTech supply chains. Alibaba, Amazon, Facebook (Meta),[32] Google, Microsoft, Tencent, and Yandex offer digital services that serve as the primary channels that most of the world relies on to engage with the internet.[33] In turn, they collect extensive data about the billions of people who use or interact with these platforms. They analyze this data to infer and create new information about people, then commercialize those insights for advertising—often on their own real-time bidding platforms.

These AdTech companies may also draw upon their vast troves of data to build and offer finely-tuned tracking technologies, prediction models, and microtargeting tools to help advertisers reach their audiences. As further described in Chapter 3, these tools are embedded in most websites and apps that people use every day, enabling these AdTech companies to collect and receive data not just from people directly using their services, but from anyone who encounters their data tracking embedded across the internet. The unparalleled power of these dominant tech companies to collect, track, and combine data across much of the internet results in a powerful and pervasive surveillance of people’s lives that is extremely difficult to avoid.[34]

II. Hidden Surveillance: Children’s Data Harvested

How dare they? How dare [these companies] peep into my private life?

—Rodin R., 9-year-old student, Istanbul, Turkey[35]

Children’s Data and their Right to Privacy

Privacy is a human right.[36] Recognized under international and regional human rights treaties, this right encompasses three connected components: the freedom from intrusion into our private lives, the right to control information about ourselves, and the right to a space in which we can freely express our identities.[37]

Privacy is about autonomy and control over one’s life. It is the ability to define for ourselves who we are to the world, on our own terms. This is especially important for children, who are entitled to special protections that guard their privacy and the space for them to grow, play, and learn.[38]

Children’s privacy is vital to ensuring their safety, agency, and dignity.[39] At school, privacy enables the very purpose of education by providing the space for children to develop their personalities and abilities to their fullest potential.[40] For children who are survivors of abuse, privacy might mean the freedom to live safely, without exposing where they live, play, and go to school.[41] For lesbian, gay, bisexual and transgender (LGBT) children, privacy could mean the difference between seeking life-saving information and being sent to jail, or worse.[42]

As children spend increasing amounts of their lives online, international human rights bodies have recognized that even the mere generation, collection, and processing of a child’s personal data can threaten their privacy, because in the process they lose control over information that could put their privacy at risk.[43] Data about children’s identities, activities, communications, emotions, health, and relationships merit special consideration, as the handling of such data may result in arbitrary or unlawful abuses of children’s privacy and in harms that may continue to affect them later in life.[44]

The United Nations Committee on the Rights of the Child has emphasized that any digital surveillance of children, together with any associated automated processing of their data, should not be conducted routinely, indiscriminately, or without the child’s knowledge or, in the case of very young children, that of their parent or caregiver.[45] Moreover, it should not take place “without the right to object to such surveillance, in commercial settings and educational and care settings,” and “consideration should always be given to the least privacy-intrusive means available to fulfil the desired purpose.”[46] Any restriction upon a child’s privacy is only permissible if it meets the standards of legality, necessity, and proportionality.[47]

The unprecedented, mass use of education technologies (EdTech) by schools during the pandemic without adequate privacy protections drastically compromised children’s right to privacy. Recognizing this, the UN special rapporteur on the right to privacy warned that, “Schools and educational processes need not and should not undermine the enjoyment of privacy and other rights, wherever or however education occurs.”[48]

As described below, many EdTech products endorsed by governments and used by children to continue learning during Covid-19 school closures were found to harvest children’s data unnecessarily and disproportionately, for purposes unrelated to their education. Worse still, this data collection took place in virtual classrooms and educational settings online, without giving children the ability to object to such surveillance.[49] In most instances, it was impossible for children to opt out of such data collection without opting out of compulsory schooling and giving up on learning altogether during the pandemic.

Finding Out Who Children Are

To figure out who people are on the internet, advertising technology (AdTech) companies tag each person with a string of numbers and letters that acts as a persistent and unique identifier: it points to a single child or their device, and it does not change.[50] While the tools described in this discussion are ascribed to AdTech companies, the same tools can be used by other companies, including EdTech companies, to collect data about how their users (including children) use the product. Information about how a user or customer interacts with the product is useful, for example, for the company to improve its product and user experience. For simplicity, this section focuses on AdTech companies, but the same concepts apply to technology companies outside AdTech.

Persistent identifiers enable AdTech companies to infer the interests and characteristics of individual children. Every time a child connects to the internet and comes into contact with tracking technology, any information collected about that child—where they live, who their friends are, what kind of device their family can afford for them—is tied back to the identifier associated with them by that AdTech company, resulting in a comprehensive profile over time. Data tied together in this way do not need a real name to be able to target a real child or person.
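How scattered observations accumulate into one profile under a persistent identifier can be sketched as grouping events by that identifier. In this Python sketch the identifiers, attributes, and values are hypothetical. Note that no real name appears anywhere, yet each profile still singles out one device.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Event = Tuple[str, str, str]  # (persistent identifier, attribute, value)


def build_profiles(events: Iterable[Event]) -> Dict[str, Dict[str, List[str]]]:
    """Merge events from many apps and sites into one profile per identifier."""
    profiles: Dict[str, Dict[str, List[str]]] = defaultdict(lambda: defaultdict(list))
    for identifier, attribute, value in events:
        values = profiles[identifier][attribute]
        if value not in values:  # keep each distinct value once, in first-seen order
            values.append(value)
    # Convert the nested defaultdicts to plain dicts for display.
    return {ident: dict(attrs) for ident, attrs in profiles.items()}


# Hypothetical events collected by one tracker in different places.
events = [
    ("id-7f3a", "app", "learning app"),
    ("id-7f3a", "city", "Istanbul"),
    ("id-7f3a", "app", "game app"),
    ("id-7f3a", "device", "low-cost phone"),
    ("id-91c2", "app", "learning app"),
]
print(build_profiles(events))
```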

In addition, computers can correctly re-identify virtually any person from an anonymized dataset, using just a few random pieces of anonymous information.[51] Given the risks of re-identification, many existing data protection laws recognize persistent identifiers as personal information, granting them the same considerations and legal protections.[52]
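One way to see why a few innocuous attributes can re-identify someone is to measure how many records in a dataset are unique on a combination of attributes (often called quasi-identifiers): a record that is unique can be linked to a person by anyone who knows those attributes from another source. The sketch below uses a small hypothetical dataset, and the attribute names are assumptions for illustration.

```python
from collections import Counter
from typing import Dict, List, Sequence


def unique_fraction(records: List[Dict[str, str]], quasi_identifiers: Sequence[str]) -> float:
    """Fraction of records whose combination of the given attributes appears only once."""
    if not records:
        return 0.0
    keys = [tuple(record[attr] for attr in quasi_identifiers) for record in records]
    counts = Counter(keys)
    return sum(1 for key in keys if counts[key] == 1) / len(records)


# Hypothetical "anonymized" records: no names, only a few attributes each.
records = [
    {"city": "Istanbul", "birth_year": "2012", "device": "phone-A"},
    {"city": "Istanbul", "birth_year": "2012", "device": "phone-A"},
    {"city": "Istanbul", "birth_year": "2012", "device": "phone-B"},
    {"city": "Ankara", "birth_year": "2011", "device": "phone-A"},
]
print(unique_fraction(records, ["city"]))                          # 0.25
print(unique_fraction(records, ["city", "birth_year", "device"]))  # 0.5
```

Each added attribute splits the groups further, so the share of uniquely identifiable records tends to rise as more data points are combined.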

Some persistent identifiers are built solely to be used for advertising. Other identifiers identify and track people across multiple devices, across the internet, or trail them from the online world into the physical world. And some identifiers are so inescapably tenacious that they are impossible to avoid or get rid of, without throwing one’s device away in the trash.

Apps: Persistent Identifiers

Advertising Identifiers

Of the 73 EdTech apps examined by Human Rights Watch, 41 apps (56 percent) were found to have the ability to collect their users’ advertising IDs. This allowed these apps to tag children and identify their devices for the sole purpose of advertising to them.

An advertising ID is a persistent identifier that exists for a single use: to enable advertisers to track a person, over time and across different apps installed on their device, for advertising purposes. For those using an Android device, this is called the Android Advertising ID (AAID). An AAID is neither necessary nor relevant for an app to function; Google’s developer guidelines stipulate that app developers must “only use an Advertising ID for user profiling or ads use cases.”[53]

The 41 apps that were found to have the capability to collect AAID were endorsed by 29 governments for children’s learning during Covid-19. Altogether, these apps may identify, tag, and track an estimated 6.24 billion users, including children.

Of these, 33 apps appear to have the ability to collect AAID from an estimated 86.9 million children, because their own materials describe and appear to market them for children’s education, with children apparently intended as their primary users.

| App | Country | Apparently designed for use by children? | Developer | Estimated Users[54] |
|---|---|---|---|---|
| Minecraft: Education Edition | Australia: Victoria | Yes | Private | 500,000 |
| Cisco Webex | Australia: Victoria, Japan, Poland, Spain, Republic of Korea, Taiwan, United States: California | No | Private | 1,000,000 |
| Descomplica | Brazil: São Paulo | Yes | Private | 1,000,000 |
| Stoodi | Brazil: São Paulo | Yes | Private | 1,000,000 |
| Storyline Online | Canada: Quebec | Yes | Private | 50,000 |
| Remind | Colombia | Yes | Private | 10,000,000 |
| Dropbox | Colombia | No | Private | 1,000,000,000 |
| Edmodo | Colombia, Egypt, Ghana, Nigeria, Romania, Thailand | Yes | Private | 10,000,000 |
| Padlet | Colombia, Germany: Bavaria, Romania | No | Private | 5,000,000 |
| SchoolFox | Germany: Bavaria | Yes | Private | 100,000 |
| itslearning | Germany: Bavaria | Yes | Private | 1,000,000 |
| Ghana Library App | Ghana | No | Government | 10,000 |
| Diksha | India: Maharashtra, National, Uttar Pradesh | Yes | Government | 10,000,000 |
| e-Pathshala | India: Maharashtra, National, Uttar Pradesh | Yes | Government | 1,000,000 |
| Rumah Belajar | Indonesia | Yes | Government | 1,000,000 |
| Quipper | Indonesia | Yes | Private | 1,000,000 |
| Ruangguru | Indonesia | Yes | Private | 10,000,000 |
| Kelas Pintar | Indonesia | Yes | Private | 1,000,000 |
| Shad | Iran | Yes | Government | 18,000,000[55] |
| Newton | Iraq | Yes | Government | 50,000 |
| WeSchool | Italy | Yes | Private | 1,000,000 |
| schoolTakt | Japan | Yes | Private | 1,000 |
| Study Sapuri | Japan | Yes | Private | 500,000 |
| Bilimland | Kazakhstan | Yes | Private | 500,000 |
| Daryn Online | Kazakhstan | Yes | Private | 1,000,000 |
| Kundelik | Kazakhstan | Yes | Private | 1,000,000 |
| Muse | Pakistan | Yes | Private | 10,000 |
| Taleemabad | Pakistan | Yes | Private | 1,000,000 |
| Naver Band | Republic of Korea | No | Private | 50,000,000 |
| KakaoTalk | Republic of Korea | No | Private | 100,000,000 |
| Miro | Romania | No | Private | 1,000,000 |
| Kinderpedia | Romania | Yes | Private | 10,000 |
| My Achievements | Russian Federation | Yes | Government | 100 |
| iEN | Saudi Arabia | Yes | Government | 500,000 |
| Extramarks | South Africa | Yes | Private | 100,000 |
| Nenasa | Sri Lanka | Yes | Government | 50,000 |
| PaGamO | Taiwan | Yes | Private | 100,000 |
|  | Taiwan | No | Private | 5,000,000,000 |
| Eğitim Bilişim Ağı | Turkey | Yes | Government | 10,000,000 |
| Özelim Eğitimdeyim | Turkey | Yes | Government | 500,000 |
| Schoology | United States: Texas | Yes | Private | 5,000,000 |
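The headline figures in this section can be re-derived by summing the Estimated Users column of the table above. The Python sketch below is a consistency check under two assumptions: the rows are transcribed correctly, and one Taiwan row whose app name is missing in the source table is kept under a placeholder name.

```python
# Rows transcribed from the table above:
# (app, apparently designed for children?, developer, estimated users).
# One Taiwan row has no app name in the source; it is kept as a placeholder.
APPS = [
    ("Minecraft: Education Edition", True, "Private", 500_000),
    ("Cisco Webex", False, "Private", 1_000_000),
    ("Descomplica", True, "Private", 1_000_000),
    ("Stoodi", True, "Private", 1_000_000),
    ("Storyline Online", True, "Private", 50_000),
    ("Remind", True, "Private", 10_000_000),
    ("Dropbox", False, "Private", 1_000_000_000),
    ("Edmodo", True, "Private", 10_000_000),
    ("Padlet", False, "Private", 5_000_000),
    ("SchoolFox", True, "Private", 100_000),
    ("itslearning", True, "Private", 1_000_000),
    ("Ghana Library App", False, "Government", 10_000),
    ("Diksha", True, "Government", 10_000_000),
    ("e-Pathshala", True, "Government", 1_000_000),
    ("Rumah Belajar", True, "Government", 1_000_000),
    ("Quipper", True, "Private", 1_000_000),
    ("Ruangguru", True, "Private", 10_000_000),
    ("Kelas Pintar", True, "Private", 1_000_000),
    ("Shad", True, "Government", 18_000_000),
    ("Newton", True, "Government", 50_000),
    ("WeSchool", True, "Private", 1_000_000),
    ("schoolTakt", True, "Private", 1_000),
    ("Study Sapuri", True, "Private", 500_000),
    ("Bilimland", True, "Private", 500_000),
    ("Daryn Online", True, "Private", 1_000_000),
    ("Kundelik", True, "Private", 1_000_000),
    ("Muse", True, "Private", 10_000),
    ("Taleemabad", True, "Private", 1_000_000),
    ("Naver Band", False, "Private", 50_000_000),
    ("KakaoTalk", False, "Private", 100_000_000),
    ("Miro", False, "Private", 1_000_000),
    ("Kinderpedia", True, "Private", 10_000),
    ("My Achievements", True, "Government", 100),
    ("iEN", True, "Government", 500_000),
    ("Extramarks", True, "Private", 100_000),
    ("Nenasa", True, "Government", 50_000),
    ("PaGamO", True, "Private", 100_000),
    ("(name missing in source table)", False, "Private", 5_000_000_000),
    ("Eğitim Bilişim Ağı", True, "Government", 10_000_000),
    ("Özelim Eğitimdeyim", True, "Government", 500_000),
    ("Schoology", True, "Private", 5_000_000),
]

APPS_EXAMINED = 73  # total number of apps examined, from the text

total_users = sum(users for _, _, _, users in APPS)
child_apps = [row for row in APPS if row[1]]
gov_apps = [row for row in APPS if row[2] == "Government"]
child_users = sum(row[3] for row in child_apps)
gov_users = sum(row[3] for row in gov_apps)
share_pct = round(100 * len(APPS) / APPS_EXAMINED)

print(f"{len(APPS)} of {APPS_EXAMINED} apps ({share_pct} percent)")
print(f"All estimated users: {total_users:,}")
print(f"{len(child_apps)} apps apparently designed for children: {child_users:,}")
print(f"{len(gov_apps)} government-built apps: {gov_users:,}")
```

Run as written, the sums come to 6,243,981,100, 86,971,100, and 41,110,100 estimated users, consistent with the figures of 6.24 billion, 86.9 million, and 41.1 million given in the text, alongside 33 apps apparently designed for children, 11 government-built apps, and 41 of 73 apps (56 percent).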

None of these apps allowed their users to decline to be tracked. In fact, this data collection is invisible to the child, who simply sees the app’s interface on their device. This activity is even more covert in 27 apps that fail to inform their students—either through their privacy policy, or elsewhere on their product—that the app and its embedded third-party AdTech trackers may collect their device’s AAIDs in order to track, profile, and target students with advertising. In doing so, these apps deny children, parents, and teachers knowledge of this practice and the ability to consent, and impede their right to effective remedy (as discussed in Chapter 4).[56]

Collectively, these EdTech apps may have provided 33 AdTech companies with access to their students’ AAIDs. This was done through software development kits (SDKs), or packages of code embedded in an EdTech app that can be used to facilitate the transmission of users’ personal data to advertisers.

When reached for comment, Cisco stated that Webex does not collect users’ AAIDs, and that it does not share user data with third-party companies that own the SDKs embedded in Webex.

Notably, nine governments—Ghana, India, Indonesia, Iran, Iraq, Russia, Saudi Arabia, Sri Lanka, and Turkey—directly built and offered eleven learning apps that may collect AAID from children. In doing so, these governments granted themselves the ability to track an estimated 41.1 million students and teachers purely for advertising and monetization.

Some governments disclosed in their app’s privacy policy that the app collects students’ AAID for commercial purposes. Rumah Belajar, for example, is an EdTech website and app developed and operated by Indonesia’s Ministry of Education and Culture to provide online education to preschool, primary, and secondary school students during the pandemic.[57] Through Rumah Belajar’s privacy policy, the Indonesian government discloses that it automatically collects children’s “unique device identifiers” and “mobile device unique ID,” which may be used to “show advertisements to you,” “to advertise on third party websites to you after you visited our service,” and shared with third party “business partners” so that they can “offer you certain products, services or promotions.”[58]

Through dynamic analysis commissioned by Human Rights Watch and conducted by the Defensive Lab Agency, Human Rights Watch detected students’ AAID sent from Rumah Belajar to Google and to Facebook. Specifically, children’s AAID were sent to the Google-owned domain app-measurement.com, and to the Facebook-owned domain graph.facebook.com.[59]

Indonesia does not have a data protection law, or specific regulations that protect children’s data privacy. A draft data protection bill, introduced in January 2020 and pending further discussion in the House of Representatives as of September 2021, does not provide dedicated protections for children.[60]

In contrast, Eğitim Bilişim Ağı, developed by Turkey’s Ministry of National Education for preschool, primary, and secondary school students to continue learning during Covid-19 school closures, does not provide a privacy policy at all. Nor does the app provide a disclosure elsewhere on the product to notify students that their AAID is collected and sent to third-party companies for advertising purposes.[61]

Through dynamic analysis, Human Rights Watch detected students’ AAIDs being transmitted from Eğitim Bilişim Ağı to Google via the Google-owned domains www.googleadservices.com and app-measurement.com. The domain www.googleadservices.com is operated by Google Ads, the company’s online advertising platform. Google Ads uses the information it collects to understand a person’s interests, and auctions off to the highest bidder the chance to show an ad to people in the advertiser’s target audience.[62]

Neither Indonesia’s Ministry of Education and Culture nor Turkey’s Ministry of National Education responded to Human Rights Watch’s requests for comment.

The collection of AAID from children is neither necessary nor proportionate to the purpose of providing them with education, and risks exposing children to rights abuses as discussed in Chapter 3.

Inescapable Surveillance

Human Rights Watch found 14 EdTech apps with access to either the Wi-Fi Media Access Control (MAC) address or the International Mobile Equipment Identity (IMEI) number on children’s devices. These two persistent identifiers are so durable that a child or their parent cannot avoid or protect against the resulting surveillance, even by taking the extraordinary step of wiping the phone or performing a factory reset.

Eight apps granted themselves the ability to collect the Wi-Fi MAC address of a device’s networking hardware. Located in any device that can connect to the internet, this identifier is extremely persistent and cannot be changed by wiping the device clean with a factory reset. Any instance of an app collecting the Wi-Fi MAC address is notable; in 2015, Google banned developers from accessing the Wi-Fi MAC address over privacy concerns that it was being used by third-party tracking companies as a persistent identifier that could not realistically be changed by users.[63]

Recommended by 13 governments, these apps had the ability to collect the Wi-Fi MAC addresses of an estimated 15.6 billion users. Three of these apps, which their own materials describe and appear to market for children’s education, appear able to do so from an estimated 610,000 children.

| App | Country | Apparently designed for use by children? | Developer | Estimated Users[64] |
|---|---|---|---|---|
| Minecraft: Education Edition | Australia: Victoria | Yes | Private | 500,000 |
| YouTube | India: Uttar Pradesh, Malaysia, Nigeria, United Kingdom: England | No | Private | 10,000,000,000 |
| Padlet | Colombia, Germany: Bavaria, Romania | No | Private | 5,000,000 |
| LINE | Japan, Taiwan | No | Private | 500,000,000 |
| Muse | Pakistan | Yes | Private | 10,000 |
| KakaoTalk | Republic of Korea | No | Private | 100,000,000 |
| Extramarks | South Africa | Yes | Private | 100,000 |
| | Taiwan | No | Private | 5,000,000,000 |

Eight apps were found with the ability to collect International Mobile Equipment Identity (IMEI) numbers. Every mobile device has an IMEI number baked into its hardware, which is used to connect to cellular networks and to trace stolen phones. An IMEI cannot be changed by ordinary users, and tampering with it is illegal in some countries.[65] The only practical means of changing one’s IMEI is to throw the phone away and purchase a new one.

Recommended for children’s learning by 12 governments, these apps may have collected IMEI numbers from an estimated 5.6 billion users in aggregate. Four of these apps are apparently designed exclusively for children, and so may collect IMEI numbers from an estimated 3.1 million children in Brazil, Indonesia, Pakistan, and South Africa.

| App | Country | Apparently designed for use by children? | Developer | Estimated Users[66] |
|---|---|---|---|---|
| Stoodi | Brazil: São Paulo | Yes | Private | 1,000,000 |
| Kelas Pintar | Indonesia | Yes | Private | 1,000,000 |
| LINE | Japan, Taiwan | No | Private | 500,000,000 |
| Taleemabad | Pakistan | Yes | Private | 1,000,000 |
| Telegram | Russia | No | Private | 1,000,000,000 |
| KakaoTalk | Republic of Korea | No | Private | 100,000,000 |
| Extramarks | South Africa | Yes | Private | 100,000 |
| | Taiwan | No | Private | 5,000,000,000 |

Human Rights Watch found nine apps potentially engaging in ID bridging. When the AAID is collected and bundled alongside another persistent device identifier, the resulting “bridge” between the two is so powerful that it bypasses any privacy controls that the user may have set on their device to protect themselves. This allows companies to keep tracking users as though their AAID could never be reset, in effect creating an accurate advertising profile of a user that lasts in perpetuity.[67]

Given the risks that ID bridging poses to users’ privacy, Google’s own policies warn developers that the “advertising identifier may not be connected to persistent device identifiers (for example: SSAID, MAC address, IMEI, etc.) for any advertising purpose.”[68]
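To illustrate why bridging defeats an AAID reset, the sketch below models a hypothetical tracker that keys its profiles on a persistent hardware identifier (here a made-up IMEI-like string) and links every advertising ID it sees to that key. The class and method names are invented for illustration and do not describe how any specific app or SDK named in this report is implemented.

```python
from collections import defaultdict


class BridgingTracker:
    """Hypothetical tracker that bridges resettable ad IDs to a persistent hardware ID."""

    def __init__(self):
        # Profiles are keyed by the persistent identifier (e.g. IMEI or Wi-Fi MAC),
        # not by the advertising ID, so they survive advertising ID resets.
        self.profiles = defaultdict(list)
        self.aaid_to_device = {}

    def record(self, persistent_id: str, aaid: str, event: str) -> None:
        # Each event arrives bundled with both identifiers: this pairing is the "bridge".
        self.aaid_to_device[aaid] = persistent_id
        self.profiles[persistent_id].append(event)

    def profile_for_aaid(self, aaid: str) -> list:
        # Any AAID, old or new, resolves to the same long-lived profile.
        device = self.aaid_to_device.get(aaid)
        return self.profiles[device] if device else []


tracker = BridgingTracker()
tracker.record("IMEI-0001", "aaid-original", "opened math lesson")
# The user resets their advertising ID in device settings...
tracker.record("IMEI-0001", "aaid-after-reset", "watched cartoon")
print(tracker.profile_for_aaid("aaid-after-reset"))
# ['opened math lesson', 'watched cartoon']
```

Because the profile is stored under the identifier the user cannot change, resetting the AAID only adds a new alias to the same record rather than starting a fresh one.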

| App | Country | Apparently designed for use by children? | Potential ID bridging | Developer | Estimated Users[69] |
|---|---|---|---|---|---|
| Minecraft: Education Edition | Australia: Victoria | Yes | Wi-Fi MAC | Private | 500,000 |
| Stoodi | Brazil: São Paulo | Yes | IMEI | Private | 1,000,000 |
| Padlet | Germany: Bavaria, Romania, Colombia | Yes | Wi-Fi MAC | Private | 1,000,000 |
| Kelas Pintar | Indonesia | Yes | IMEI | Private | 1,000,000 |
| Muse | Pakistan | Yes | Wi-Fi MAC | Private | 10,000 |
| Taleemabad | Pakistan | Yes | IMEI | Private | 500,000 |
| KakaoTalk | Republic of Korea | No | Wi-Fi MAC, IMEI | Private | 100,000,000 |
| Extramarks | South Africa | Yes | Wi-Fi MAC, IMEI | Private | 100,000 |
| | Taiwan | No | Wi-Fi MAC, IMEI | Private | 5,000,000,000 |

Muse, for example, was conclusively found to be engaging in ID bridging. Through dynamic analysis, Human Rights Watch observed Muse collecting and transmitting bridged ID data to Facebook through the Facebook-owned domain graph.facebook.com.

Of the 14 apps found to grant themselves access to their users’ Wi-Fi MAC address or IMEI, 10 did not disclose this in their privacy policies. None of the nine apps found to potentially engage in ID bridging disclosed this practice to their users.

When reached for comment, Microsoft denied that its products engage in ID bridging, and Padlet responded that it did not intend to collect the data needed for ID bridging. In their responses, Facebook (Meta) and Muse did not answer whether their products engage in ID bridging. Kakao declined to respond to our request for comment; Extramarks, Kelas Pintar, Stoodi, and Taleemabad did not respond.[70]

These practices are not necessary for EdTech apps to function or for the purpose of providing children’s education.

Websites: Canvas Fingerprinting

Of the many tracking technologies that websites can use to identify people and their behaviors online, one of the most invasive is canvas fingerprinting. Virtually impossible for users to block, this technique works by drawing hidden shapes and text on a user’s webpage. Because each computer draws these shapes slightly differently, these images can be used by marketers and others to assign a unique number to a user’s device, which is then used as a singular identifier to track the user’s activities across the internet.[71] Users cannot protect themselves by using standard web browser privacy settings or ad-blocking software.
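In a real page, the fingerprinting script runs in the browser: it draws to a hidden HTML canvas, reads the result back (for example with the canvas `toDataURL()` method), and hashes it. The Python sketch below simulates only the final hashing step, using invented pixel values for three hypothetical machines; the function name `fingerprint` and the 16-character truncation are illustrative assumptions, not taken from any script listed in this report.

```python
import hashlib


def fingerprint(rendered_pixels: bytes) -> str:
    # Hash the raw pixels of the hidden drawing into a short, stable identifier.
    return hashlib.sha256(rendered_pixels).hexdigest()[:16]


# Hypothetical pixel output of the same hidden drawing on different machines.
# Real browsers differ subtly because of GPU, driver, font and anti-aliasing differences.
laptop_a_visit_1 = bytes([200, 201, 199, 50, 51, 52])
laptop_a_visit_2 = bytes([200, 201, 199, 50, 51, 52])  # same machine, later visit
laptop_b = bytes([200, 202, 199, 50, 51, 52])           # one color value differs by 1

print(fingerprint(laptop_a_visit_1) == fingerprint(laptop_a_visit_2))  # True
print(fingerprint(laptop_a_visit_1) == fingerprint(laptop_b))          # False
```

Because the identifier is recomputed from the device itself on every visit and nothing is stored in the browser, clearing cookies or browsing history does not change it, which is why standard privacy settings offer little protection.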

Of the 124 EdTech websites examined by Human Rights Watch, eight websites were found “fingerprinting” their users and tracking them across the internet.

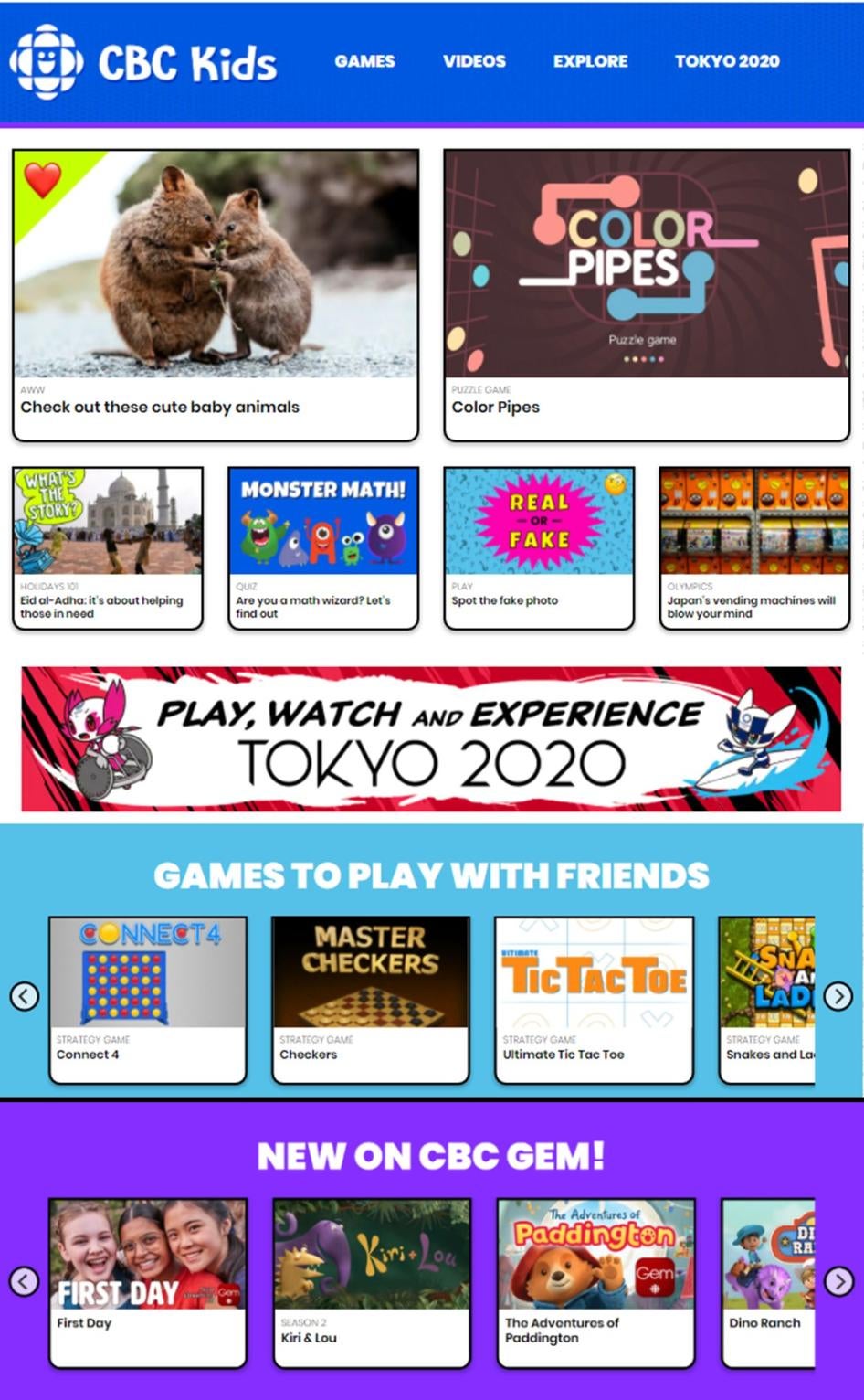

Notably, two of these websites are directly built and operated by government—Moscow Electronic School (Russia) and Digital Lessons (Russia)—for children’s educational use. Another website, CBC Kids (Canada), receives the majority of its funding from government.[72]

| Website | Country | Apparently designed for use by children? | Developer | Canvas fingerprinting script loaded from |
|---|---|---|---|---|
| CBC Kids | Canada: Quebec | Yes | Government | https://gem.cbc.ca/akam/11/4c588f3 https://www.cbc.ca/akam/11/b62e49a |
| WorkFlowy | Colombia | No | Private | https://workflowy.com/media/js/82cab8d21714ada491b4.js https://workflowy.com/media/js/auth_embed.min.js |
| Top Parent | India: Uttar Pradesh | Yes | Private | https://cdnjs.cloudflare.com/ajax/libs/fingerprintjs2/2.1.0/fingerprint2.min.js |
| WeSchool | Italy | Yes | Private | https://m.stripe.network/out-4.5.35.js |
| Z-kai | Japan | Yes | Private | https://spider.af/t/k5lcn2yw?s=01&o=9vd5xkmg7be&a=1623564108947&u= https://spider.af/t/k5lcn2yw?s=01& |
| iMektep | Kazakhstan | Yes | Private | https://st.vk.com/js/cmodules/mobile |
| Moscow Electronic School | Russia | Yes | Government | https://stats.mos.ru/ss2.min.js |
| Digital Lessons | Russia | Yes | Government | https://st.vk.com/js/cmodules/mobile |

One EdTech website, Z-kai, was endorsed by the Japanese Education Ministry for all elementary, middle, and high school students to learn core subjects during Covid-19 school closures.[73] Human Rights Watch observed Z-kai fingerprinting children in Japan by secretly drawing this image on their web browsers:

Two such canvas fingerprinting scripts were built and loaded on the Z-kai site by spider.af, a Japanese company that specializes in ensuring that advertisers’ intended audiences see their ads.[74]

Z-kai and spider.af did not respond to our request for comment.

It is not possible to determine the intent behind each website’s use of canvas fingerprinting, or how the product in which it is embedded uses it. However, none of these eight websites disclosed their use of canvas fingerprinting to their users. By not doing so, these companies effectively kept their users unaware that they were being invisibly identified and followed around the internet by tracking technology that is difficult to avoid or protect against.

This technique is neither proportionate nor necessary for these websites to function or deliver educational content to children. Its use on children in an educational setting infringes upon children’s right to privacy.

Tracking Where Children Are

Just thinking about my whole age group, the amount of data they share is not even funny. Our everyday lives, our locations. So, their whole lives must be in danger if their data is getting sold off. It’s really scary.

—Priyanka S., 16, Uttar Pradesh, India[75]

To know where a child is, and when, is to possess information so sensitive that some governments provide special protections against its misuse and the risks of “abduction, physical and mental abuse, sexual abuse and trafficking.”[76]

Information about a child’s physical location also reveals powerfully intimate details about their life far beyond their coordinates. Mobile phones have the ability to find and track a child’s precise physical location over time, including when and how long they were in any given place. Once collected, these data points can reveal such sensitive information as where a child lives and where they go to school, trips between divorced parents’ homes, and visits to a doctor’s office specializing in childhood cancer.

Even without names or other obviously identifiable information attached to location data, it is startlingly easy to identify real people, including children, without their awareness or consent. A New York Times investigation determined that just two precise location data points are enough to identify a person; journalists were, for example, able to identify a single child and where they live by tracing their daily route from home to school, as well as a middle-school math teacher by her classroom and her doctor’s office.[77]
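The arithmetic behind this kind of re-identification can be sketched with a toy dataset. The traces, device labels and coordinates below are entirely hypothetical (coordinates rounded to three decimal places, roughly 100 meters), and this is not the method the New York Times used; it only shows how each additional known place shrinks the set of matching “anonymous” devices.

```python
# Hypothetical, anonymized location traces: device label -> set of places visited,
# with coordinates rounded to 3 decimal places (roughly 100 m).
traces = {
    "device-17": {(41.015, 28.979), (41.021, 28.990), (41.030, 28.975)},
    "device-42": {(41.015, 28.979), (41.008, 28.960)},
    "device-93": {(41.021, 28.990), (41.044, 29.001)},
}


def matching_devices(known_points: set) -> list:
    # A device matches if its trace contains every point an observer already knows.
    return sorted(d for d, places in traces.items() if known_points <= places)


home = (41.015, 28.979)    # where the child sleeps
school = (41.021, 28.990)  # where the child spends school hours

print(matching_devices({home}))          # ['device-17', 'device-42']
print(matching_devices({home, school}))  # ['device-17']
```

One known place leaves two candidates; adding a second narrows the dataset to a single device, after which every other point in that trace can be attributed to the same child.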

At a time when many children were learning remotely from home under Covid-19 lockdowns, the surveillance of their physical presence through location data likely revealed their home addresses and the places most significant to them.