There’s plenty of evidence that human judgment can be clouded by emotions, prejudices, or even low blood sugar, and that this can have dire consequences for respect for human rights.

For centuries, the law has taken this into account, setting standards such as the “reasonable” man (or woman) or making allowances for diminished capacity. Research into cognitive bias is booming.

For this reason, many cheer our increasing reliance on big data and algorithms to aid, or even replace, predictive human decision-making. Machine learning tools may discern and learn from patterns in datasets too massive for the human mind to process. Safer self-driving cars, more accurate medical diagnoses, or even better military strategy might result, and these could save many lives.

But this superior computational power could come at a profound cost in the years ahead, causing us to lose faith in our own ability to discern the truth and to assign responsibility for bad decisions. Without someone to hold accountable, it is nearly impossible to vindicate human rights.

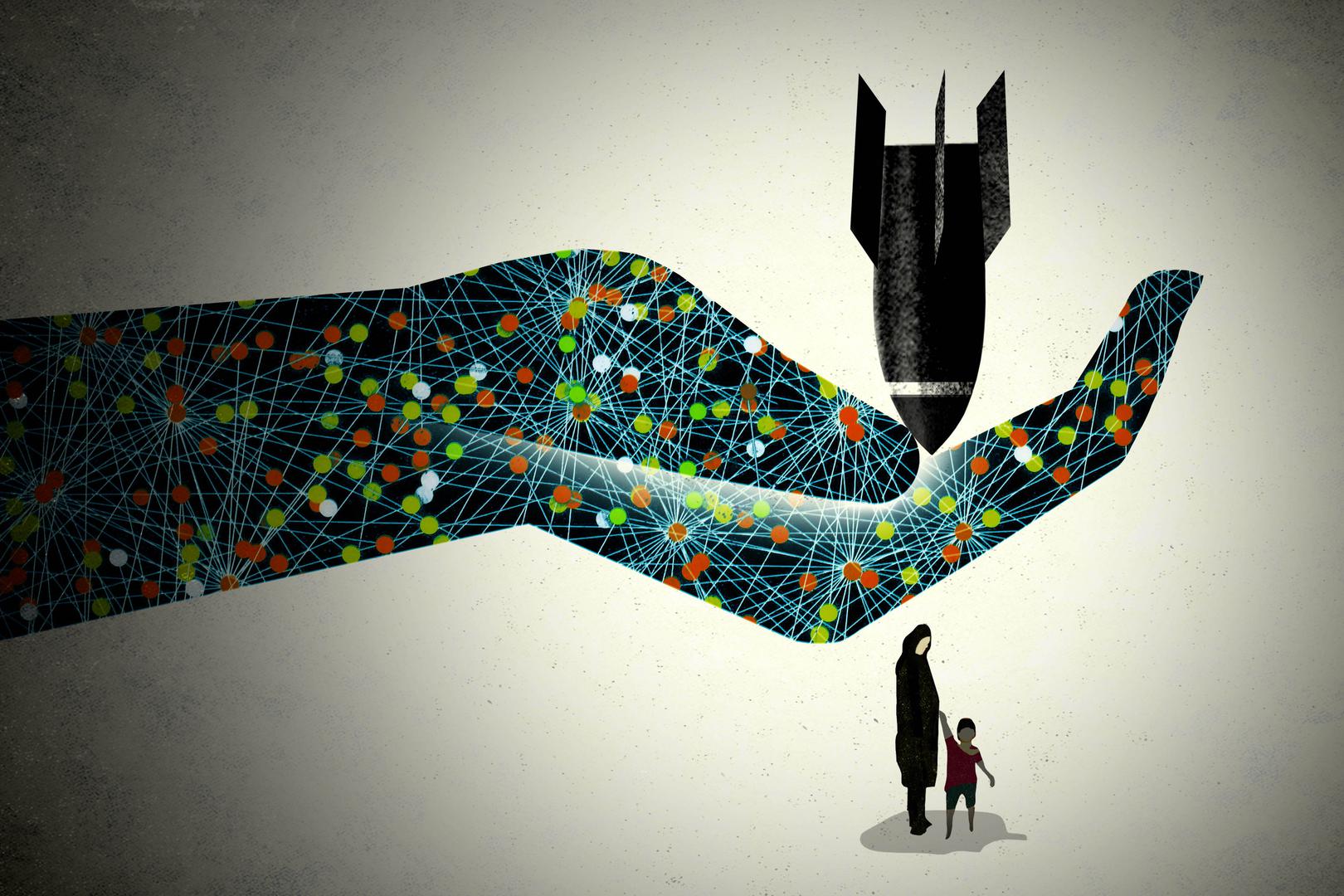

There is already widespread opposition to developing fully autonomous weapons, which would select and fire on targets without meaningful human control. Even those who believe that one day these “killer robots” could do better than humans at minimizing civilian casualties (itself a highly controversial proposition) might recoil at leaving the ultimate decision to unaccountable algorithms. Try explaining to the victims of a machine-learning massacre that this was a product design problem, not a war crime.

Another problem is the political reflex of demanding that the tech wizards of social media “fix” problems like fake news or hate speech. Corporations regulate for clicks and cash, not for the subtle balance between promoting free expression in democratic societies and protecting the rights of others. They will use algorithms, not well-trained jurists, to determine our rights, and even if they could explain how their tools make decisions, they would be loath to expose proprietary secrets.

Transparency may mitigate some problems of bias: if we could test the algorithm or audit the data it learns from, corrections might be possible. Data comes with our world’s prejudice, poverty, and unfairness baked in. Biased or incomplete data can reinforce discriminatory decision-making, as has been evident in tools that purport to predict crime, recidivism, or creditworthiness.

But transparency, even where achievable, can’t solve all problems. The sentencing decisions of a well-trained algorithm may be more “rational” than those of a hungry, angry, or prejudiced judge. Yet it offends human dignity to absolve the judge of responsibility for depriving another person of liberty for years. Truly hearing the accused, and drawing on compassion, empathy, and one’s lived human experience, is as essential as calculating risk to producing fair judgment and upholding human dignity. Sometimes the solution to human error is to improve human judgment and responsibility, not to encourage its abdication or atrophy.

Machine learning tools, like any technology, are a means, not an end. Where they are put to good purpose, they can advance accountability and rights. We should insist that these tools always remain under human control, and that they be applied only where they will enhance human rights, remedies for violations, and human responsibility. That’s a policy choice, and one our coming Robot Masters cannot make.