US law enforcement agencies’ use of Rekognition, an Amazon surveillance system that uses facial recognition powered by artificial intelligence, threatens the rights to peaceful assembly and to respect for private life, among others.

Human Rights Watch and other organizations have pointed this out in a letter to Amazon expressing strong concerns about the system. The American Civil Liberties Union (ACLU) of Northern California obtained documents showing that the system was being marketed to police departments, and the ACLU highlighted its potential dangers to rights.

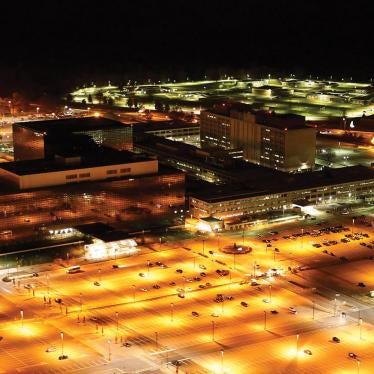

We should all be asking exactly how this technology is being used by police in Orlando, Florida, and Washington County, Oregon, as well as how it could be used elsewhere. (Motorola, which sells equipment to law enforcement, also has access to the system.)

But this is only part of the puzzle. People in the US also need to know whether law enforcement agents have been disclosing their use of this technology in criminal investigations to judges and defendants. If they have not, courts have had no opportunity to evaluate the system’s legality and accuracy.

Human Rights Watch recently published a detailed investigation of “parallel construction,” a practice in which the government conceals its use of a particular investigative technique (such as surveillance) from defendants and judges by deliberately creating an alternative explanation for how a piece of information was found. One of the risks Human Rights Watch identified is that, because of this practice, judges might never get to decide whether new technologies are constitutional and whether they sufficiently minimize their impact on rights.

A bill introduced in Illinois throws the potential consequences of the secret use of technologies such as Rekognition into high relief: If passed, the legislation could empower police to surveil peaceful protesters using drones equipped with facial recognition software. There is always a risk that biased or inadequately trained officers anywhere could single out protesters for investigations simply because they exercised their free-expression rights, and an airborne facial recognition tool could facilitate such conduct.

Concerns over technology like Rekognition are not limited to law enforcement uses. For example, such technology could be employed in the development of fully autonomous weapons (also known as “killer robots”) – which Human Rights Watch is campaigning to outlaw.

US lawmakers and courts need to make sure all government uses of systems relying on biometric data or artificial intelligence respect rights. But they can only do that if they – and we – know about those techniques in the first place.