Summary

In cases not covered by this Protocol or by other international agreements, civilians and combatants remain under the protection and authority of the principles of international law derived from established custom, from the principles of humanity and from the dictates of public conscience.

— Martens Clause, as stated in Additional Protocol I of 1977 to the Geneva Conventions

Fully autonomous weapons are one of the most alarming military technologies under development today. As such, there is an urgent need for states, experts, and the general public to examine these weapons closely under the Martens Clause, the unique provision of international humanitarian law that establishes a baseline of protection for civilians and combatants when no specific treaty law on a topic exists. This report shows how fully autonomous weapons, which would be able to select and engage targets without meaningful human control, would contravene both prongs of the Martens Clause: the principles of humanity and the dictates of public conscience. To comply with the Martens Clause, states should adopt a preemptive ban on the weapons’ development, production, and use.

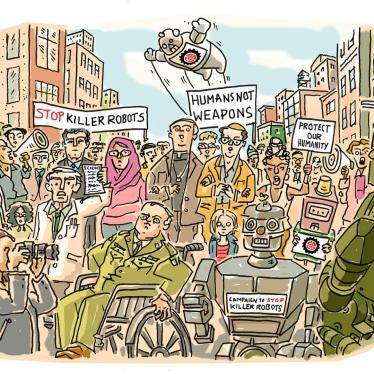

The rapid development of autonomous technology and artificial intelligence (AI) means that fully autonomous weapons could become a reality in the foreseeable future. Also known as “killer robots” and lethal autonomous weapons systems, they raise a host of moral, legal, accountability, operational, technical, and security concerns. These weapons have been the subject of international debate since 2013. In that year, the Campaign to Stop Killer Robots, a civil society coalition, was launched and began pushing states to discuss the weapons. After holding three informal meetings of experts, states parties to the Convention on Conventional Weapons (CCW) began formal talks on the topic in 2017. In August 2018, approximately 80 states will convene again for the next meeting of the CCW Group of Governmental Experts.

As CCW states parties assess fully autonomous weapons and the way forward, the Martens Clause should be a central element of the discussions. The clause, which is a common feature of international humanitarian law and disarmament treaties, declares that in the absence of an international agreement, established custom, the principles of humanity, and the dictates of public conscience should provide protection for civilians and combatants. The clause applies to fully autonomous weapons because they are not specifically addressed by international law. Experts differ on the precise legal significance of the Martens Clause, that is, whether it reiterates customary law, amounts to an independent source of law, or serves as an interpretive tool. At a minimum, however, the Martens Clause provides key factors for states to consider as they evaluate emerging weapons technology, including fully autonomous weapons. It creates a moral standard against which to judge these weapons.

The Principles of Humanity

Due to their lack of emotion and legal and ethical judgment, fully autonomous weapons would face significant obstacles in complying with the principles of humanity. Those principles require the humane treatment of others and respect for human life and human dignity. Humans are motivated to treat each other humanely because they feel compassion and empathy for their fellow humans. Legal and ethical judgment gives people the means to minimize harm; it enables them to make considered decisions based on an understanding of a particular context. As machines, fully autonomous weapons would not be sentient beings capable of feeling compassion. Rather than exercising judgment, such weapons systems would base their actions on pre-programmed algorithms, which do not work well in complex and unpredictable situations.

Showing respect for human life entails minimizing killing. Legal and ethical judgment helps humans weigh different factors to prevent arbitrary and unjustified loss of life in armed conflict and beyond. It would be difficult to recreate such judgment, developed over both human history and an individual life, in fully autonomous weapons, and they could not be pre-programmed to deal with every possible scenario in accordance with accepted legal and ethical norms. Furthermore, most humans possess an innate resistance to killing that is based on their understanding of the impact of loss of life, which fully autonomous weapons, as inanimate machines, could not share.

Even if fully autonomous weapons could adequately protect human life, they would be incapable of respecting human dignity. Unlike humans, these robots would be unable to appreciate fully the value of a human life and the significance of its loss. They would make life-and-death decisions based on algorithms, reducing their human targets to objects. Fully autonomous weapons would thus violate the principles of humanity on all fronts.

The Dictates of Public Conscience

Increasing outrage at the prospect of fully autonomous weapons suggests that this new technology also runs counter to the second prong of the Martens Clause, the dictates of public conscience. These dictates consist of moral guidelines based on a knowledge of what is right and wrong. They can be ascertained through the opinions of the public and of governments.

Many individuals, experts, and governments have objected strongly to the development of fully autonomous weapons. The majority of respondents in multiple public opinion surveys have registered opposition to these weapons. Experts, who have considered the issue in more depth, have issued open letters and statements that reflect conscience even better than surveys do. International organizations and nongovernmental organizations (NGOs), along with leaders in disarmament and human rights, peace and religion, science and technology, and industry, have felt compelled, particularly on moral grounds, to call for a ban on fully autonomous weapons. They have condemned these weapons as “unconscionable,” “abhorrent … to the sacredness of life,” “unwise,” and “unethical.”

Governments have cited compliance with the Martens Clause and moral shortcomings among their major concerns with fully autonomous weapons. As of July 2018, 26 states supported a preemptive ban, and more than 100 states had called for a legally binding instrument to address concerns raised by lethal autonomous weapons systems. Almost every CCW state party that spoke at the last meeting in April 2018 stressed the need to maintain human control over the use of force. The emerging consensus for preserving meaningful human control, which is effectively equivalent to a ban on weapons that lack such control, shows that the public conscience is strongly against fully autonomous weapons.

The Need for a Preemptive Ban Treaty

An assessment of fully autonomous weapons under the Martens Clause underscores the need for new law that is both specific and strong. Regulations that allowed for the existence of fully autonomous weapons would not suffice. For example, limiting use to certain locations would neither prevent the risk of proliferation to actors with little regard for humane treatment or human life, nor ensure respect for the dignity of civilians or combatants. Furthermore, the public conscience reveals widespread support for a ban on fully autonomous weapons, or its equivalent, a requirement for meaningful human control. To ensure compliance with both the principles of humanity and the dictates of public conscience, states should therefore preemptively prohibit the development, production, and use of fully autonomous weapons.

Recommendations

To avert the legal, moral, and other risks posed by fully autonomous weapons and the loss of meaningful human control over the selection and engagement of targets, Human Rights Watch and Harvard Law School’s International Human Rights Clinic (IHRC) recommend:

To CCW states parties

- Adopt, at their annual meeting in November 2018, a mandate to negotiate a new protocol prohibiting fully autonomous weapons systems, or lethal autonomous weapons systems, with a view to concluding negotiations by the end of 2019.

- Use the intervening Group of Governmental Experts meeting in August 2018 to present clear national positions and to reach agreement on the need to adopt a negotiating mandate at the November annual meeting.

- Develop national positions and adopt national prohibitions as key building blocks for an international ban.

- Express opposition to fully autonomous weapons, including on the legal and moral grounds reflected in the Martens Clause, in order to further develop the existing public conscience.

To experts in the private sector

- Oppose the removal of meaningful human control from weapons systems and the use of force.

- Publicly express explicit support for the call to ban fully autonomous weapons, including on the legal and moral grounds reflected in the Martens Clause, and urge governments to start negotiating new international law.

- Commit not to design or develop AI for use in the development of fully autonomous weapons via codes of conduct, statements of principles, and other measures that ensure the private sector does not advance the development, production, or use of fully autonomous weapons.

I. Background on Fully Autonomous Weapons

Fully autonomous weapons would be able to select and engage targets without meaningful human control. They represent an unacceptable step beyond existing armed drones because a human would not make the final decision about the use of force in individual attacks. Fully autonomous weapons, also known as lethal autonomous weapons systems and “killer robots,” do not exist yet, but they are under development, and military investments in autonomous technology are increasing at an alarming rate.

The risks of fully autonomous weapons outweigh their purported benefits. Proponents highlight that the new technology could save the lives of soldiers, process data and operate at greater speeds than traditional systems, and be immune to fear and anger, which can lead to civilian casualties. Fully autonomous weapons, however, raise a host of serious concerns, many of which Human Rights Watch has highlighted in previous publications. First, delegating life-and-death decisions to machines crosses a moral red line. Second, fully autonomous weapons would face significant challenges complying with international humanitarian and human rights law. Third, they would create an accountability gap because it would be difficult to hold anyone responsible for the unforeseen harm caused by an autonomous robot. Fourth, fully autonomous weapons would be vulnerable to spoofing and hacking. Fifth, these weapons would threaten global security because they could lead to an arms race, proliferate to actors with little respect for international law, and lower the threshold to war.[1]

This report focuses on yet another concern, which straddles law and morality—that is, the likelihood that fully autonomous weapons would contravene the Martens Clause. This provision of international humanitarian law requires states to take into account the principles of humanity and dictates of public conscience when examining emerging weapons technology. A common feature in the Geneva Conventions and disarmament treaties, the clause represents a legal obligation on states to consider moral issues.

The plethora of problems presented by fully autonomous weapons, including those under the Martens Clause, demand urgent action. A handful of states have proposed a wait-and-see approach, given that it is unclear what technology will be able to achieve. The high stakes involved, however, point to the need for a precautionary approach. Scientific uncertainty should not stand in the way of action to prevent what some scientists have referred to as the “third revolution in warfare, after gunpowder and nuclear arms.”[2] Countries should adopt a preemptive ban on the development, production, and use of fully autonomous weapons.

II. History of the Martens Clause

While the Martens Clause originated in a diplomatic compromise, it has served humanitarian ends. It states that in the absence of specific treaty law, established custom, the principles of humanity, and the dictates of public conscience provide protection for civilians and combatants. Since its introduction, the Martens Clause has become a common feature of the core instruments of international humanitarian law. The clause also appears in numerous disarmament treaties. The protections the Martens Clause provides and the legal recognition it has received highlight its value for examining emerging weapons systems that could cause humanitarian harm on the battlefield and beyond.

Origins of the Martens Clause

The Martens Clause first appeared in the preamble of the 1899 Hague Convention II containing the Regulations on the Laws and Customs of War on Land. In that iteration, the Martens Clause reads:

Until a more complete code of the laws of war is issued, the High Contracting Parties think it right to declare that in cases not included in the Regulations adopted by them, populations and belligerents remain under the protection and empire of the principles of international law, as they result from the usages established between civilized nations, from the laws of humanity, and the requirements of the public conscience.[3]

The clause thus provides a baseline level of protection to civilians and combatants when specific law does not exist.

Russian diplomat and jurist Fyodor Fyodorovich Martens proposed the Martens Clause as a way to break a negotiating stalemate at the 1899 Hague Peace Conference, which had been convened to adopt rules restraining war, reduce arms spending, and promote peace.[4] The great powers and lesser powers disagreed about how much authority occupying forces could exercise over the local population. The great powers insisted on a new treaty clarifying the rights and obligations of occupying forces, while the lesser powers opposed codifying provisions of an earlier political declaration that they believed did not adequately protect civilians. The Martens Clause provided fighters against foreign occupation the option of arguing that if specific provisions of the treaty did not cover them, they were entitled to at least such protection offered by principles of international law derived from custom, “the laws of humanity,” and “the requirements of the public conscience.”[5]

Modern Use of the Martens Clause

In the nearly 120 years since the adoption of the 1899 Hague Convention, the Martens Clause has been applied more broadly and become a staple of efforts to extend humanitarian protections during armed conflict. Seeking to reduce the impact of hostilities, numerous instruments of international humanitarian law and disarmament law have incorporated the provision.

Geneva Conventions and Additional Protocol I

When drafting the 1949 Geneva Conventions, the cornerstones of international humanitarian law,[6] negotiators wanted to ensure that certain protections would continue if a state party decided to withdraw from any of the treaties. The four Geneva Conventions contain the Martens Clause in their articles on denunciation, which address the implications of a state leaving the treaties.[7] In its authoritative commentary on the conventions, the International Committee of the Red Cross (ICRC), the arbiter of international humanitarian law, explains:

[I]f a High Contracting Party were to denounce one of the Geneva Conventions, it would continue to be bound not only by other treaties to which it remains a Party, but also by other rules of international law, such as customary law. An argumentum e contrario, suggesting a legal void following the denunciation of a Convention, is therefore impossible.[8]

Additional Protocol I, which was adopted in 1977, expands the protections afforded to civilians by the Fourth Geneva Convention.[9] The protocol contains the modern iteration of the Martens Clause, and the version used in this report:

In cases not covered by this Protocol or by other international agreements, civilians and combatants remain under the protection and authority of the principles of international law derived from established custom, from the principles of humanity and from the dictates of public conscience.[10]

By incorporating this language in its article on “General Principles and Scope of Application,” rather than confining it to a provision on denunciation, Additional Protocol I extends the application of the Martens Clause. According to the ICRC commentary:

There were two reasons why it was considered useful to include this clause yet again in the Protocol. First ... it is not possible for any codification to be complete at any given moment; thus, the Martens clause prevents the assumption that anything which is not explicitly prohibited by the relevant treaties is therefore permitted. Secondly, it should be seen as a dynamic factor proclaiming the applicability of the principles mentioned regardless of subsequent developments of types of situation or technology.[11]

The Martens Clause thus covers gaps in existing law and promotes civilian protection in the face of new situations or technology.

Disarmament Treaties

Since 1925, most treaties containing prohibitions on weapons also include the Martens Clause.[12] The clause is referenced in various forms in the preambles of the 1925 Geneva Gas Protocol,[13] 1972 Biological Weapons Convention,[14] 1980 Convention on Conventional Weapons,[15] 1997 Mine Ban Treaty,[16] 2008 Convention on Cluster Munitions,[17] and 2017 Treaty on the Prohibition of Nuclear Weapons.[18] Although a preamble does not establish binding rules, it can inform interpretation of a treaty and is typically used to incorporate, by reference, the context of already existing law. The inclusion of the Martens Clause indicates that if a treaty’s operative provisions present gaps, they should be filled by established custom, the principles of humanity, and the dictates of public conscience. By incorporating the Martens Clause into this line of disarmament treaties, states have reaffirmed its importance to international humanitarian law generally and weapons law specifically.

The widespread use of the Martens Clause makes it relevant to the current discussions of fully autonomous weapons. The clause provides a standard for ensuring that civilians and combatants receive at least minimum protections from such problematic weapons. In addition, most of the diplomatic discussions of fully autonomous weapons have taken place under the auspices of the CCW, which includes the Martens Clause in its preamble. Therefore, an evaluation of fully autonomous weapons under the Martens Clause should play a key role in the deliberations about a new CCW protocol.

III. Applicability and Significance of the Martens Clause

The Martens Clause applies in the absence of specific law on a topic. Experts disagree on its legal significance, but at a minimum, it provides factors that states must consider when examining new challenges raised by emerging technologies. Its importance to disarmament law in particular is evident in the negotiations that led to the adoption of a preemptive ban on blinding lasers. States and others should therefore take the clause into account when discussing the legality of fully autonomous weapons and how best to address them.

Applicability of the Martens Clause

The Martens Clause, as set out in Additional Protocol I, applies “[i]n cases not covered” by the protocol or by other international agreements.[19] No matter how careful they are, treaty drafters cannot foresee and encompass all circumstances in one instrument. The Martens Clause serves as a stopgap measure to ensure that an unanticipated situation or emerging technology does not subvert the overall purpose of humanitarian law merely because no existing treaty provision explicitly covers it.[20]

The Martens Clause is triggered when existing treaty law does not specifically address a certain circumstance. As the US Military Tribunal at Nuremberg explained, the clause makes “the usages established among civilized nations, the laws of humanity and the dictates of public conscience into the legal yardstick to be applied if and when the specific provisions of [existing law] do not cover specific cases occurring in warfare.”[21] It is particularly relevant to new technology that drafters of existing law may not have predicted. Emphasizing that the clause’s “continuing existence and applicability is not to be doubted,” the International Court of Justice highlighted that it has “proved to be an effective means of addressing the rapid evolution of military technology.”[22] Given that there is often a dearth of law in this area, the Martens Clause provides a standard for emerging weapons.

As a rapidly developing form of technology, fully autonomous weapons exemplify an appropriate subject for the Martens Clause. Existing international humanitarian law applies to fully autonomous weapons only in general terms. It requires that all weapons comply with the core principles of distinction and proportionality, but it does not contain specific rules for dealing with fully autonomous weapons.[23] Drafters of the Geneva Conventions could not have envisioned the prospect of a robot that could make independent determinations about when to use force without meaningful human control. Given that fully autonomous weapons present a case not covered by existing law, they should be evaluated under the principles articulated in the Martens Clause.

Legal Significance of the Martens Clause

Interpretations of the legal significance of the Martens Clause vary.[24] Some experts adopt a narrow perspective, asserting that the Martens Clause serves merely as a reminder that if a treaty does not expressly prohibit a specific action, the action is not automatically permitted. In other words, states should refer to customary international law when treaty law is silent on a specific issue.[25] This view is arguably unsatisfactory, however, because it addresses only one aspect of the clause—established custom—and fails to account for the role of the principles of humanity and the dictates of public conscience. Under well-accepted rules of legal interpretation, a clause should be read to give each of its terms meaning.[26] Treating the principles of humanity and the dictates of public conscience as simply elements of established custom would make them redundant and violate this rule.

Others argue that the Martens Clause is itself a unique source of law.[27] They contend that the plain language of the Martens Clause elevates the principles of humanity and the dictates of public conscience to independent legal standards against which to judge unanticipated situations and emerging forms of military technology.[28] On this basis, a situation or weapon that conflicts with either standard is per se unlawful.

Public international law jurist Antonio Cassese adopted a middle approach, treating the principles of humanity and dictates of public conscience as “fundamental guidance” for the interpretation of international law.[29] Cassese wrote that “[i]n case of doubt, international rules, in particular rules belonging to humanitarian law, must be construed so as to be consonant with general standards of humanity and the demands of public conscience.”[30] International law should, therefore, be understood not to condone situations or technologies that raise concerns under these prongs of the Martens Clause.

At a minimum, the Martens Clause provides factors for states to consider as they approach emerging weapons technology, including fully autonomous weapons. In 2018, the ICRC acknowledged the “debate over whether the Martens Clause constitutes a legally-binding yardstick against which the lawfulness of a weapon must be measured, or rather an ethical guideline.”[31] It concluded, however, that “it is clear that considerations of humanity and public conscience have driven the evolution of international law on weapons, and these notions have triggered the negotiation of specific treaties to prohibit or limit certain weapons.”[32] If concerns about a weapon arise under the principles of humanity or dictates of public conscience, adopting new, more specific law that eliminates doubts about the legality of a weapon can increase protections for civilians and combatants.

The Martens Clause also makes moral considerations legally relevant. It is codified in international treaties, yet it requires evaluating a situation or technology according to the principles of humanity and dictates of public conscience, both of which incorporate elements of morality. Peter Asaro, a philosopher of science and technology, writes that the Martens Clause invites “moral reflection on the role of the principles of humanity and the dictates of public conscience in articulating and establishing new [international humanitarian law].”[33] While a moral assessment of fully autonomous weapons is important in its own right, the Martens Clause also makes it a legal requirement in the absence of specific law.

Precedent of the Preemptive Ban on Blinding Lasers

States, international organizations, and civil society have invoked the Martens Clause in previous deliberations about unregulated, emerging technology.[34] They found it especially applicable to discussions of blinding lasers in the 1990s. These groups explicitly and implicitly referred to elements of the Martens Clause as justification for preemptively banning blinding lasers. CCW Protocol IV, adopted in 1995, codifies the prohibition.[35]

During a roundtable convened by the ICRC in 1991, experts highlighted the relevance of the Martens Clause. ICRC lawyer Louise Doswald-Beck argued that “[d]ecisions to impose specific restrictions on the use of certain weapon may be based on policy considerations,” and “that the criteria enshrined in the Martens clause [should] be particularly taken into account.”[36] Another participant said that “the Martens clause particularly addresses the problem of human suffering so that the ‘public conscience’ refers to what is seen as inhumane or socially unacceptable.”[37]

Critics of blinding lasers spoke in terms that demonstrated the weapons raised concerns under the principles of humanity and dictates of public conscience. Several speakers at the ICRC-convened meetings concurred that “weapons designed to blind are … socially unacceptable.”[38] The ICRC itself “appealed to the ‘conscience of humanity’” in advocating for a prohibition.[39] At the CCW’s First Review Conference, representatives of UN agencies and civil society described blinding lasers as “inhumane,”[40] “abhorrent to the conscience of humanity,”[41] and “unacceptable in the modern world.”[42] A particularly effective ICRC public awareness campaign used photographs of soldiers blinded by poison gas during World War I to emphasize the fact that permanently blinding soldiers is cruel and inhumane.

Such characterizations of blinding lasers were linked to the need for a preemptive ban. For example, during debate at the First Review Conference, Chile expressed its hope that the body “would be able to establish guidelines for preventative action to prohibit the development of inhumane technologies and thereby to avoid the need to remedy the misery they might cause.”[43] In a December 1995 resolution urging states to ratify Protocol IV, the European Parliament declared that “deliberate blinding as a method of warfare is abhorrent.”[44] Using the language of the Martens Clause, the European Parliament stated that “deliberate blinding as a method of warfare is … in contravention of established custom, the principles of humanity and the dictates of the public conscience.”[45] The ICRC welcomed Protocol IV as a “victory of civilization over barbarity.”[46]

The discussions surrounding CCW Protocol IV underscore the relevance of the Martens Clause to the current debate about fully autonomous weapons. They show that CCW states parties have a history of applying the Martens Clause to controversial weapons. They also demonstrate the willingness of these states to preemptively ban a weapon that they find counter to the principles of humanity and dictates of public conscience. As will be discussed in more depth below, fully autonomous weapons raise significant concerns under the Martens Clause. The fact that their impact on armed conflict would be exponentially greater than that of blinding lasers should only increase the urgency of filling the gap in international law and explicitly banning them.[47]

IV. The Principles of Humanity

The Martens Clause divides the principles of international law into established custom, the principles of humanity, and the dictates of public conscience. Given that customary law is applicable even without the clause, this report assesses fully autonomous weapons under the latter two elements. The Martens Clause does not define these terms, but they have been the subject of much scholarly and legal discussion.

The relevant literature illuminates two key components of the principles of humanity. Actors are required: (1) to treat others humanely, and (2) to show respect for human life and dignity. Due to their lack of emotion and judgment, fully autonomous weapons would face significant difficulties in complying with either.

Humane Treatment

Definition

The first principle of humanity requires the humane treatment of others. The Oxford Dictionary defines “humanity” as “the quality of being humane; benevolence.”[48] The obligation to treat others humanely is a key component of international humanitarian law and international human rights law.[49] It appears, for example, in common Article 3 and other provisions of the Geneva Conventions, numerous military manuals, international case law, and the International Covenant on Civil and Political Rights.[50] Going beyond these sources, the Martens Clause establishes that human beings must be treated humanely, even when specific law does not exist.[51]

In order to treat other human beings humanely, one must exercise compassion and make legal and ethical judgments.[52] Compassion, according to the ICRC’s fundamental principles, is the “stirring of the soul which makes one responsive to the distress of others.”[53] To show compassion, an actor must be able to experience empathy—that is, to understand and share the feelings of another—and be compelled to act in response.[54] This emotional capacity is vital in situations when determinations about the use of force are made.[55] It drives actors to make conscious efforts to minimize the physical or psychological harm they inflict on human beings. Acting with compassion builds on the premise that “capture is preferable to wounding an enemy, and wounding him better than killing him; that non-combatants shall be spared as far as possible; that wounds inflicted be light as possible, so that the injured can be treated and cured; and that the wounds cause the least possible pain.”[56]

While compassion provides a motivation to act humanely, legal and ethical judgment provides a means to do so. To act humanely, an actor must make considered decisions as to how to minimize harm.[57] Such decisions are based on the ability to perceive and understand one’s environment and to apply “common sense and world knowledge” to a specific circumstance.[58] Philosophy professor James Moor notes that actors must possess the capacity to “identify and process ethical information about a variety of situations and make sensitive determinations about what should be done in those situations.”[59] In this way, legal and ethical judgment helps an actor weigh relevant factors to ensure treatment meets the standards demanded by compassion. Judgment is vital to minimizing suffering: one can only refrain from harming humans if one both recognizes the possible harms and knows how to respond.[60]

Application to Fully Autonomous Weapons

Fully autonomous weapons would face significant challenges in complying with the principle of humane treatment because compassion and legal and ethical judgment are human characteristics. Empathy, and the compassion for others that it engenders, come naturally to human beings. Most humans have experienced physical or psychological pain, which drives them to avoid inflicting unnecessary suffering on others. Their feelings transcend national and other divides. As the ICRC notes, “feelings and gestures of solidarity, compassion, and selflessness are to be found in all cultures.”[61] People’s shared understanding of pain and suffering leads them to show compassion towards fellow human beings and inspires reciprocity that is, in the words of the ICRC, “perfectly natural.”[62]

Regardless of the sophistication of a fully autonomous weapon, it could not experience emotions.[63] There are some advantages associated with being impervious to emotions such as anger and fear, but a robot’s inability to feel empathy and compassion would severely limit its ability to treat others humanely. Because they would not be sentient beings, fully autonomous weapons could not know physical or psychological suffering. As a result, they would lack the shared experiences and understandings that cause humans to relate empathetically to the pain of others, have their “souls stirred,” and be driven to exercise compassion towards other human beings. Amanda Sharkey, a professor of computer science, has written that “current robots, lacking living bodies, cannot feel pain, or even care about themselves, let alone extend that concern to others. How can they empathize with a human’s pain or distress if they are unable to experience either emotion?”[64] Fully autonomous weapons would therefore face considerable difficulties in guaranteeing their acts are humane and in compliance with the principles of humanity.

Robots would also not possess the legal and ethical judgment necessary to minimize harm on a case-by-case basis.[65] Situations involving use of force, particularly in armed conflict, are often complex and unpredictable and can change quickly. Fully autonomous weapons would therefore encounter significant obstacles to making appropriate decisions regarding humane treatment. After examining numerous studies in which researchers attempted to program ethics into robots, Sharkey found that robots exhibiting behavior that could be described as “ethical” or “minimally ethical” could operate only in constrained environments. Sharkey concluded that robots have limited moral capabilities and therefore should not be used in circumstances that “demand moral competence and an understanding of the surrounding social situation.”[66] Complying with international law frequently requires subjective decision-making in complex situations. Fully autonomous weapons would have limited ability to interpret the nuances of human behavior, understand the political, socioeconomic, and environmental dynamics of the situation, and comprehend the humanitarian risks of the use of force in a particular context.[67] These limitations would compromise the weapons’ ability to ensure the humane treatment of civilians and combatants and comply with the first principle of humanity.

Respect for Human Life and Dignity

Definition

A second principle of humanity requires actors to respect both human life and human dignity. Christof Heyns, former special rapporteur on extrajudicial, summary or arbitrary executions, highlighted these related but distinct concepts when he posed two questions regarding fully autonomous weapons: “[C]an [they] do or enable proper targeting?” and “Even if they can do proper targeting, should machines hold the power of life and death over humans?”[68] The first considers whether a weapon can comply with international law’s rules on protecting life. The second addresses the “manner of targeting” and whether it respects human dignity.[69]

In order to respect human life, actors must take steps to minimize killing.[70] The right to life states that “[n]o one shall be arbitrarily deprived of his life.”[71] It limits the use of lethal force to circumstances in which it is absolutely necessary to protect human life, constitutes a last resort, and is applied in a manner proportionate to the threat.[72] Codified in Article 6 of the International Covenant on Civil and Political Rights, the right to life has been recognized as the “supreme right” of international human rights law, which applies under all circumstances. During times of armed conflict, international humanitarian law determines what constitutes arbitrary or unjustified deprivation of life. It requires that actors comply with the rules of distinction, proportionality, and military necessity in situations of armed conflict.[73]

Judgment and emotion promote respect for life because they can serve as checks on killing. The ability to make legal and ethical judgments can help an actor determine which course of action will best protect human life in the infinite number of potential unforeseen scenarios. An instinctive resistance to killing provides a psychological motivation to comply with, and sometimes go beyond, the rules of international law in order to minimize casualties.

Under the principles of humanity, actors must also respect the dignity of all human beings. This obligation is premised on the recognition that every human being has inherent worth that is both universal and inviolable.[74] Numerous international instruments—including the Universal Declaration of Human Rights, the International Covenant on Civil and Political Rights, the Vienna Declaration and Programme of Action adopted at the 1993 World Human Rights Conference, and regional treaties—enshrine the importance of dignity as a foundational principle of human rights law.[75] The African Charter on Human and Peoples’ Rights explicitly states that individuals have “the right to the respect of the dignity inherent in a human being.”[76]

While respect for human life involves minimizing the number of deaths and avoiding arbitrary or unjustified ones, respect for human dignity requires an appreciation of the gravity of a decision to kill.[77] The ICRC explained that it matters “not just if a person is killed or injured but how they are killed or injured, including the process by which these decisions are made.”[78] Before taking a life, an actor must truly understand the value of a human life and the significance of its loss. Humans should be recognized as unique individuals and not reduced to objects with merely instrumental or no value.[79] If an actor kills without taking into account the worth of the individual victim, the killing undermines the fundamental notion of human dignity and violates this principle of humanity.

Application to Fully Autonomous Weapons

It is highly unlikely that fully autonomous weapons would be able to respect human life and dignity. Their lack of legal and ethical judgment would interfere with their capacity to respect human life. For example, international humanitarian law’s proportionality test requires commanders to determine whether anticipated military advantage outweighs expected civilian harm on a case-by-case basis. Given the infinite number of contingencies that may arise on the battlefield, fully autonomous weapons could not be preprogrammed to make such determinations. The generally accepted standard for assessing proportionality is whether a “reasonable military commander” would have launched a particular attack,[80] and reasonableness requires making decisions based on ethical as well as legal considerations.[81] Unable to apply this standard to the proportionality balancing test, fully autonomous weapons would likely endanger civilians and potentially violate international humanitarian law.[82]

Fully autonomous weapons would also lack the instinctual human resistance to killing that can protect human life beyond the minimum requirements of the law.[83] An inclination to avoid killing comes naturally to most people because they have an innate appreciation for the inherent value of human life. Empirical research demonstrates the reluctance of human beings to take the lives of other humans. For example, a retired US Army Ranger who conducted extensive research on killing during armed conflict found that “there is within man an intense resistance to killing their fellow man. A resistance so strong that, in many circumstances, soldiers on the battlefield will die before they can overcome it.”[84] As inanimate objects, fully autonomous weapons could not lose their own life or understand the emotions associated with the loss of the life of a loved one. It is doubtful that a programmer could replicate in a robot a human’s natural inclination to avoid killing and to protect life with the complexity and nuance that would mirror human decision making.

Fully autonomous weapons could not respect human dignity, which relates to the process behind, rather than the consequences of, the use of force.[85] As machines, they could truly comprehend neither the value of individual life nor the significance of its loss. They would base decisions to kill on algorithms without considering the humanity of a specific victim.[86] Moreover, these weapons would be programmed in advance of a scenario and could not account for the necessity of lethal force in a specific situation. In a CCW presentation as special rapporteur, Christof Heyns explained that:

to allow machines to determine when and where to use force against humans is to reduce those humans to objects; they are treated as mere targets. They become zeros and ones in the digital scopes of weapons which are programmed in advance to release force without the ability to consider whether there is no other way out, without a sufficient level of deliberate human choice about the matter.[87]

Mines Action Canada similarly concluded that “[d]eploying [fully autonomous weapons] in combat displays the belief that any human targeted in this way does not warrant the consideration of a live operator, thereby robbing that human life of its right to dignity.”[88] Allowing a robot to take a life when it cannot understand the inherent worth of that life or the necessity of taking it disrespects and demeans the person whose life is taken. It is thus irreconcilable with the principles of humanity enshrined in the Martens Clause.

When used in appropriate situations, AI has the potential to provide extraordinary benefits to humankind. Allowing robots to make determinations to kill humans, however, would be contrary to the Martens Clause, which merges law and morality. Limitations in the emotional, perceptive, and ethical capabilities of these machines significantly hinder their ability to treat other human beings humanely and to respect human life and dignity. Consequently, the use of these weapons would be incompatible with the principles of humanity as set forth in the Martens Clause.

V. The Dictates of Public Conscience

The Martens Clause states that in the absence of treaty law, the dictates of public conscience along with the principles of humanity protect civilians and combatants. The reference to “public conscience” instills the law with morality and requires that assessments of the means and methods of war account for the opinions of citizens and experts as well as governments. The reactions of these groups to the prospect of fully autonomous weapons make it clear that the development, production, and use of such technology would raise serious concerns under the Martens Clause.

Definition

The dictates of public conscience refer to shared moral guidelines that shape the actions of states and individuals.[89] The use of the term “conscience” indicates that the dictates are based on a sense of morality, a knowledge of what is right and wrong.[90] According to philosopher Peter Asaro, conscience implies “feeling compelled by, or believing in, a specific moral obligation or duty.”[91] The adjective “public” clarifies that these dictates reflect the concerns of a range of people and entities. Building on the widely cited work of jurist and international humanitarian law expert Theodor Meron, this report looks to two sources in particular to determine what qualifies as the public conscience: the opinion of the public and the opinions of governments.[92]

Polling data and experts’ views provide evidence of public opinion.[93] Surveys reveal the perspectives and beliefs of ordinary individuals. They can also illuminate nuanced differences in the values and understandings of laypeople. While informative, polls, by themselves, are not sufficient measures of the public conscience, in part because the responses can be influenced by the nature of the questions asked and do not necessarily reflect moral consideration.[94] The statements and actions of experts, who have often deliberated at length on the questions at issue, reflect a more in-depth understanding.[95] Their specific expertise may range from religion to technology to law, but they share a deep knowledge of the topic. The views they voice can thus shed light on the moral norms embraced by the informed public.[96]

Governments articulate their stances through policies and in written statements and oral interventions at diplomatic meetings and other public fora. Their positions reflect the perspectives of countries that differ in economic development, military prowess, political systems, religious and cultural traditions, and demographics. Government opinion can help illuminate opinio juris, an element of customary international law, which refers to a state’s belief that a certain practice is legally obligatory.[97]

Application to Fully Autonomous Weapons

The positions of individuals and governments around the world have demonstrated that fully autonomous weapons are highly problematic under the dictates of public conscience. Through opinion polls, open letters, oral and written statements, in-depth publications, and self-imposed guidelines, members of the public have shared their distress and outrage at the prospect of these weapons. Government officials from more than 100 countries have expressed similar concerns and spoken in favor of imposing limits on fully autonomous weapons.[98] While public opposition to fully autonomous weapons is not universal, collectively, these voices show that it is both widespread and growing.[99]

Opinion of the Public

Public opposition to the development, production, and use of fully autonomous weapons is significant and spreading. Several public opinion polls have revealed individuals’ resistance to these weapons.[100] These findings are mirrored in statements made by leaders in the relevant fields of disarmament and human rights, peace and religion, science and technology, and industry. While not comprehensive, the sources discussed below exemplify the nature and range of public opinion and provide evidence of the public conscience.

Surveys

Public opinion surveys conducted around the world have documented widespread opposition to the development, production, and use of these weapons. According to these polls, the majority of people surveyed found the prospect of delegating life-and-death decisions to machines unacceptable. For example, a 2013 survey of Americans, conducted by political science professor Charli Carpenter, found that 55 percent of respondents opposed the “trend toward using” fully autonomous weapons.[101] This position was shared roughly equally across genders, ages, and political ideologies. Interestingly, active duty military personnel, who understand the realities of armed conflict first hand, were among the strongest objectors; 73 percent expressed opposition to fully autonomous weapons.[102] The majority of respondents to this poll also supported a campaign to ban the weapons.[103] A more recent national survey of about 1,000 Belgians, which was released on July 3, 2018, found that 60 percent of respondents believed that “Belgium should support international efforts to ban the development, production and use of fully autonomous weapons.” Only 23 percent disagreed.[104]

International opinion polls have produced similar results. In 2015, the Open Robotics Initiative surveyed more than 1,000 individuals from 54 different countries and found that 56 percent of respondents opposed the development and use of what it called lethal autonomous weapons systems.[105] Thirty-four percent of all respondents rejected development and use because “humans should always be the one to make life/death decisions.”[106] Other motivations cited less frequently included the weapons’ unreliability, the risk of proliferation, and lack of accountability.[107] An even larger survey by Ipsos of 11,500 people from 25 countries produced similar results in 2017.[108] This poll explained that the United Nations was reviewing the “strategic, legal and moral implications of autonomous weapons systems” (equivalent to fully autonomous weapons) and asked participants how they felt about the weapons’ use. Fifty-six percent recorded their opposition.[109]

Nongovernmental and International Organizations

Providing further evidence of concerns under the dictates of public conscience, experts from a range of fields have felt compelled, especially for moral reasons, to call for a prohibition on the development, production, and use of fully autonomous weapons. The Campaign to Stop Killer Robots, a civil society coalition of 75 NGOs, is spearheading the effort to ban fully autonomous weapons.[110] Its NGO members are active in more than 30 countries and include groups with expertise in humanitarian disarmament, peace and conflict resolution, technology, human rights, and other relevant fields.[111] Human Rights Watch, which co-founded the campaign in 2012, serves as its coordinator. Over the past six years, the campaign’s member organizations have highlighted the many problems associated with fully autonomous weapons through dozens of publications and statements made at diplomatic meetings and UN events, on social media, and in other fora.[112]

While different concerns resonate with different people, Steve Goose, director of Human Rights Watch’s Arms Division, highlighted the importance of the Martens Clause in his statement to the April 2018 CCW Group of Governmental Experts meeting. Goose said:

There are many reasons to reject [lethal autonomous weapons systems] (including legal, accountability, technical, operational, proliferation, and international security concerns), but ethical and moral concerns—which generate the sense of revulsion—trump all. These ethical concerns should compel High Contracting Parties of the Convention on Conventional Weapons to take into account the Martens Clause in international humanitarian law, under which weapons that run counter to the principles of humanity and the dictates of the public conscience should not be developed.[113]

The ICRC has encouraged states to assess fully autonomous weapons under the Martens Clause and observed that “[w]ith respect to the public conscience, there is a sense of deep discomfort with the idea of any weapon system that places the use of force beyond human control.”[114] The ICRC has repeatedly emphasized the legal and ethical need for human control over the critical functions of selecting and attacking targets. In April 2018, it made clear its view that “a minimum level of human control is required to ensure compliance with international humanitarian law rules that protect civilians and combatants in armed conflict, and ethical acceptability in terms of the principles of humanity and the public conscience.”[115] The ICRC explained that international humanitarian law “requires that those who plan, decide upon and carry out attacks make certain judgements in applying the norms when launching an attack. Ethical considerations parallel this requirement—demanding that human agency and intention be retained in decisions to use force.”[116] The ICRC concluded that a weapon system outside human control “would be unlawful by its very nature.”[117]

Peace and Faith Leaders

In 2014, more than 20 individuals and organizations that had received the Nobel Peace Prize issued a joint letter stating that they “whole-heartedly embrace [the] goal of a preemptive ban on fully autonomous weapons” and find it “unconscionable that human beings are expanding research and development of lethal machines that would be able to kill people without human intervention.”[118] The individual signatories to the letter included American activist Jody Williams, who led the civil society drive to ban landmines, along with heads of state and politicians, human rights and peace activists, a lawyer, a journalist, and a church leader.[119] The Pugwash Conferences on Science and World Affairs, an organizational signatory, and the Nobel Women’s Initiative, which helped spearhead the letter, are both on the steering committee of the Campaign to Stop Killer Robots.

Religious leaders have similarly united against fully autonomous weapons. In 2014, more than 160 faith leaders signed an “interreligious declaration calling on states to work towards a global ban on fully autonomous weapons.”[120] In language that implies concerns under the principles of humanity, the declaration describes such weapons as “an affront to human dignity and to the sacredness of life.”[121] The declaration further criticizes the idea of delegating life-and-death decisions to a machine because fully autonomous weapons have “no moral agency and, as a result, cannot be held responsible if they take an innocent life.”[122] The list of signatories encompassed representatives of Buddhism, Catholicism, Islam, Judaism, Protestantism, and Quakerism. Archbishop Desmond Tutu signed both this declaration and the Nobel Peace Laureates letter.

Science and Technology Experts

Individuals with technological expertise have also expressed opposition to fully autonomous weapons. The International Committee for Robot Arms Control (ICRAC), whose members study technology from various disciplines, raised the alarm in 2013 shortly after it co-founded the Campaign to Stop Killer Robots.[123] ICRAC issued a statement endorsed by more than 270 experts calling for a ban on the development and deployment of fully autonomous weapons.[124] Members of ICRAC noted “the absence of clear scientific evidence that robot weapons have, or are likely to have in the foreseeable future, the functionality required for accurate target identification, situational awareness or decisions regarding the proportional use of force” and concluded that “[d]ecisions about the application of violent force must not be delegated to machines.”[125] While the concerns emphasized in this statement focus on technology, as discussed above the inability to make proportionality decisions can run counter to the respect for life and principles of humanity.

In 2015, an even larger group of AI and robotics researchers issued an open letter. As of June 2018, more than 3,500 scientists, as well as more than 20,000 individuals, had signed this call for a ban.[126] The letter warns that these machines could become the “Kalashnikovs of tomorrow” if their development is not prevented.[127] It states that while the signatories “believe that AI has great potential to benefit humanity in many ways,” they “believe that a military AI arms race would not be beneficial for humanity. There are many ways in which AI can make battlefields safer for humans, especially civilians, without creating new tools for killing people.”[128]

In addition to demanding action from others, thousands of technology experts have committed not to engage in actions that would advance the development of fully autonomous weapons. At a world congress held in Stockholm in July 2018, leading AI researchers issued a pledge to “neither participate in nor support the development, manufacture, trade, or use of lethal autonomous weapons.”[129] By the end of the month, more than 2,850 AI experts, scientists and other individuals along with 223 technology companies, societies and organizations from at least 36 countries had signed. The pledge, which cites moral, accountability, proliferation, and security-related concerns, finds that “the decision to take a human life should never be delegated to a machine.” It states, “There is a moral component to this position, that we should not allow machines to make life-taking decisions for which others—or nobody—will be culpable.”[130] According to the Future of Life Institute, which houses the pledge on its website, the pledge is necessary because “politicians have thus far failed to put into effect” any regulations and laws against lethal autonomous weapons systems.[131]

Industry

High-profile technology companies and their representatives have criticized fully autonomous weapons on various grounds. A Canadian robotics manufacturer, Clearpath Robotics, became the first company publicly to refuse to manufacture “weaponized robots that remove humans from the loop.”[132] In 2014, it pledged to “value ethics over potential future revenue.”[133] In a letter to the public, the company stated that it was motivated by its belief “that the development of killer robots is unwise, unethical, and should be banned on an international scale.” Clearpath continued:

[W]ould a robot have the morality, sense, or emotional understanding to intervene against orders that are wrong or inhumane? No. Would computers be able to make the kinds of subjective decisions required for checking the legitimacy of targets and ensuring the proportionate use of force in the foreseeable future? No. Could this technology lead those who possess it to value human life less? Quite frankly, we believe this will be the case.[134]

The letter shows that fully autonomous weapons raise problems under both the principles of humanity and dictates of public conscience.

In August 2017, the founders and chief executive officers (CEOs) of 116 AI and robotics companies published a letter calling for CCW states parties to take action on autonomous weapons.[135] The letter opens by stating, “As companies building the technologies in Artificial Intelligence and Robotics that may be repurposed to develop autonomous weapons, we feel especially responsible in raising this alarm.”[136] The letter goes on to highlight the dangers to civilians, risk of an arms race, and possibility of destabilizing effects. It warns that “[o]nce this Pandora’s box is opened, it will be hard to close.”[137] In a similar vein in 2018, Scott Phoenix, CEO of Vicarious, a prominent AI development company, described developing autonomous weapons as among the “world’s worst ideas” because of the likelihood of defects in their codes and vulnerability to hacking.[138]

Google and the companies under its Alphabet group have been at the center of the debate about fully autonomous weapons on multiple occasions. DeepMind is an AI research company that was acquired by Google in 2014. In 2016, it submitted evidence to a UK parliamentary committee in which it described a ban on autonomous weapons as “the best approach to averting the harmful consequences that would arise from the development and use of such weapons.”[139] DeepMind voiced particular concern about the weapons’ “implications for global stability and conflict reduction.”[140] Two years later, more than 3,000 Google employees protested the company’s involvement with “Project Maven,” a US Department of Defense program that aims to use AI to autonomously process video footage taken by surveillance drones. The employees argued that the company should “not be in the business of war,”[141] and more than 1,100 academics supported them in a separate letter.[142] In June 2018, Google agreed to end its involvement in Project Maven once the contract expires in 2019, and it issued ethical principles committing not to develop AI for use in weapons. The principles state that Google is “not developing AI for use in weapons” and “will not design or deploy AI” for technology that causes “overall harm” or “contravenes widely accepted principles of international law and human rights.”[143]

Investors in the technology industry have also started to respond to the ethical concerns raised by fully autonomous weapons. In 2016, the Ethics Council of the Norwegian Government Pension Fund announced that it would monitor investments in the development of these weapons to decide whether they are counter to the council’s guidelines.[144] Johan H. Andresen, council chairman, reiterated that position in a panel presentation for CCW delegates in April 2018.[145]

Opinions of Governments

Governments from around the world have increasingly shared the views of experts and the broader public that the development, production, and use of weapons without meaningful human control is unacceptable. As of April 2018, 26 nations—from Africa, Asia, Europe, Latin America, and the Middle East—have called for a preemptive ban on fully autonomous weapons.[146] In addition, more than 100 states, including those of the Non-Aligned Movement (NAM), have called for a legally binding instrument on such weapons. In a joint statement, members of NAM cited “ethical, legal, moral and technical, as well as international peace and security related questions” as matters of concern.[147] While a complete analysis of government interventions over the past five years is beyond the scope of this report, overall the statements have demonstrated that countries oppose the loss of human control on moral as well as legal, technical, and other grounds. The opinions of these governments, reflective of public concerns, bolster the argument that fully autonomous weapons violate the dictates of public conscience.

The principles embedded in the Martens Clause have played a role in international discussions of fully autonomous weapons since they began in 2013. In that year, Christof Heyns, then Special Rapporteur on extrajudicial, summary or arbitrary executions, submitted a report to the UN Human Rights Council on fully autonomous weapons, which he referred to as “lethal autonomous robotics.”[148] Emphasizing the importance of human control over life-and-death decisions, Heyns explained that “[i]t is an underlying assumption of most legal, moral and other codes that when the decision to take life or to subject people to other grave consequences is at stake, the decision-making power should be exercised by humans.”[149] He continued: “Delegating this process dehumanizes armed conflict even further and precludes a moment of deliberation in those cases where it may be feasible. Machines lack morality and mortality, and should as a result not have life and death powers over humans.”[150] Heyns also named the Martens Clause as one legal basis for his determination.[151] The 2013 report called for a moratorium on the development of fully autonomous weapons until the establishment of an “internationally agreed upon framework.”[152] A 2016 joint report by Heyns and Maina Kiai, then UN Special Rapporteur on freedom of peaceful assembly and of association, went a step further, recommending that “[a]utonomous weapons systems that require no meaningful human control should be prohibited.”[153]

In May 2013, in response to Heyns’s report, the UN Human Rights Council held the first discussions of the weapons at the international level.[154] Of the 20 nations that voiced their positions, many articulated concerns about the emerging technology. They often used language related to the Martens Clause or morality more generally. Ecuador explicitly referred to elements of the Martens Clause and stated that leaving life-and-death decisions to machines would contravene the public conscience.[155] Indonesia raised objections related to the principles of humanity discussed above. It criticized the “possible far-reaching effects on societal values, including fundamentally on the protection and the value of life” that could arise from the use of these weapons.[156] Russia recommended that “particular attention” be paid to the “serious implications for societal foundations, including the negating of human life.”[157] Pakistan called for a ban based on the precedent of the preemptive ban on blinding lasers, which was motivated in large part by the Martens Clause.[158] Brazil also addressed issues of morality; it said, “If the killing of one human being by another has been a challenge that legal, moral, and religious codes have grappled with since time immemorial, one may imagine the host of additional concerns to be raised by robots exercising the power of life and death over humans.”[159] While Human Rights Council member states also addressed other important risks of fully autonomous weapons, especially those related to security, morality was a dominant theme.[160]

Since the Human Rights Council’s session in 2013, most diplomatic discussions have taken place under the auspices of the Convention on Conventional Weapons.[161] States parties to the CCW held three informal meetings of experts on what they refer to as “lethal autonomous weapons systems” between 2014 and 2016.[162] At their 2016 Review Conference, they agreed to formalize discussions in a Group of Governmental Experts, a forum that is generally expected to produce an outcome such as a new CCW protocol.[163] More than 80 states participated in the most recent meeting of the group in April 2018. At that meeting, Austria noted “that CCW’s engagement on lethal autonomous weapons stands testimony to the high level of concern about the risk that such weapons entail.”[164] The CCW’s engagement also serves as an indication that the public conscience is against this technology.

CCW states parties have highlighted the relevance of the Martens Clause at each of their meetings on lethal autonomous weapons systems. At the first meeting in May 2014, for example, Brazil described the Martens Clause as a “keystone” of international humanitarian law, which “‘allows us to navigate safely in new and dangerous waters’ and to feel confident that a human remains protected under the principles of humanity and the dictates of public conscience.”[165] Mexico found “there is absolutely no doubt that the development of these new technologies have to comply with [the] principles” of the Martens Clause.[166] At the second CCW experts meeting in April 2015, Russia described the Martens Clause as “an integral part of customary international law.”[167] Adopting a narrow interpretation of the provision, the United States said that “the Martens Clause is not a rule of international law that prohibits any particular weapon, much less a weapon that does not currently exist.” Nevertheless, it acknowledged that “the principles of humanity and the dictates of public conscience provide a relevant and important paradigm for discussing the moral or ethical issues related to the use of automation in warfare.”[168]

Several CCW states parties have based their objections to fully autonomous weapons on the Martens Clause and its elements. In a joint statement in April 2018, the African Group said that the “principles of humanity and dictates of public conscience as enunciated in the [Martens] Clause must be taken seriously.”[169] The African Group called for a preemptive ban on lethal autonomous weapons systems, declaring that its members found “it inhumane, abhorrent, repugnant, and against public conscience for humans to give up control to machines, allowing machines to decide who lives or dies, how many lives and whose life is acceptable as collateral damage when force is used.”[170] The Holy See condemned fully autonomous weapons because they “could never be a morally responsible subject. The unique human capacity for moral judgment and ethical decision-making is more than a complex collection of algorithms and such a capacity cannot be replaced by, or programed in, a machine.” The Holy See warned that autonomous weapons systems could find normal and acceptable “those behaviors that international law prohibits, or that, albeit not explicitly outlined, are still forbidden by dictates of morality and public conscience.”[171]

At the April 2018 meeting, other states raised issues under the Martens Clause more implicitly. Greece, for example, stated that “it is important to ensure that commanders and operators will remain on the loop of the decision making process in order to ensure the appropriate human judgment over the use of force, not only for reasons related to accountability but mainly to protect human dignity over the decision on life or death.”[172]

CCW states parties have considered a host of other issues surrounding lethal autonomous weapons systems over the past five years. They have highlighted, inter alia, the challenges of complying with international humanitarian law and international human rights law, the potential for an accountability gap, the risk of an arms race and a lower threshold for war, and the weapons’ vulnerability to hacking. Combined with the Martens Clause, these issues have led to convergence of views on the imperative of retaining some form of human control over weapons systems and the use of force. In April 2018, Pakistan noted that “a general sense is developing among the High Contracting Parties that weapons with autonomous functions must remain under the direct control and supervision of humans at all times, and must comply with international law.”[173] Similarly, the European Union stated that its members “firmly believe that humans should make the decisions with regard to the use of lethal force, exert sufficient control over lethal weapons systems they use, and remain accountable for decisions over life and death.”[174]

The year 2018 has also seen increased parliamentary and UN calls for human control. In July, the Belgian Parliament adopted a resolution asking the government to support international efforts to ban the use of fully autonomous weapons.[175] The same month, the European Parliament voted to recommend that the UN Security Council:

work towards an international ban on weapon systems that lack human control over the use of force as requested by Parliament on various occasions and, in preparation of relevant meetings at UN level, to urgently develop and adopt a common position on autonomous weapon systems and to speak at relevant fora with one voice and act accordingly.[176]

In his 2018 disarmament agenda, the UN secretary-general noted, “All sides appear to be in agreement that, at a minimum, human oversight over the use of force is necessary.” He offered to support the efforts of states “to elaborate new measures, including through politically or legally binding arrangements, to ensure that humans remain at all times in control over the use of force.”[177] While the term remains to be defined, requiring “human control” is effectively the same as prohibiting weapons without such control. Therefore, the widespread agreement about the necessity of human control indicates that fully autonomous weapons contravene the dictates of public conscience.

VI. The Need for a Preemptive Ban Treaty

The Martens Clause fills a gap when existing treaties fail to specifically address a new situation or technology. In such cases, the principles of humanity and the dictates of public conscience serve as guides for interpreting international law and set standards against which to judge the means and methods of war. In so doing, they provide a baseline for adequately protecting civilians and combatants. The clause, which is a provision of international humanitarian law, also integrates moral considerations into legal analysis.

Existing treaties only regulate fully autonomous weapons in general terms, and thus an assessment of the weapons should take the Martens Clause into account. Because fully autonomous weapons raise concerns under both the principles of humanity and the dictates of public conscience, the Martens Clause points to the urgent need to adopt a specific international agreement on the emerging technology. To eliminate any uncertainty and comply with the elements of the Martens Clause, the new instrument should take the form of a preemptive ban on the development, production, and use of fully autonomous weapons.

There is no way to regulate fully autonomous weapons short of a ban that would ensure compliance with the principles of humanity. Fully autonomous weapons would lack the compassion and legal and ethical judgment that facilitate humane treatment of humans. They would face significant challenges in respecting human life. Even if they could comply with legal rules of protection, they would not have the capacity to respect human dignity.

Limiting the use of fully autonomous weapons to certain locations, such as those where civilians are rare, would not sufficiently address these problems. “Harm to others,” which the principle of humane treatment seeks to avoid, encompasses harm to civilian objects, which might be present where civilians themselves are not. The requirement to respect human dignity applies to combatants as well as civilians, so the weapons should not be permitted where enemy troops are positioned. Furthermore, allowing fully autonomous weapons to be developed and to enter national arsenals would raise the possibility of their misuse. They would likely proliferate to actors with no regard for human suffering and no respect for human life or dignity. The 2017 letter from technology company CEOs warned that the weapons could be “weapons of terror, weapons that despots and terrorists use against innocent populations, and weapons hacked to behave in undesirable ways.”[178] Regulation that allowed for the existence of fully autonomous weapons would open the door to violations of the principles of humanity.

A ban is also necessary to promote compliance with the dictates of public conscience. An overview of public opinion shows that ordinary people and experts alike have objected to the prospect of fully autonomous weapons on moral grounds. Public opinion surveys have illuminated significant opposition to these weapons based on the problems of delegating life-and-death decisions to machines. Experts have continually called for a preemptive ban on fully autonomous weapons, citing moral along with legal and security concerns. Regulation that allows for the existence of fully autonomous weapons, even if they could only be used in limited circumstances, would be inconsistent with the widespread public belief that fully autonomous weapons are morally wrong.

The statements of governments, another element of the public conscience, illuminate that opposition to weapons that lack human control over the selection and engagement of targets extends beyond individuals to countries. More than two dozen countries have explicitly called for a preemptive ban on these weapons,[179] and consensus is emerging regarding the need for human control over the use of force. As noted above, the requirement for human control is effectively equivalent to a ban on weapons without it. Therefore, a ban would best ensure that the dictates of public conscience are met.

The principles of humanity and dictates of public conscience bolster the case against fully autonomous weapons, although, as discussed above, they are not the only matter of concern. Fully autonomous weapons are also problematic under other legal provisions and raise accountability, technological, and security risks. Collectively, these dangers to humanity more than justify the creation of new law that maintains human control over the use of force and prevents fully autonomous weapons from coming into existence.

Acknowledgments

Bonnie Docherty, senior researcher in the Arms Division of Human Rights Watch, was the lead writer and editor of this report. She is also the associate director of armed conflict and civilian protection and a lecturer on law at the International Human Rights Clinic (IHRC) at Harvard Law School. Danielle Duffield, Annie Madding, and Paras Shah, students in IHRC, made major contributions to the research, analysis, and writing of the report. Steve Goose, director of the Arms Division, and Mary Wareham, advocacy director of the Arms Division, edited the report. Dinah PoKempner, general counsel, and Tom Porteous, deputy program director, also reviewed the report. Peter Asaro, associate professor at the School of Media Studies at the New School, provided additional feedback on the report.

This report was prepared for publication by Marta Kosmyna, senior associate in the Arms Division, Fitzroy Hepkins, administrative manager, and Jose Martinez, senior coordinator. Russell Christian produced the cartoon for the report cover.